|

Getting your Trinity Audio player ready...

|

Artificial Intelligence on Twitter:

An inside look at how Twitter used big data and artificial intelligence to moderate content

Twitter AI: The Role of Artificial Intelligence in Content Moderation

How does Twitter use artificial intelligence and machine learning?

Twitter uses large-scale machine learning and AI for sentiment analysis, bot analysis and detection of fake accounts, image classification and more.

From Amazon to Instagram, Sephora, Microsoft, and Twitter, AI will shape the future of speech in America and beyond. Every modern company leverages artificial intelligence.

The big question is not if they use it, but how it is being used, and what impact will this have on consumer privacy in the future.

Social Media content decisions have become highly political, and artificial intelligence has facilitated the moderation process at scale. But along the way, the public was left in the dark on just how large of a role machine learning plays in large-scale content operations in Silicon Valley.

Discussions about online content moderation rarely focus on how decisions by social media companies — often made with AI input — impact regular users.

Internal Twitter documents I obtained provide a window into the scope of the platform’s reliance on AI in the realm of political content moderation, meaning what phrases or entities were deemed misinformation and were later flagged for manual review. The documents show that between Sept 13, 2021-Nov 12, 2022, when political unrest was taking place, Twitter was flagging a host of phrases including the American flag emoji, MyPillow, Trump, and election fraud.

AN INSIDE GLIMPSE INTO THE INTERNAL MACHINE LEARNING PROCESSES OF TWITTER

Insider documents reveal unprecedented access and an inside look at how Twitter flagged political misinformation.

While much of the conversation around the so-called Twitter Files releases have focused on how government agencies sought to pressure Twitter into moderating content on its platform, the role of artificial intelligence has remained widely misunderstood.

Social Media content decisions today are aided by artificial intelligence, machine learning, and natural language processing. which helps facilitate moderation at scale. I spoke with a former Twitter Data Scientist, who previously worked at Twitter doing machine learning in US Elections and political misinformation on AI and machine learning. The former employee consented to this interview on the condition of anonymity.

Twitter created a data science team that was focused on combatting U.S. political misinformation. This new division was created after the 2016 election. The team was comprised of data scientists who frequently corresponded with Trust and Safety at Twitter as well as third party government agencies including The CDC to identify alleged political misinformation. Content moderation and Machine Learning at Twitter was directly influenced by third party government agencies, academic researchers, and Trust and Safety.

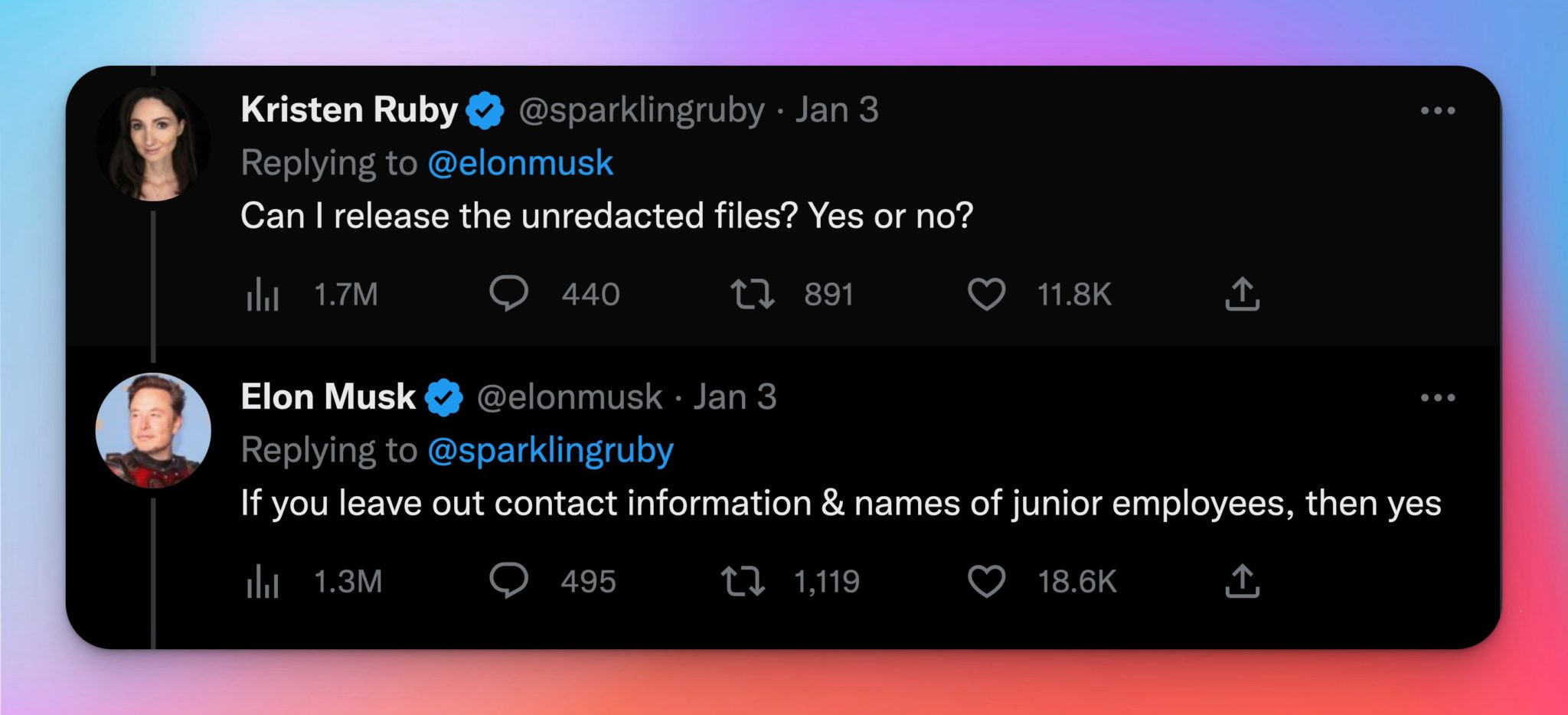

The Ruby Files reveals an inside look into how Twitters’ machine learning detected for political misinformation, and the word lists used in natural language processing.

I was granted access to internal Twitter documents by a former employee. These documents showed examples of words that were flagged for automated and manual removal by Twitter.

These company documents have never been previously published anywhere else. What follows is that discussion.

Worth a read

— Elon Musk (@elonmusk) December 10, 2022

EXECUTIVE SUMMARY:

ALGORITHMIC POLITICAL BIAS. Political bias was exhibited in Twitter’s training dataset in the U.S. Political Misinformation Data Science division that monitored election content. Multiple independent Data Scientists who reviewed the dataset confirmed this to be true.

UNINFORMED CONSENT FOR MACHINE LEARNING EXPERIMENTATION ON USERS. Twitter ran numerous Machine Learning experiments on users at any given time. Some of these experiments were ethically questionable. Users did not give consent, nor were they told that any of the experiments were happening. It is reasonable for a company to do A/B testing or Machine Learning experimentation as long as the process is transparent and users can opt-in or opt-out and know what they are participating in.

LACK OF PARAMETER TRANSPARENCY. A former Twitter Data Scientist alleged that the n-gram parameters pertaining to U.S. political misinformation were changed weekly at Twitter. How often are these parameters being updated, clean, and retrained now? If any of this algorithmic filtering is live on the platform, censorship will still be in effect on Twitter. Since the n-gram technology used by Twitter was referred to by Musk as primitive, what new Machine Learning system & technology will Twitter replace this with to incorporate deep learning to prevent word filtering mistakes that often result in suspensions due to lack of context?

NO CLEAR LINE BETWEEN GOVERNMENT AGENCIES & BIG TECH AI. The CDC, government agencies, and academic researchers worked directly with Trust and Safety at Twitter to propose word lists to the Data Science team. This team was responsible for monitoring US political misinformation at Twitter. Should The CDC and government agencies have such a large role in the oversight and implementation of the algorithmic work of engineers and data scientists at Twitter? Data Scientists assumed that what they were told to do was accurate because it came directly from trusted experts. Are data scientists being put in a compromising position by government authorities?

A look inside the AI Domestic digital arms race fueled by NLP

Misinformation included real information.

Twitter flagged content as misinformation that was actually real information. Furthermore, their antiquated technology led to tons of false positives. The users suffered the consequences every time that happened.

99 percent of content takedowns start with machine learning

The story of deamplification on Twitter is largely misunderstood. It often focuses on esoteric terms like shadow banning or visibility filtering, but the most important discussion of deamplifcation is on word relationships that exist with NLP. This article reveals the specifics of how entity relationships between words are mapped to internal policy violations and algorithmic thresholds.

Every tweet is scored against that algorithmic system, which then leads to manual or algorithmic content review. The words you use can trigger a threshold in the system, without you ever knowing it. Only a tiny fraction of suspensions and bans actually have to do with the reason most people think. Part of this is because they have little knowledge or insight into the threshold, how it is triggered, which words trigger it, and what content policies are mapped to the alleged violation.

Censoring political opponents by proxy

If you want to censor a political opponent, NLP is the primary tool to use in the digital arms race. Largely still misunderstood by Congress and the media, Twitter was able to deploy NLP in plain sight without anyone noticing. One of the ways they were able to do this was through hiring third party contractors for the execution of the content moderation and data science work.

Therefore, when they told Congress they weren’t censoring political content that was right-leaning, they were technically not lying- because they used proxies to execute the orders.

Technically, the most important Twitter Files of all are not files that Twitter owns. The IP is scattered all over the world, and is in the hands of outsourced contractors in data science and manual content review. Who are these people and what are they doing with our data? Does anyone even know? No.

The term employee and contractor were used interchangeably when referring to this work. Contractors were used by Twitter as employees, but were not actually paid as employees, and were not given severance. That is a story for another investigation. But the part of this that people don’t understand is that the third-party agencies who hired those contractors to execute political censorship often had deep rooted political ties.

One of the third-party agencies Twitter worked with has a PAC, and frequently lobbies Congress for specific areas of interest pertaining to immigration. It is also worth noting that ICE was hard coded into the detection list, by one of the contractors the agency hired who frequently lobbies Congress for immigration reform.

So technically, Twitter is not doing it- the employee of the contractor is doing it. These are not neutral parties, and the contracting companies serve as proxies. Some of those companies essentially have shell corps, thus hiding the entire paper trail of the actual execution of the censorship.

If the feds ever decide to go knock down Twitter’s door to find evidence of political bias in their machine learning models, they won’t find it. Why? Because that evidence is scattered all over the world in the hands of third-party contractors- thus moving all liability on to those third parties, who did not even realize how they were being used as pawns in all of this until it was too late.

These were not people who went rogue or had nefarious intentions. They did not go off track. They did what they were told by the people who hired them and in alignment with the expert guidance of the academic researchers they trusted. They were not sitting there thinking about how to tip the political scales- but then again- they didn’t have to. They were told what to do and believed in the mission. This part is critical to understanding the full scope of the story.

The most important files won’t come from Twitter headquarters, they will come from contractors who had employment agreements with outsourced staffing agencies. Silicon Valley loves to disregard this poor behavior by saying this is how it’s done here. Well, maybe it should not be done that way anymore.

Their reckless regard for employment law has real world consequences. In an attempt to try to get away with not paying severance or benefits, the public ends up paying the real price for their arrogance because they are aiding and abetting the crime of free speech by making it infinitely harder for the government to track down evidence.

Twitter was complicit in a crime.

But the fact that they were complicit does not mean that they actually committed the crime. Instead, they used innocent parties (victims in my opinion) to commit the crimes for them. This is a clear and gross abuse of power at the highest level and a complete and reckless regard for the truth and system of justice.

The data shows clear evidence that Twitter deployed the AI to match the dialectic of only one way of talking about key political issues. The data also shows clear evidence that political misinformation does not exist in the dataset on the left. The entire category of political misinformation consisted of solely right-leaning terms. This is cause for concern and further congressional investigation.

At some point, Congress will be forced to compel further discovery to obtain the training manual used for Machine Learning at Twitter, which will contain the list of connected words and underlying vocabulary that tells the larger story of the dataset shared in this article. Those documents are critical to see the end-to-end process of how this was deployed.

Who creates the Machine Learning & AI tools used for active social media censorship?

Many of the tools are VC backed or funded with government grants under the guise of academic research. But the academic research is not subtle. The “suggestions” they make are actual policy enforcement disguised as recommendations. Make no mistake, these are not suggestions- they are decisions that have already been made.

I have said for years that whoever controls the algorithm controls the narrative. But we have reached a new part of this story- where it is on the verge of taking a very dangerous turn.

Whoever controls the model controls the narrative.

Ultimately, the one who can fine tune a model to their political preference will be able to tip the political scale in such a way that 99 percent of people will be oblivious to it- as evidenced by the publication of this article.

You could literally give the public everything they have ever asked for on a silver platter regarding the underlying technology that was deployed to censor political content on one of the largest social media platforms in the world- and yet- no one really cares. People don’t care about what they don’t understand. And that in itself is another issue with all of this.

The knowledge gap is growing wider by the day. Conservatives aren’t doing themselves any favors either by not even attempting to comprehend what is being said in this reporting.

This week, several former Twitter employees will take the stage for a congressional hearing. How many people leading that hearing will grill them on NLP? My guess? None.

What legal framework was used to deploy this system of social media censorship? Circumvention.

Third party agencies were essentially used to potentially violate the first amendment. How? Twitter used a corporate structure of outsourcing the most critically sensitive data science work – this enabled the company to get by without technically breaking the law. The corporation worked with several LLCs who then created the rules.

So, technically Twitter didn’t officially receive any instructions from the government. The LLC did. The same LLCs who were also tied to PACs and lobbied Congress. Twitter received the word list instructions from academic groups- who were funded with government grants. This story shows the deep corruption of Americas institutions at every level. Twitter used third parties to circumvent free speech laws. They were able to dismantle and censor free speech on the platform through natural language processing.

The intelligence community is working not only through academia, but also through shell corporations. All of this leads to a larger dystopian picture that big tech believes they are above reproach in society. That, fueled with the fact that VCs are also complicit in funding some of the censorship technology that is used to fight the very thing they claim to stand against- makes this story even worse.

The systems would degrade over time and nobody would ever know what they had done. The core systems used for visibility filtering to both train and manage machine learning models were often on the laptops of random third-party contractors that they alone had access to- but the company did not.

All of this has also been stated on record in an official whistleblower reporter.

My experience and first party reporting can confirm this to be true- which leads to serious data privacy concerns. It is not a Twitter employee that you should be worried about reading your data- rather- it is the thousands of contractors all over the world that still have this data that people need to be most concerned about.

EXCLUSIVE INTERVIEW WITH FORMER TWITTER EMPLOYEE ON AI/ML:

ML & AI: The most important levers of power and communication in The United States

DAU OR MAO?

Kristen Ruby, CEO of Ruby Media Group. interviewed a former Twitter employee on machine learning, artificial intelligence, content moderation and the future of Twitter.

What is the role of AI in mediating the modern social media landscape?

“People don’t really understand how AI/ML works. Social media is an outrage machine. It’s driven by clicks, and I think Twitter is/was vulnerable to this. On the one hand, when Trump was on the platform, he was all anyone could talk about. Love him or hate him, he drove engagement. On the other hand, Twitter was working to suppress a lot of what it saw as hate speech and misinformation, which could be seen by an outside party as bias.

Internally, we did not see this as bias. So much of social media is run by algorithms, and it is the ultimate arbitrator and mediator of speech. Public literacy focused on ML/AI must increase for people to understand what is really going on. There has to be a way to explain these ideas in a non-technical way. We all kind of get algorithmic and implicit bias at this point, but it’s a black box to non-technical people. So, you have people making wild assumptions about what old Twitter did instead of seeing the truth.”

How does Twitter use Machine Learning?

“In non-technical basic terms, Artificial Intelligence (AI) is the ability of a computer to emulate human thought and perform real-world tasks. Machine Learning (ML) is the technologies and algorithms that let systems identify patterns, make decisions, and improvements based on data. ML is a subsection of AI. The Twitter dataset that I have shared pertains to natural language processing, which is a form of AI.

I wish people would stop getting caught up in the semantic distinction between AI and ML. People seem to treat AI as a more advanced version of machine learning, when that’s not true. AI is the ability for machines to emulate human thinking. ML is the specific algorithm that accomplishes this. ML is a subset of AI. All machine learning is AI. AI is broader than Machine Learning.”

Can you share more about Twitter’s use of Natural Language Programming (NLP)?

“NLP is one of the most complicated processes in Machine Learning, second only to Computer Vision. Context is key. What is the intent? So, we generate features from a mixture of working with Trust and Safety and our own machine-learning processes. Using a technique called self-attention, we can help understand context. This helps generate these labels. We do in fact vectorize the tokens seen here. NLP splits sentences into tokens- in our case- words.

As stated above, the key in NLP is context. The difficulty is finding context in these short messages. A review or paper is much easier because there is much more data. So, how does it work? You have a large dataset you’ve already scored from 0-300. No misinformation to max misinformation.

Step 1: Split the tweet into n-grams (phrases of between 1 to whatever n is. In our case, usually 3 was max.

By this, I am referring to the number of words in the phrase you’re using. An n-gram could be 1 word, 2 words, or 3 words. There’s no real limit, but we set it at 3, due to the extremely small number of characters in a tweet.

Take that and measure accuracy. We searched for terms ourselves by seeing what new words or phrases were surfacing, and we also accepted inputs from outside groups to train our models.

Step 1. The tokenization and creation of n-grams.

Step 2. Remove stop words. These are commonly used words that don’t hold value -of, the, and, on, etc.

Step 3. Stemming, which removes suffixes like “ing,” “ly,” “s” by a simple rule-based approach. For example: “Entitling” or “Entitled” become “Entitl.” Not a grammatical word in English. Some NLP uses lemmatization, which checks a dictionary, we didn’t.

Step 4. Vectorization. In this step, we give each word a value based on how frequently it appears. We used a process called TF-IDF which counts the frequency of the n-gram in the tweet compared to how frequently it occurred across other tweets.

There is a lot of nuance here, but this is a basic outline. The tweet would be ranked with a score based on how much misinformation we calculated it had above.

When we trained our models, we would get a test dataset of pre-scored or ranked tweets and hide the score from the algorithm and have it try to classify them. We could see how good of a job they did and that was the accuracy – if they guessed a lot of them correctly.

We used both manual and auto. I don’t have the weights. That was calculated in vector analysis.”

What does the full process look like from start to finish?

“Here is what the cycle looks like:

We work with Trust and Safety to understand what terms to search for.

We build our ML models. This includes testing and training datasets of previously classified tweets we found to be violations. The models are built using NLP algorithms.

We break the words into n-grams, we lemmatize, we tokenize.

Then we deploy another model, for instance XG Boost or SVM to measure precision. We used precision because it’s an unbalanced dataset.

Then once we have a properly tuned model – we deploy through AWS SageMaker and track performance. The political misinfo tweets I showed you – the ones with specific tweets and the detections came out of me writing a program in a Jupiter notebook to test tweets we had already classified as violations according to our model score.

These models had all been run through SageMaker. Our score was from 150-300. The idea was that we were going to send these to human reviewers (we called them H COMP or agents) to help and tell us how they felt our model performed.”

Test or control?

“No. The misinfo tweets were already live. All of this was live.”

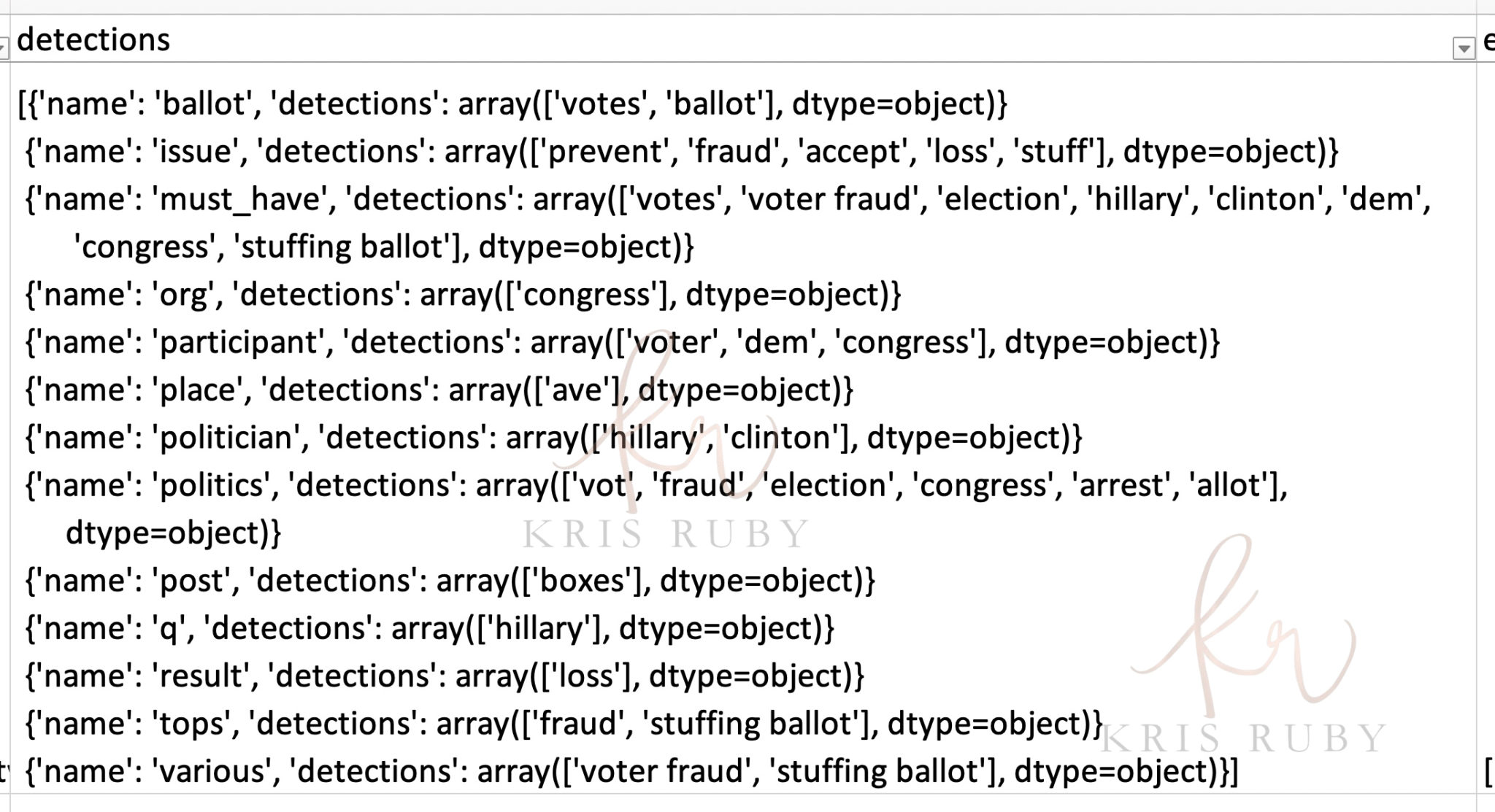

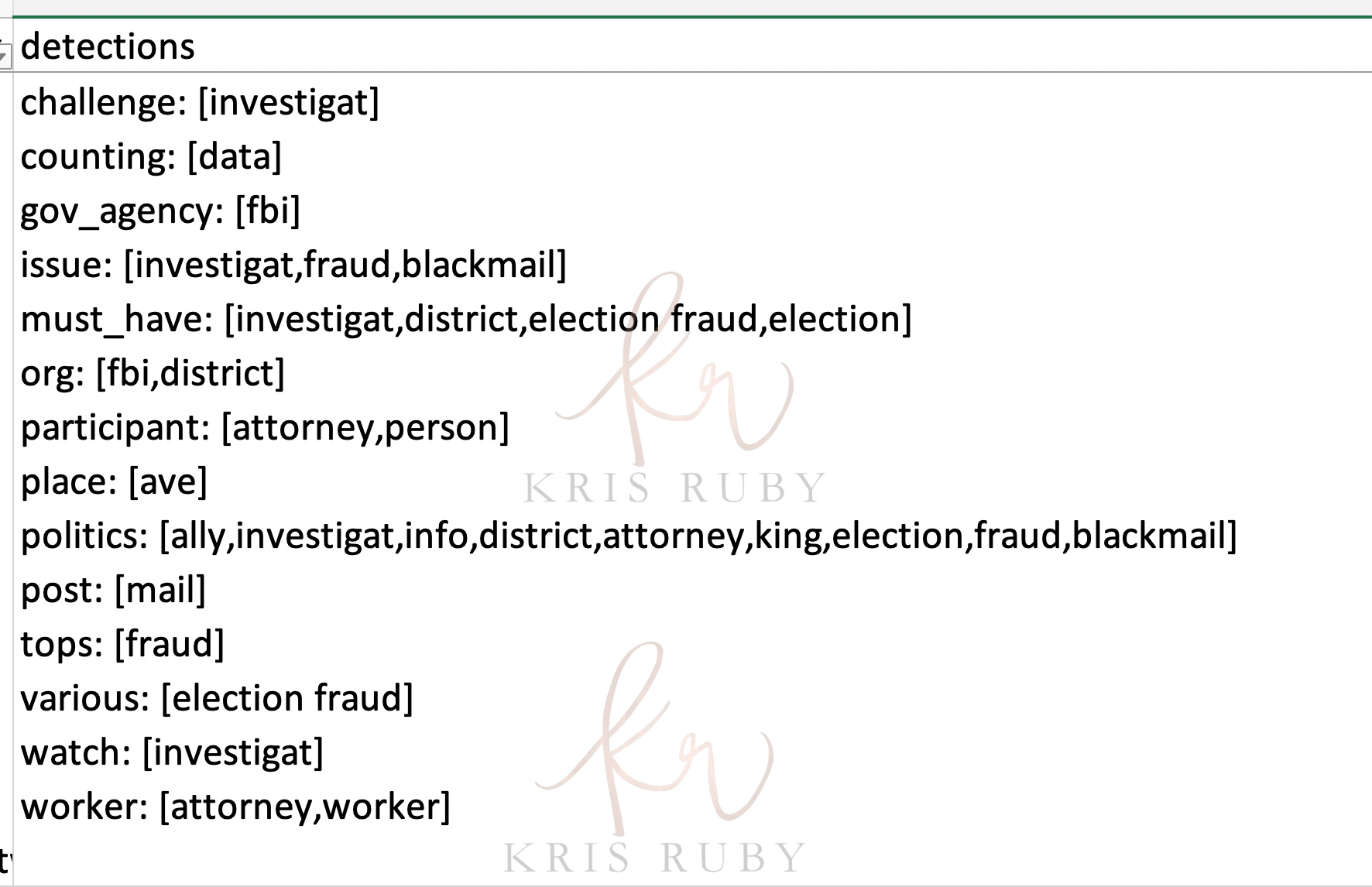

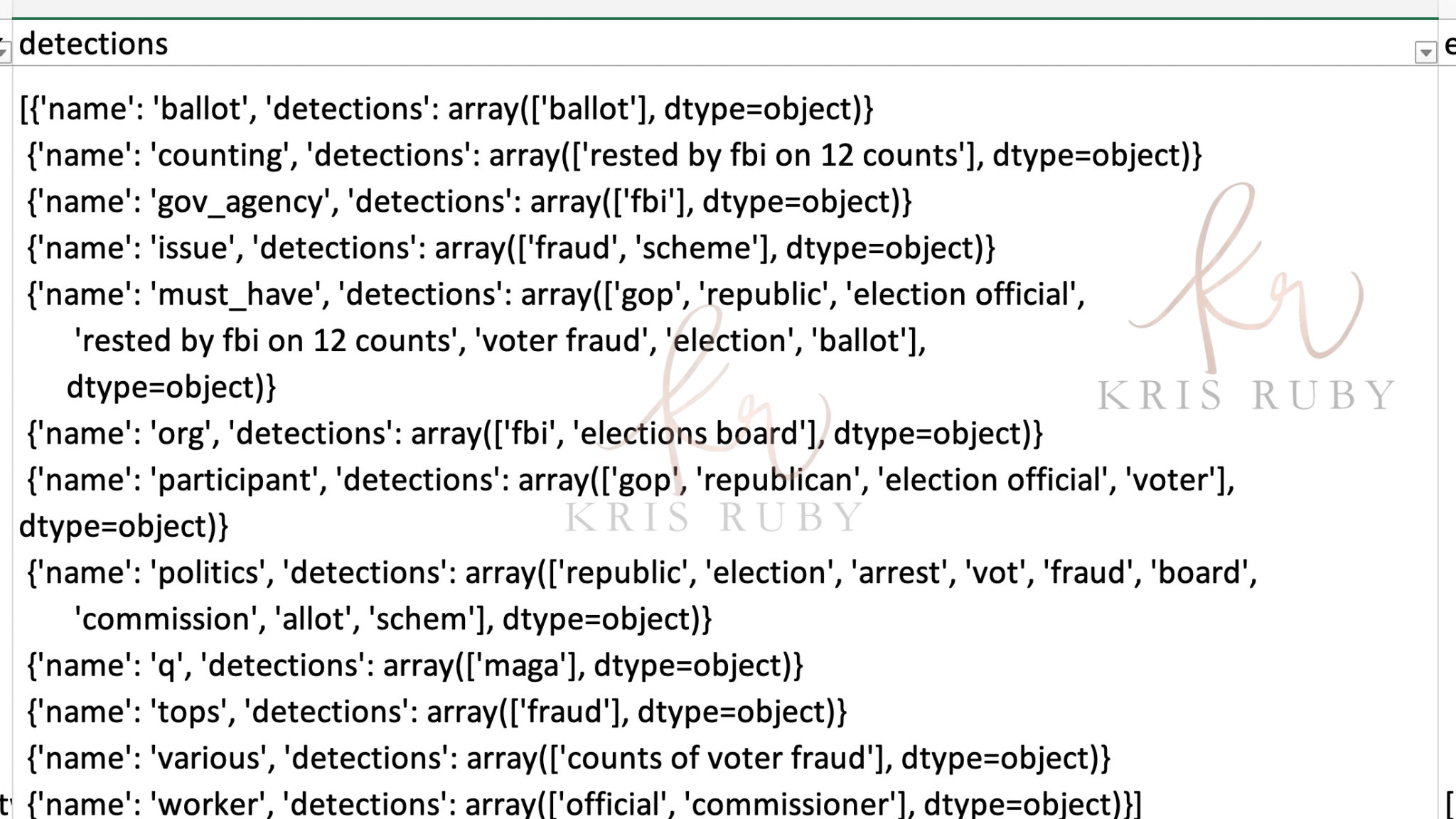

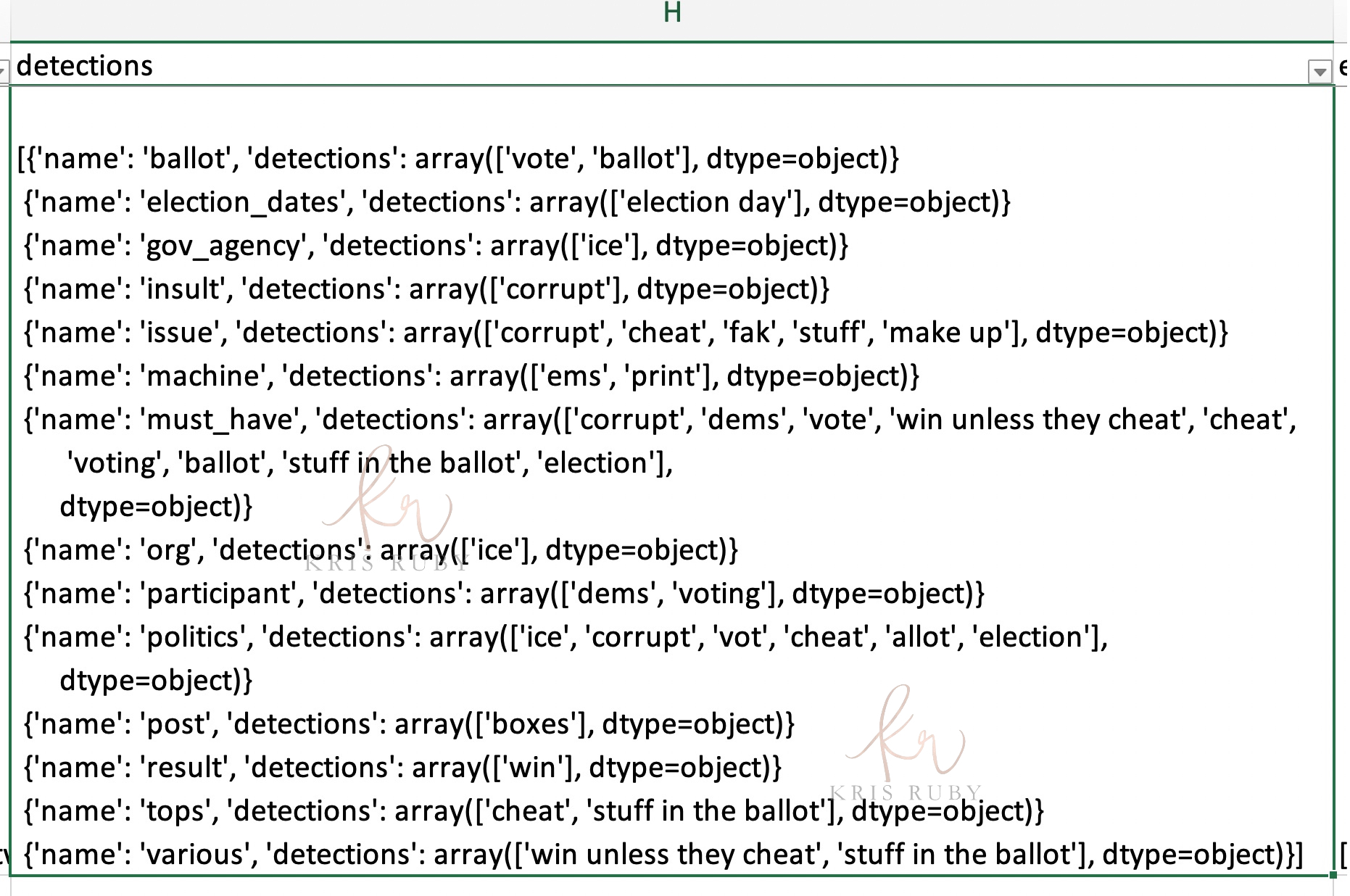

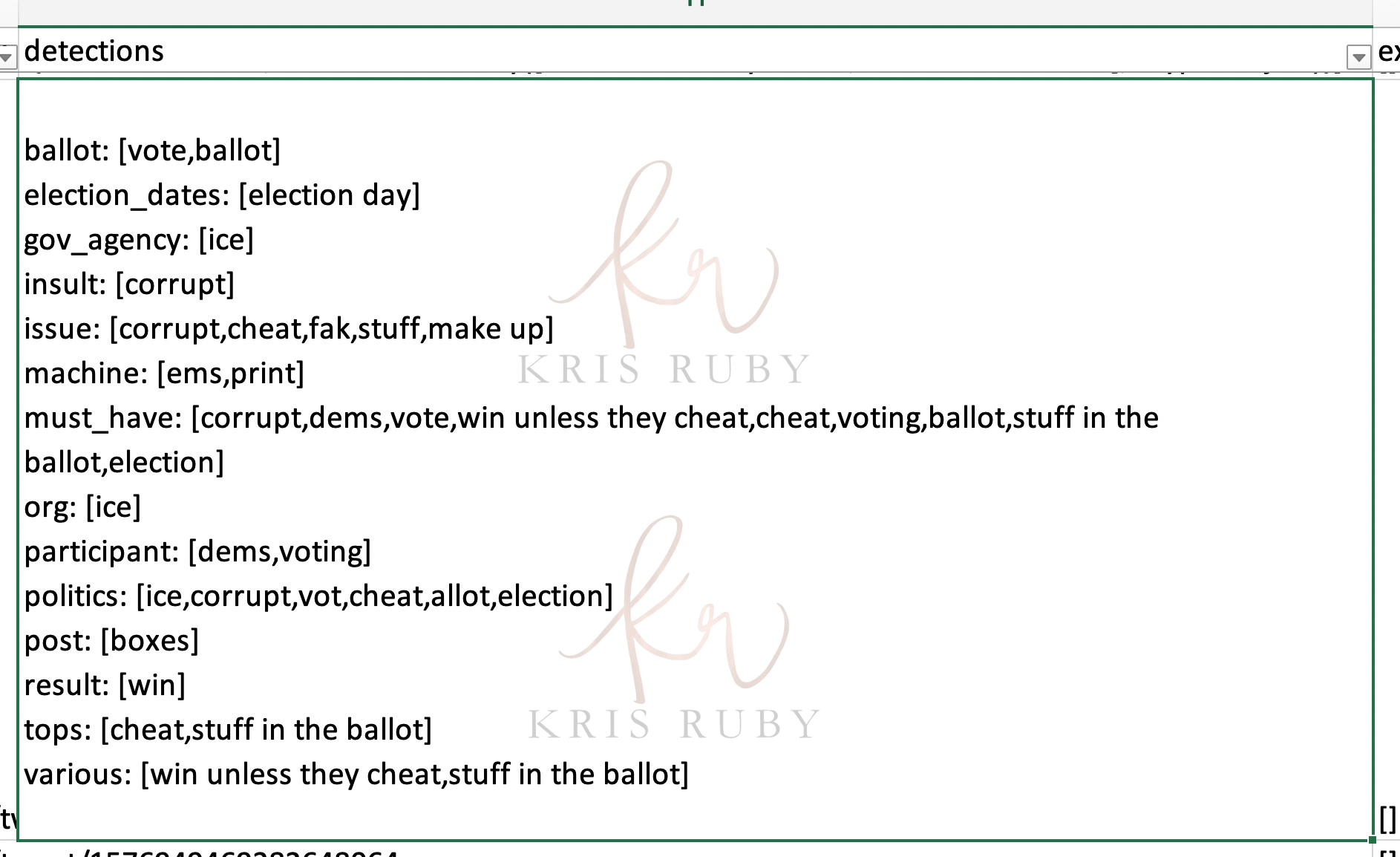

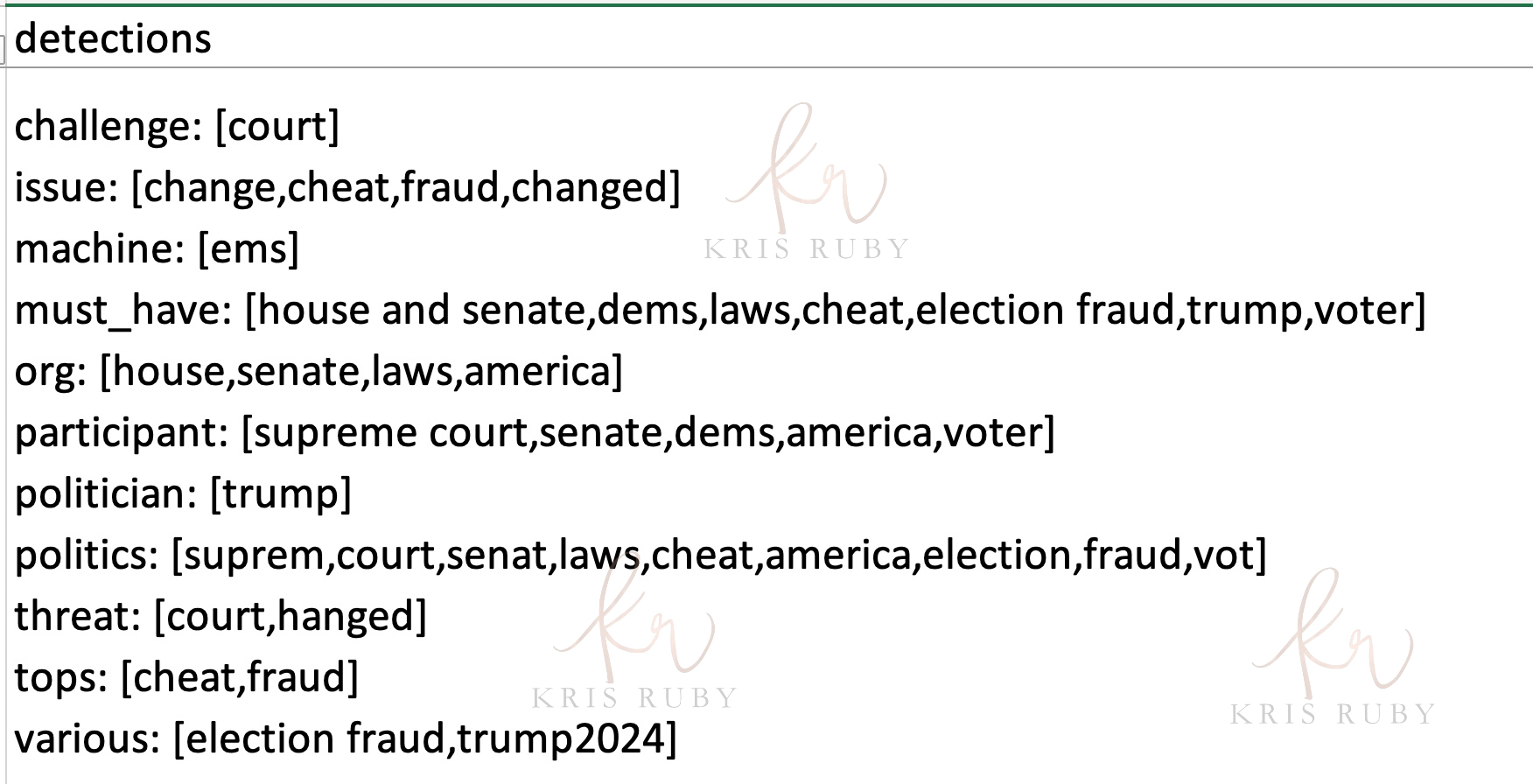

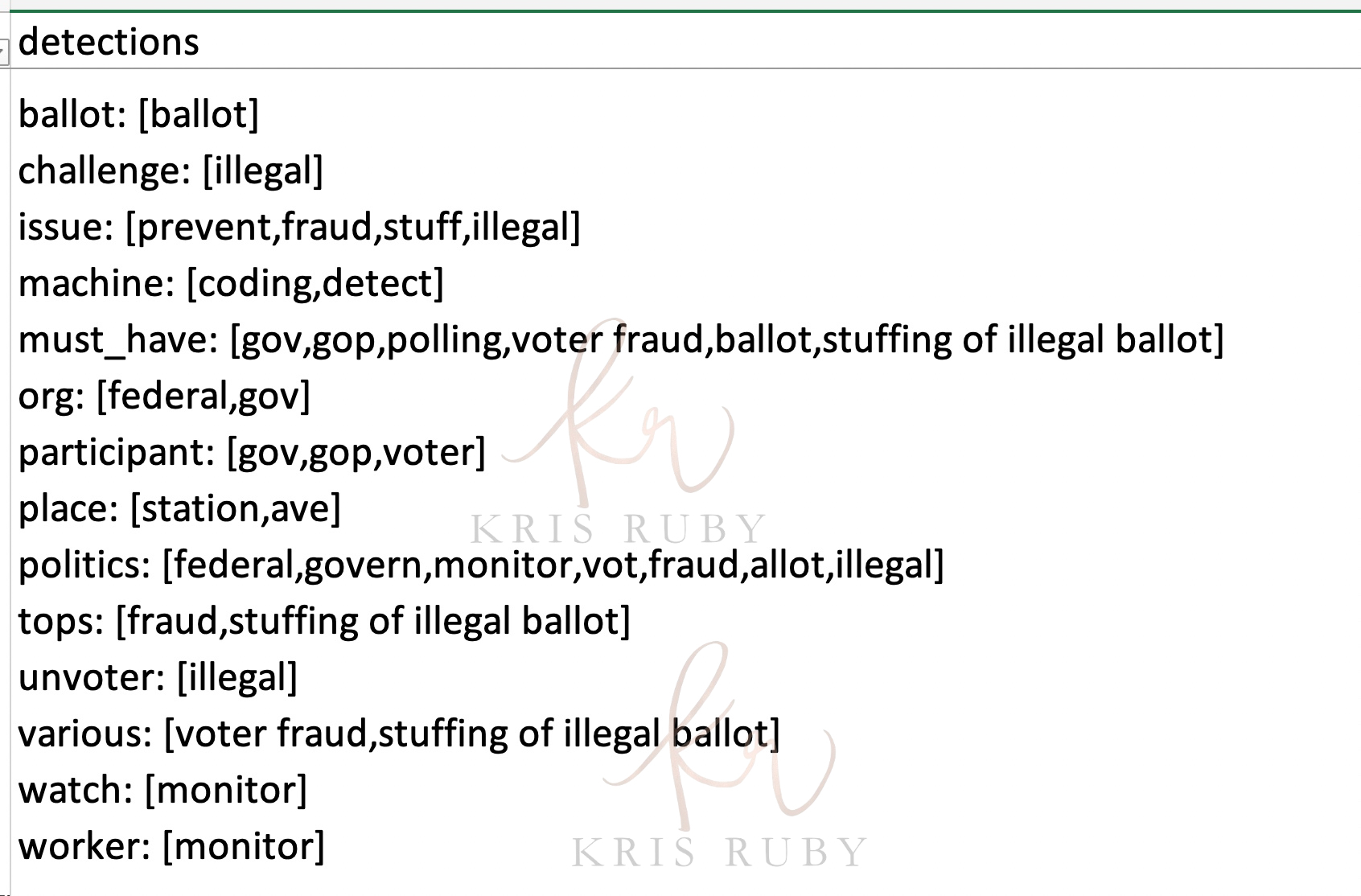

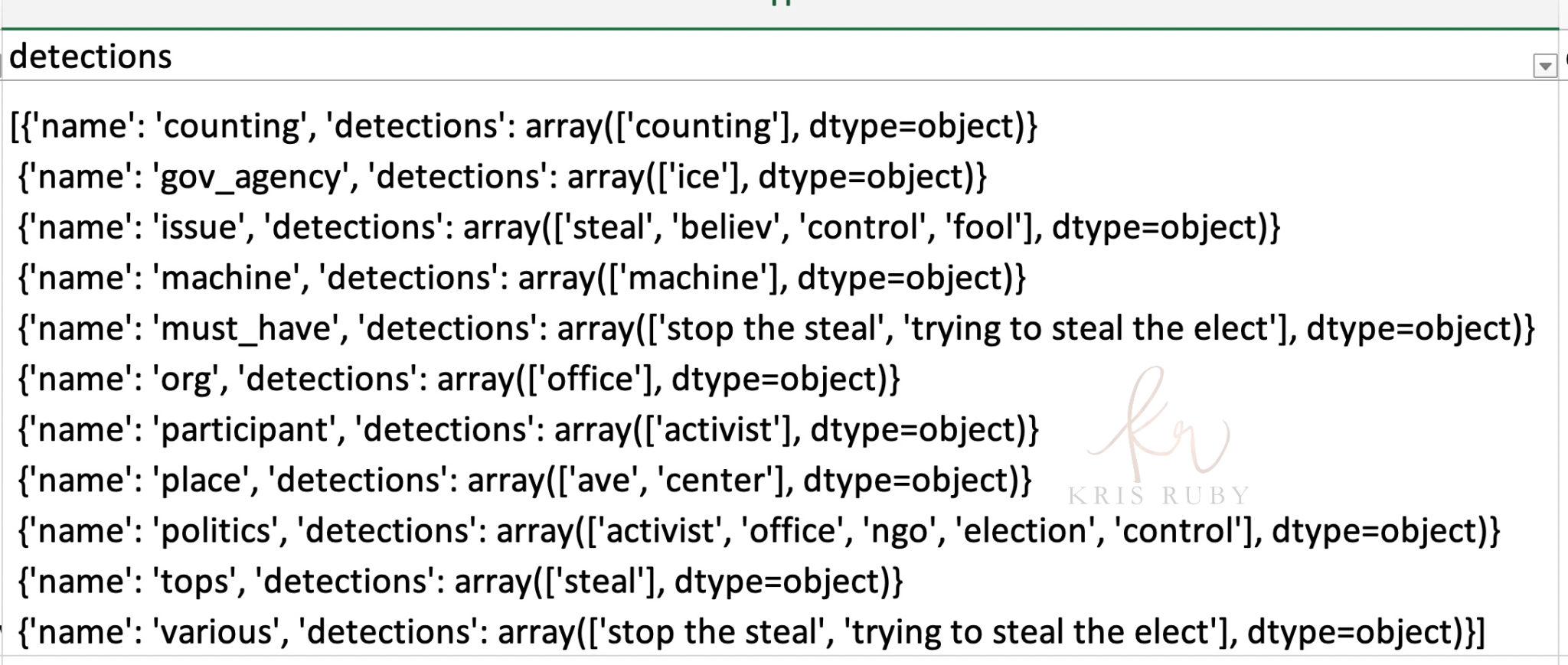

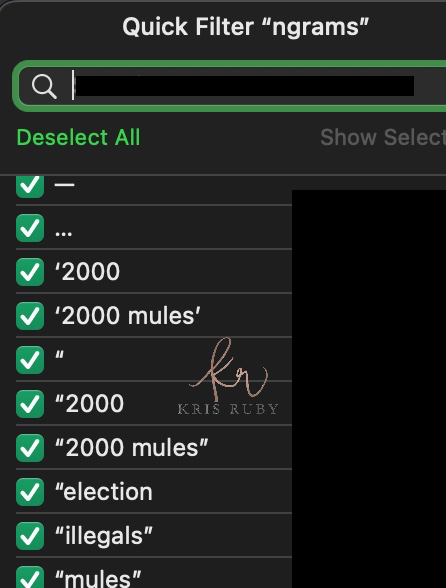

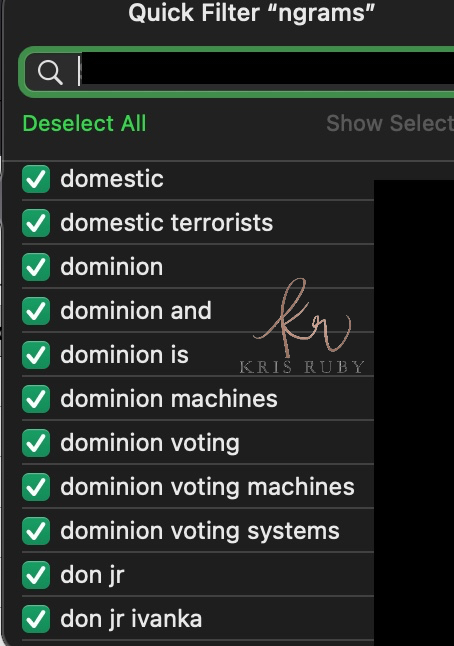

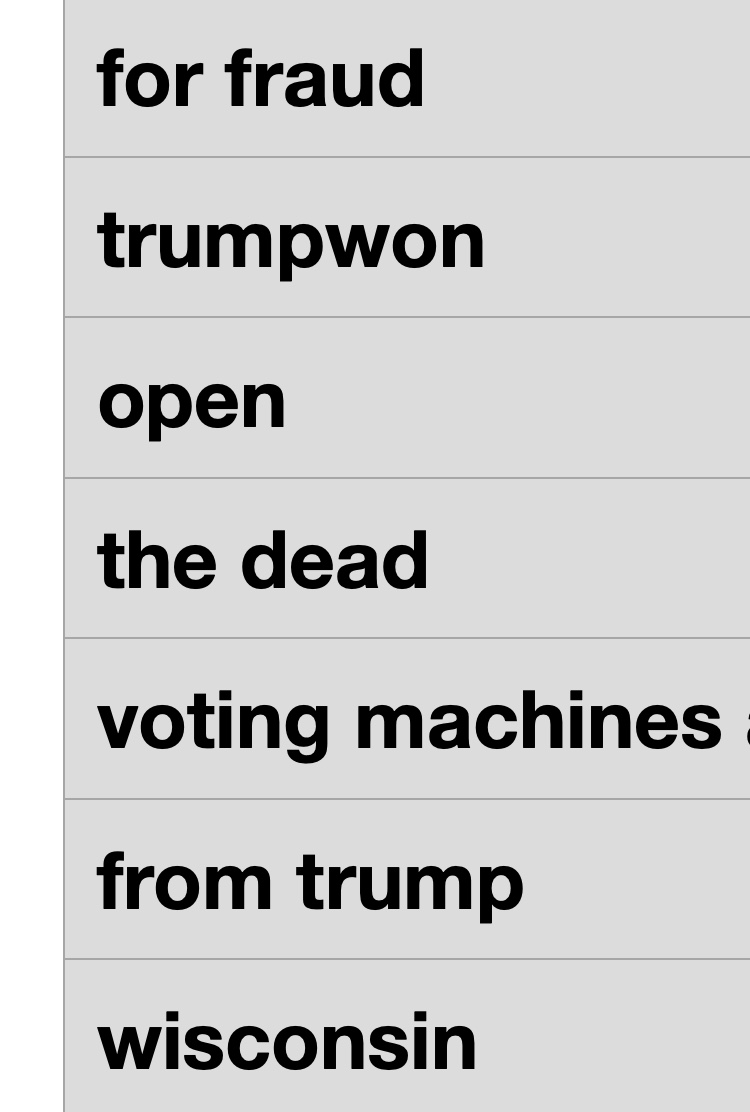

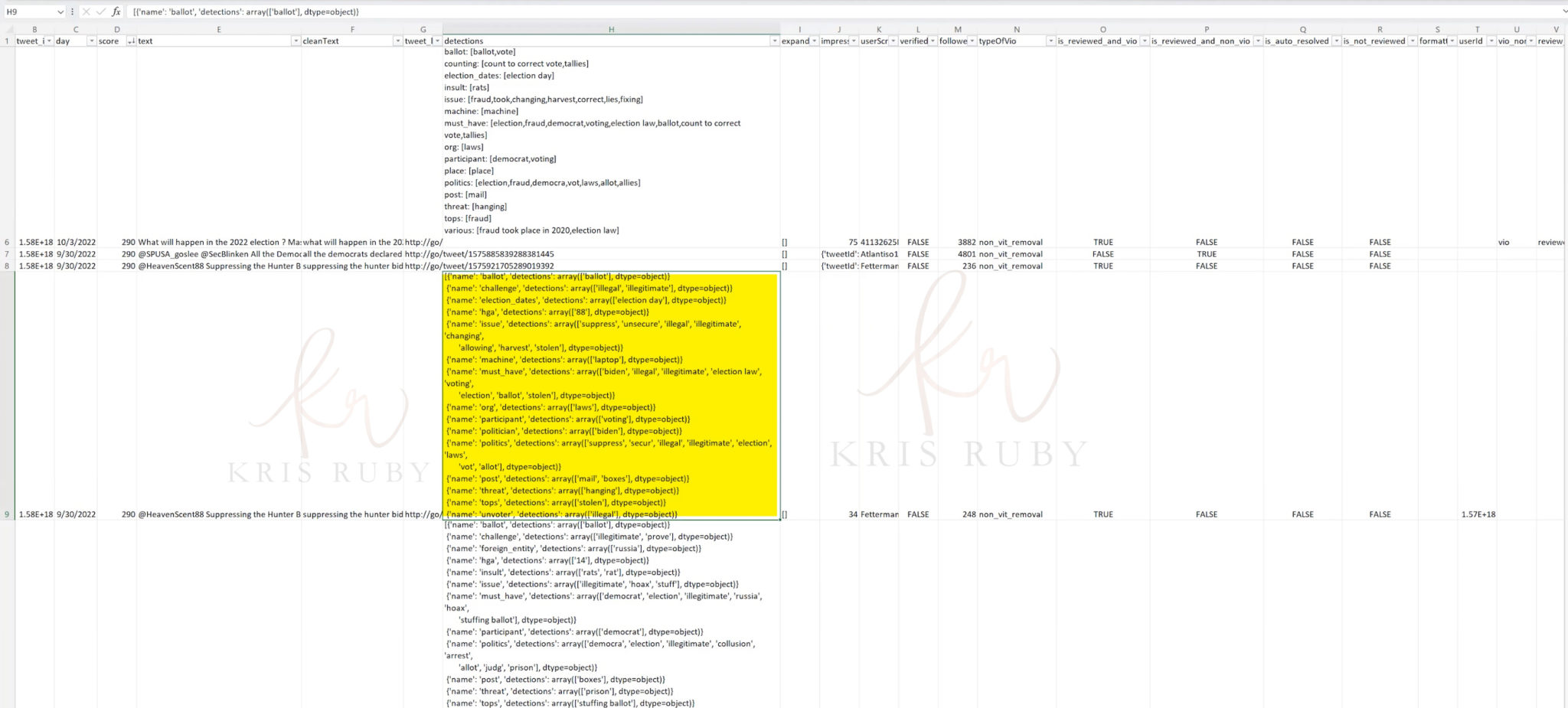

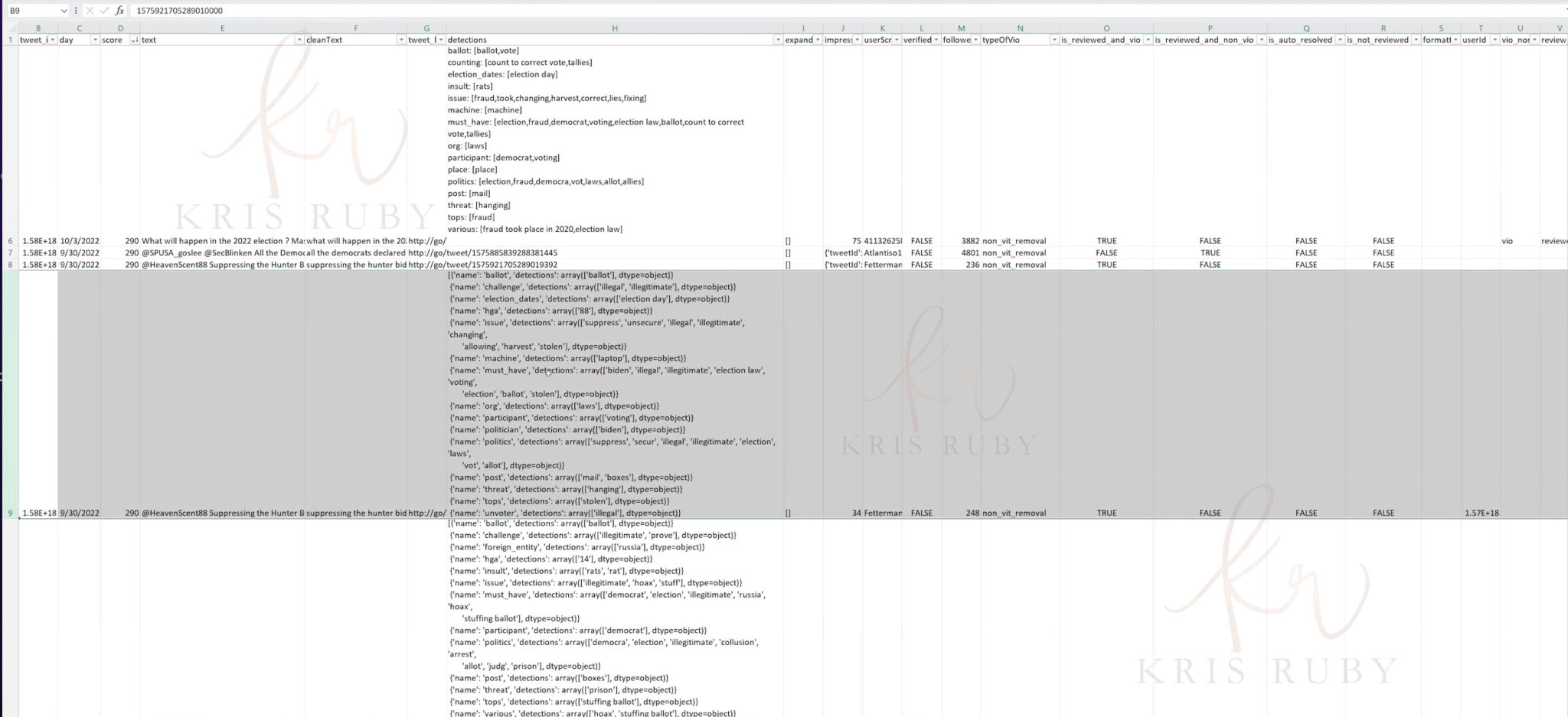

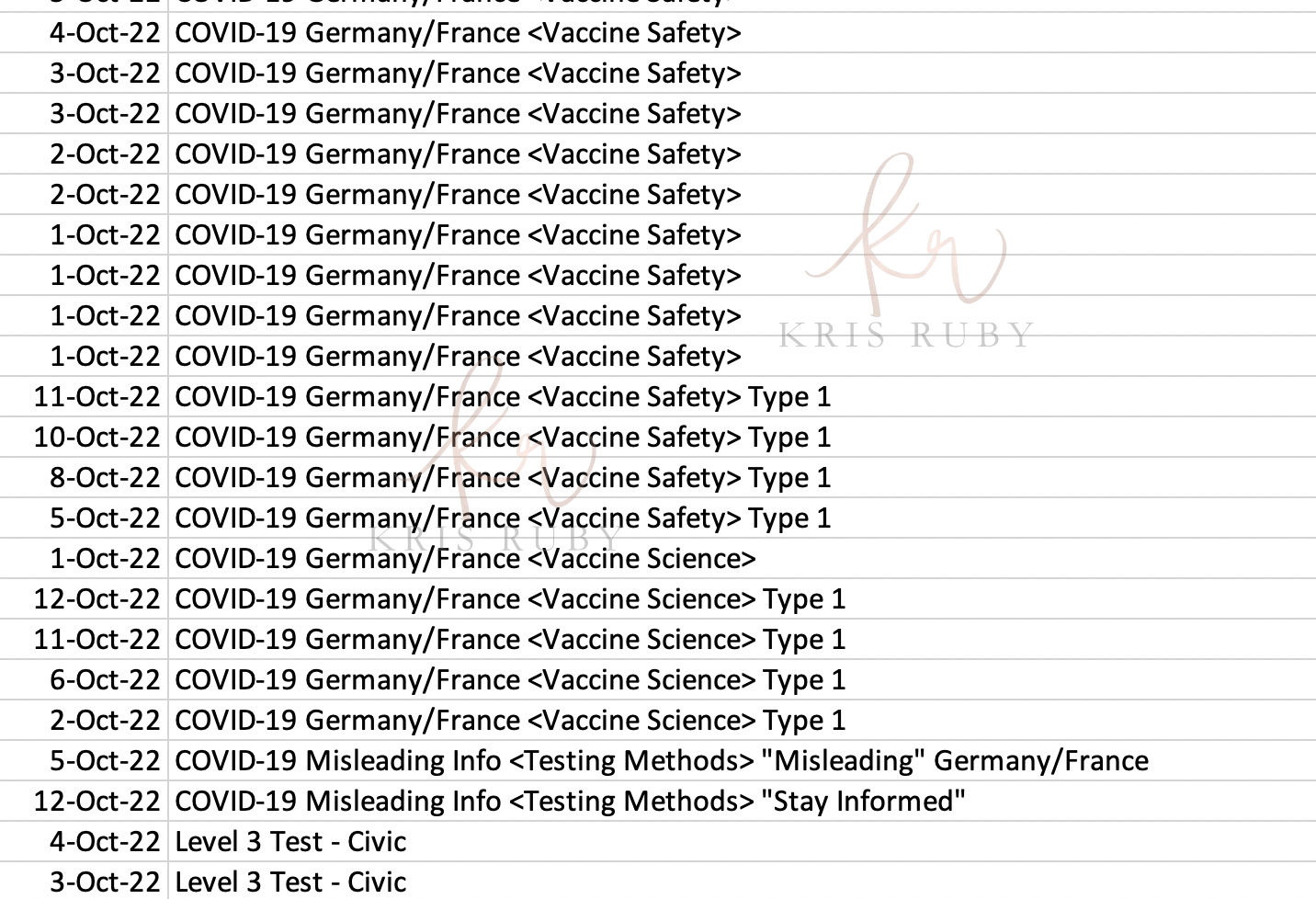

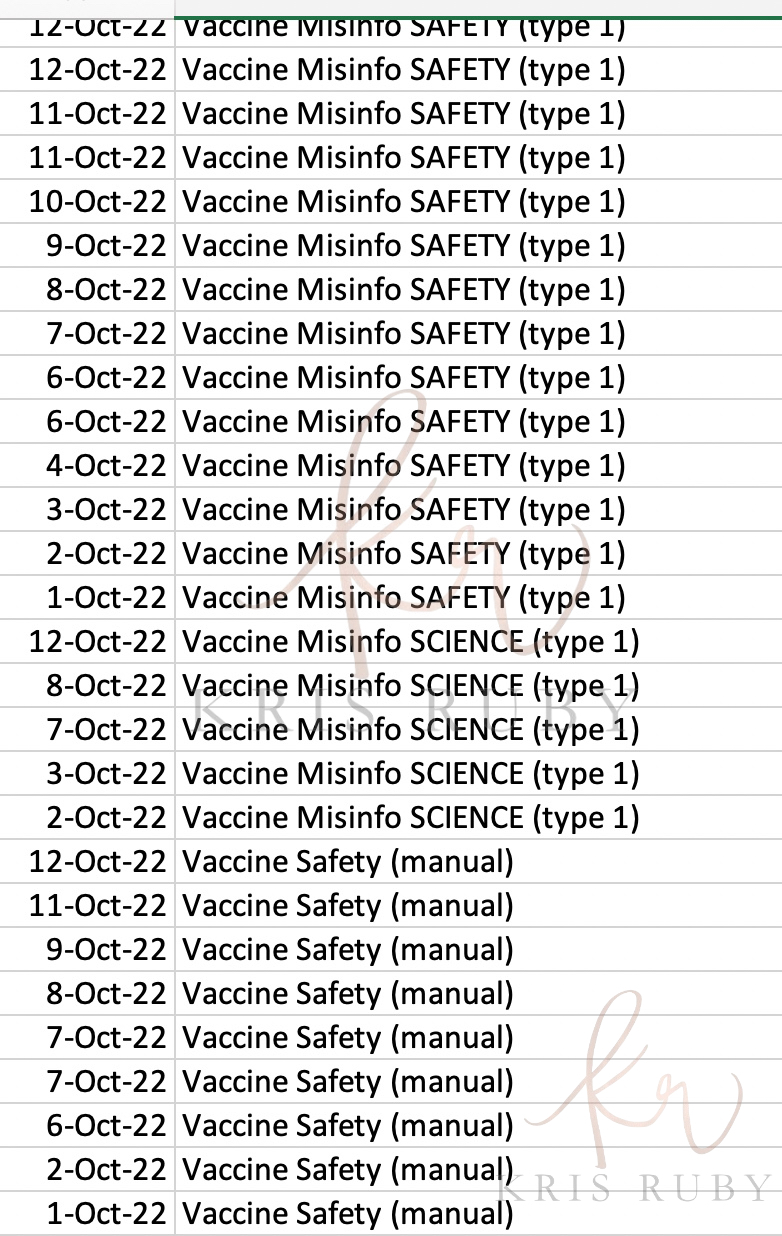

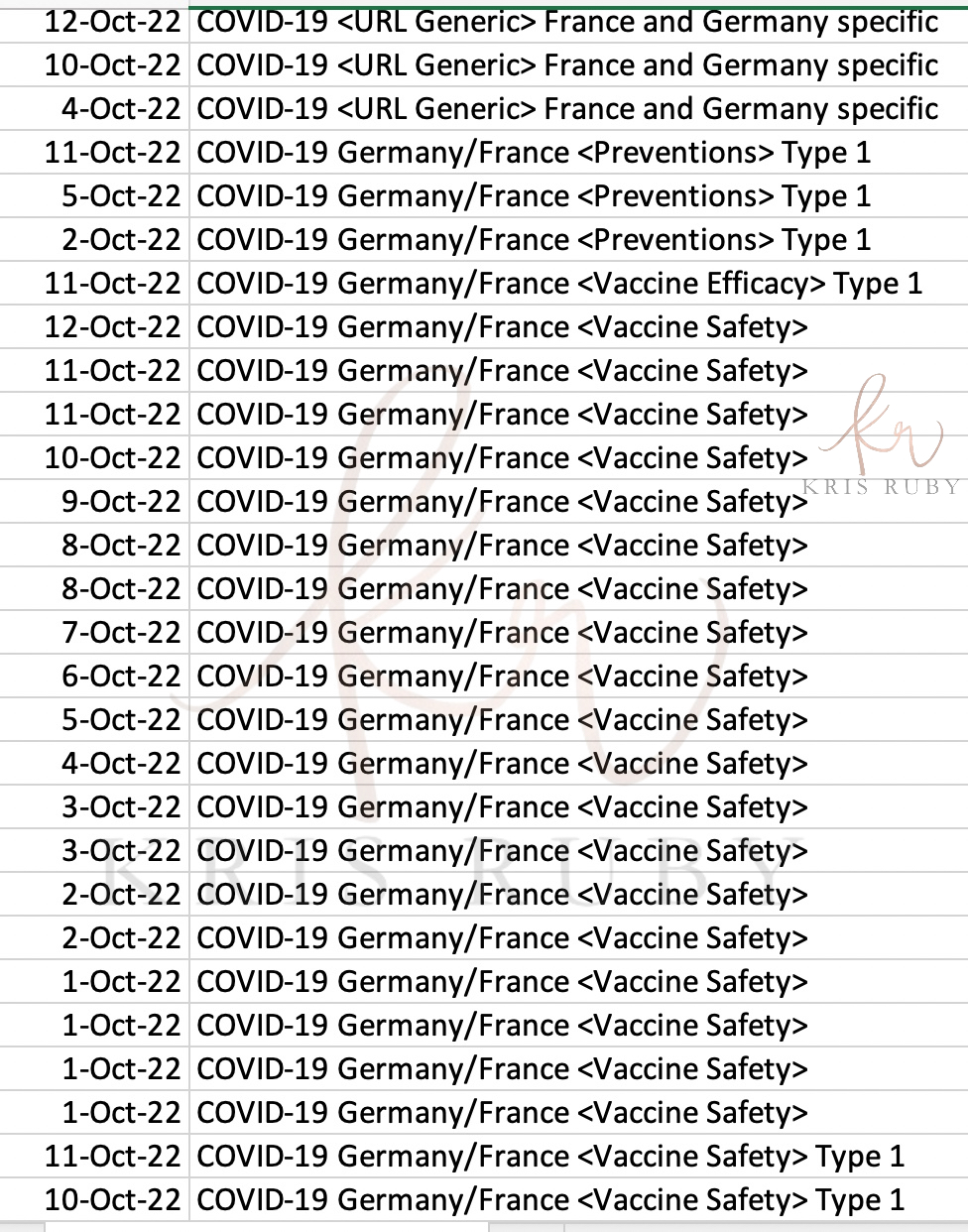

*The following screenshots will reveal the type of content Twitter was monitoring for political misinformation. The file included dates, language and annotation.

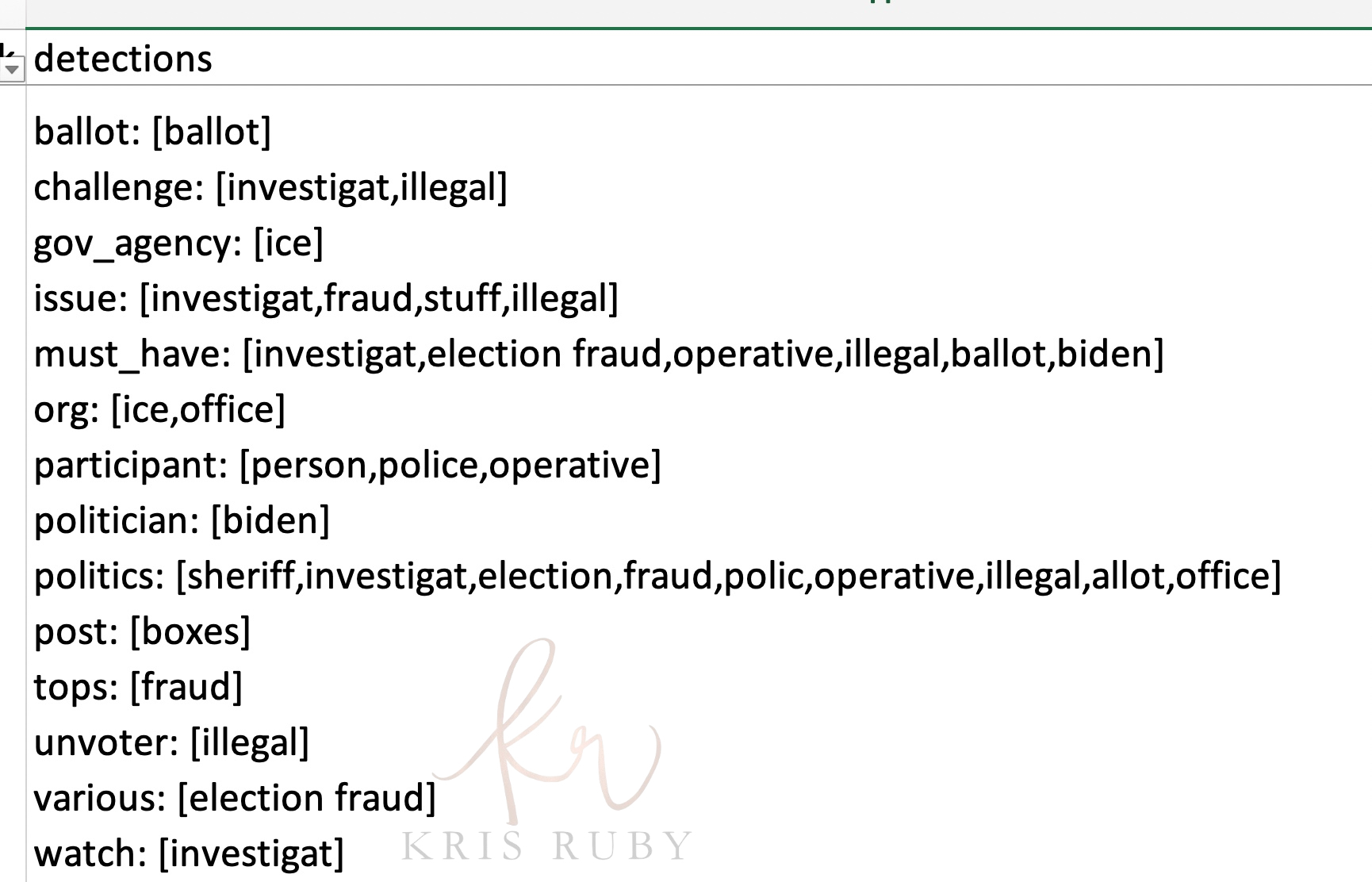

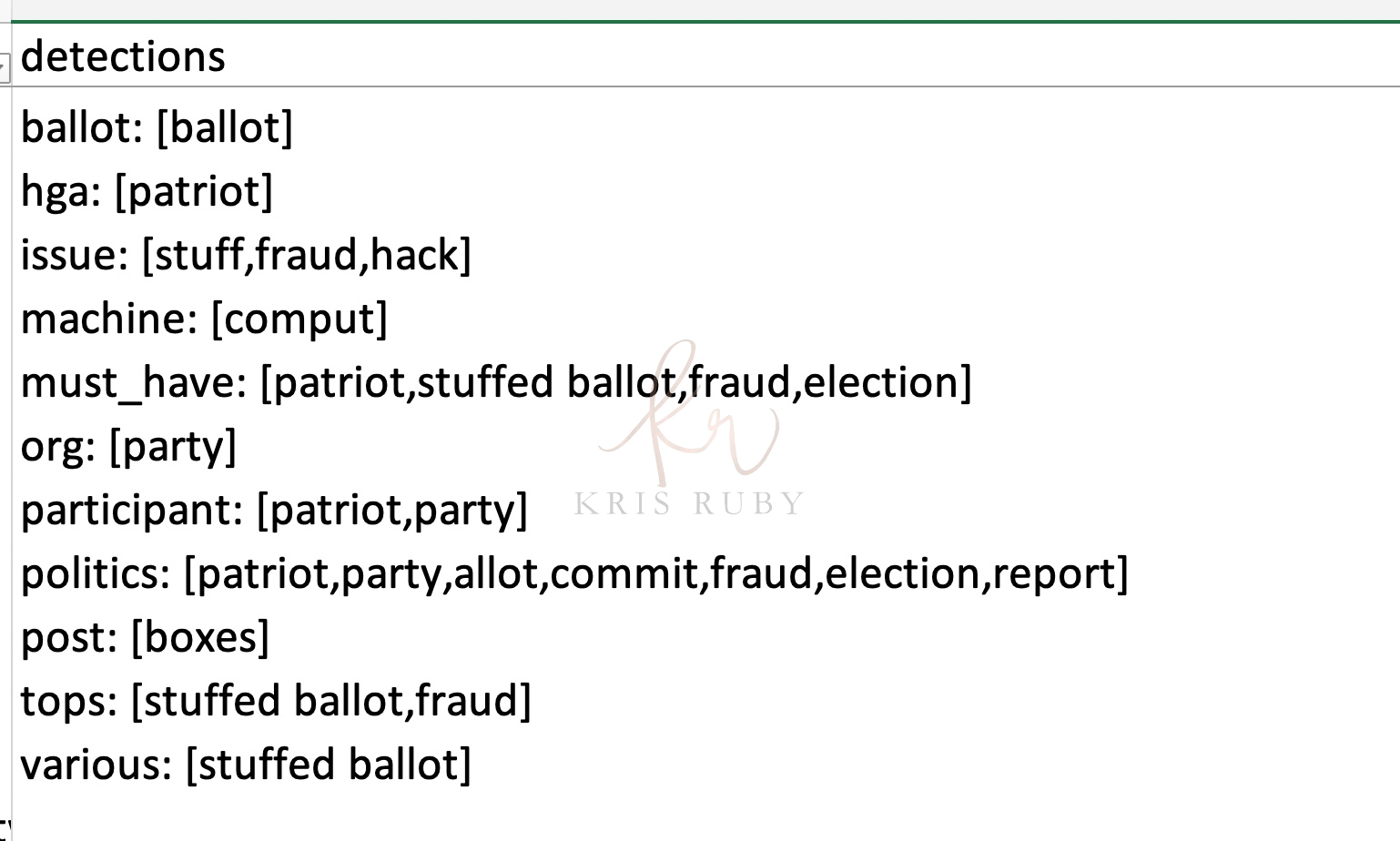

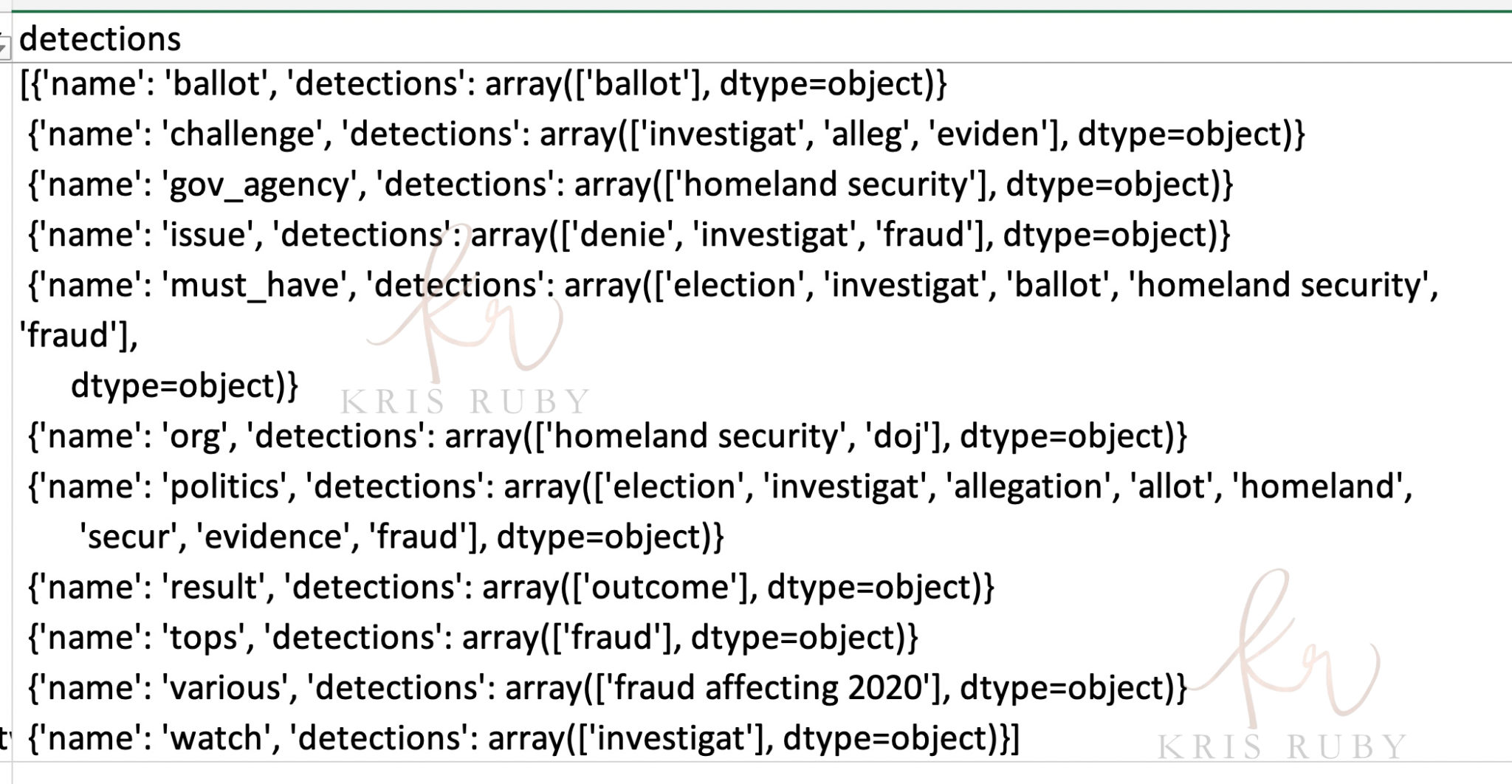

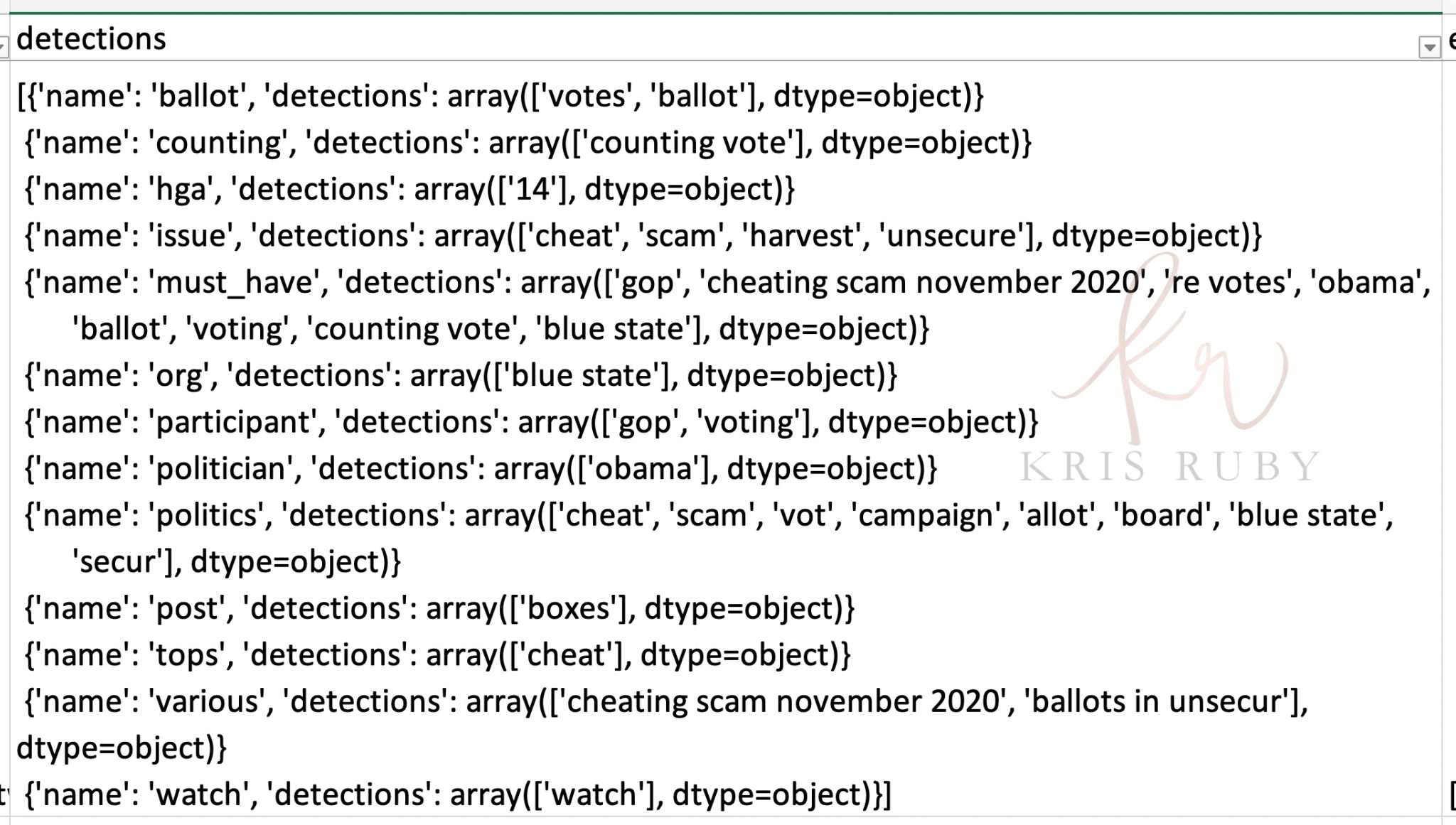

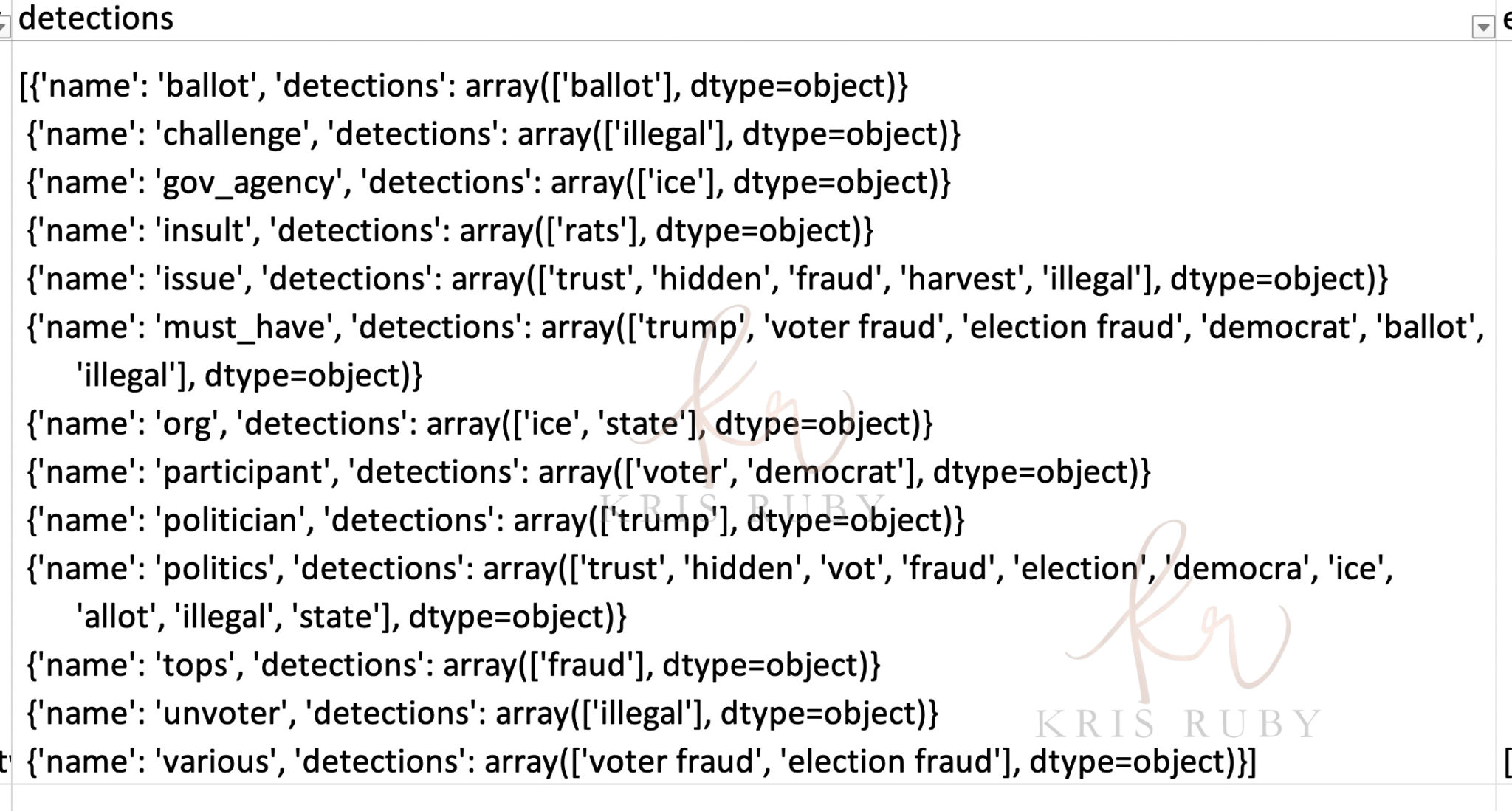

Pictured: “Detections show what we were looking for.”

Caption: “We looked for terms. If there were enough of them your tweet could get flagged.”

What are we looking at? Is this a dump of rule inputs?

“Yes. This is one step in the process that shows just the inputs.”

Does this data show the full process?

“This dataset is only 1/10 of 1% of what’s going on here. The data shows you some of the things we were looking for at Twitter. I don’t claim this data is going to provide all the answers. It’s not- it provides only a partial view for only a few weeks in English, in U.S. politics. However, people may appreciate seeing information that is unfiltered. The public is subject to manipulation. This is data right from an algorithm. It’s messy and hard to understand.

Can you share more about the database access process at Twitter?

“I think it was a way to keep information segregated. To get access to a database, you had to submit a request. Requests were for LDAP access- just directory access. Once granted, you had access to all tables in that database. So maybe some of the engineering database or whatever had thousands of tables. But I built some of these and for highly specialized purposes. The schema would be like ‘Twitter-BQ-Political-Misinfo.’

It was fairly specialized- not for production so to speak. There were two layers of protection. The actions of the agents- human reviewers- were logged and periodically audited. Those are the people looking at those screenshots on PV2. That data went into a data table. It looks like a spreadsheet.

Access to that table was strictly limited in the following way. If you needed access for your job, you submitted a request. It needed to be signed off by several layers. However, once you had access, you had it. And that access wasn’t really monitored.

Here is how it worked:

We had some words and phrases we were looking for. These were developed in consultation with lots of people from government agencies, human rights groups, and academic researchers. But government itself can be a big source of misinformation, right? Our policy people, led by Yoel Roth, among others, helped figure out terms. But we weren’t banning outright.

Machine Learning is just statistics. It makes best guesses for unknown data (abuse, political misinformation) based on what it has seen before.

First, what did we annotate?

Here is a list of items we marked. It is global so I think interesting. The next file called political misinfo contains the actual tweets (only for the US election.) All of the tweets here were scored above 150, meaning they were likely misinformation. There is a field if a human reviewer decided if they were misinformation or not.”

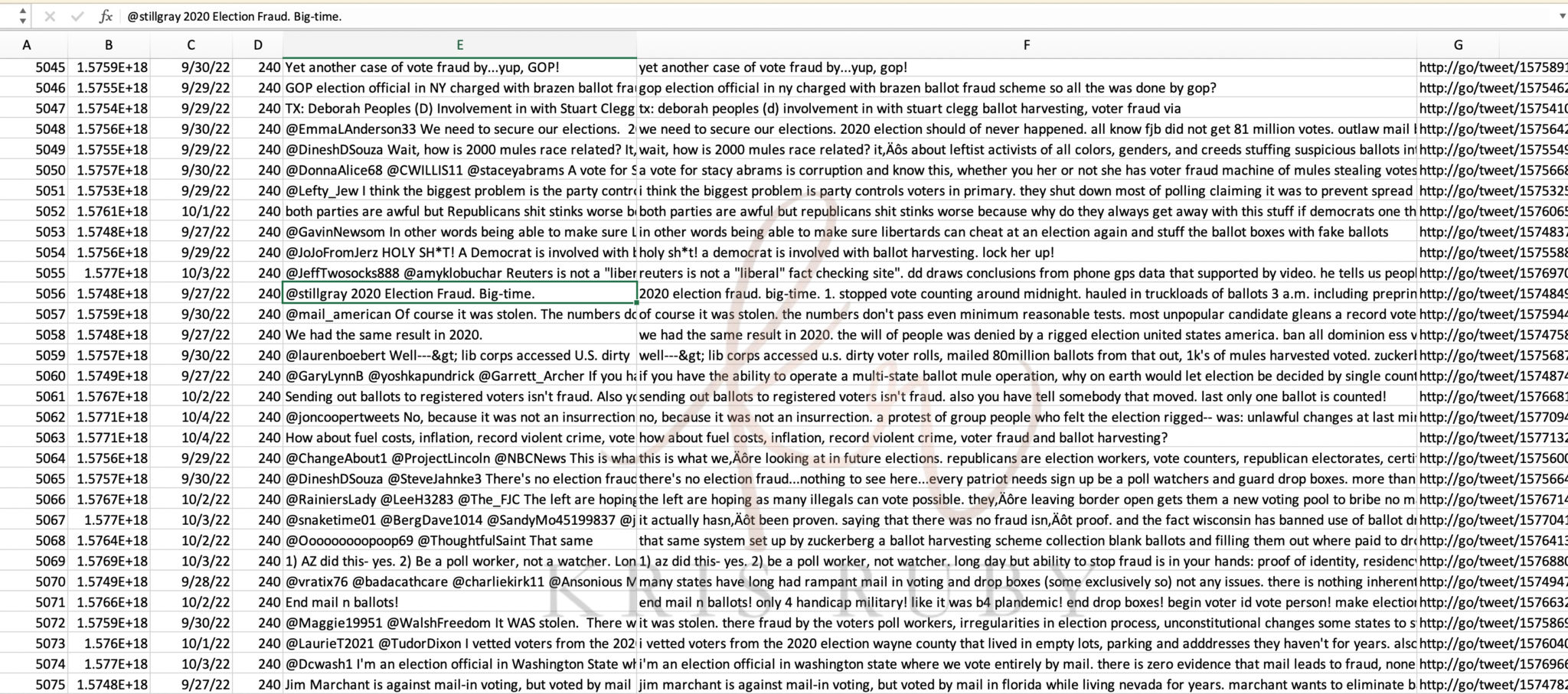

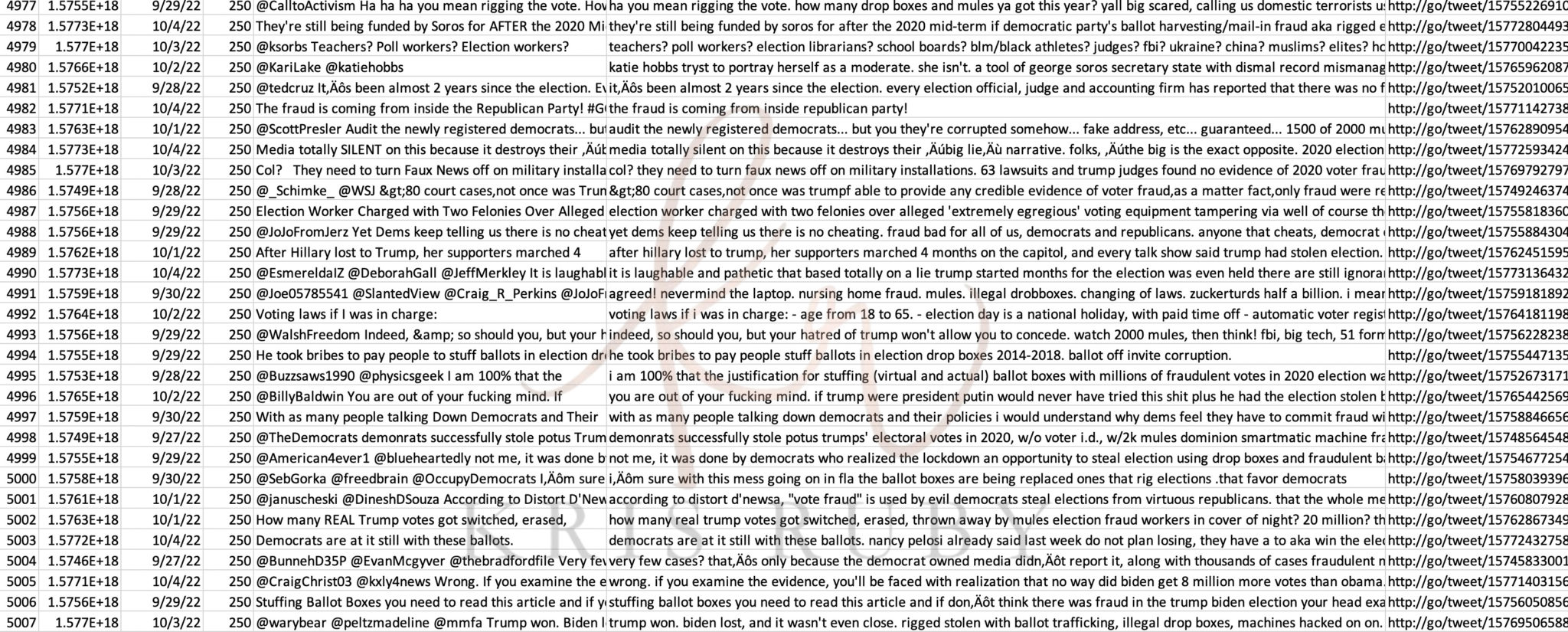

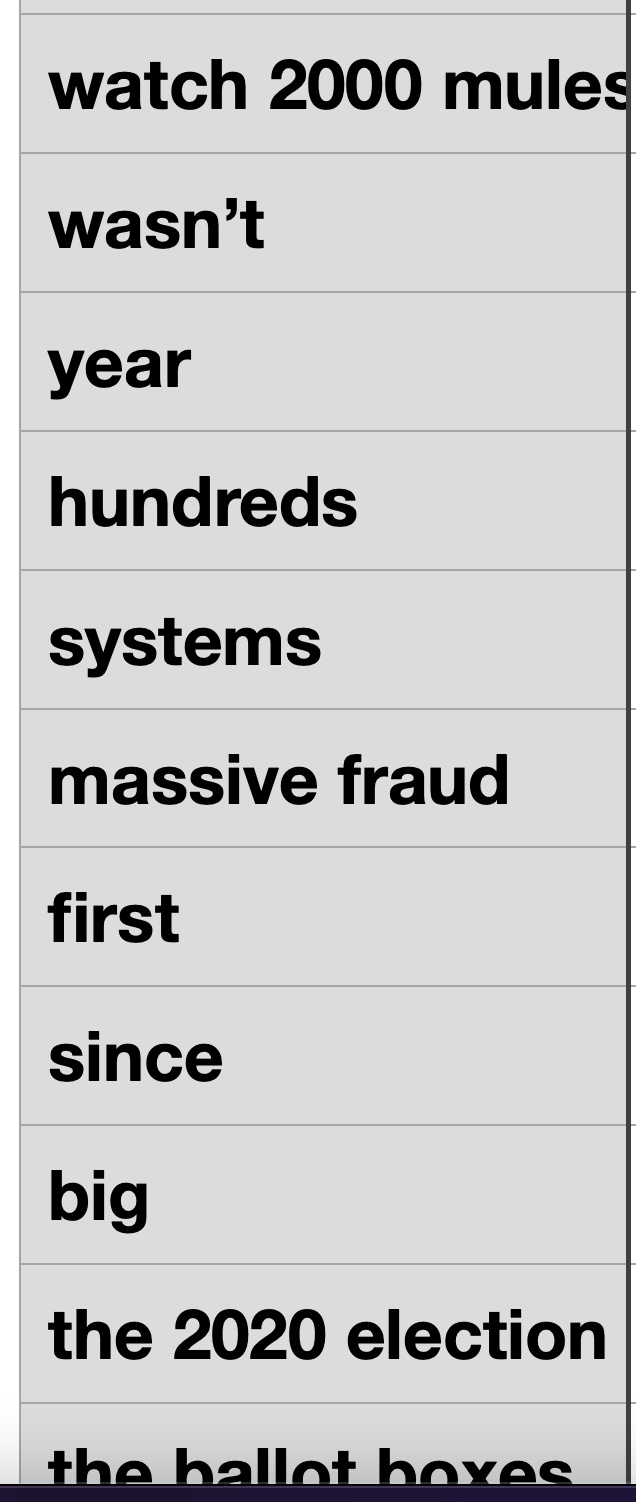

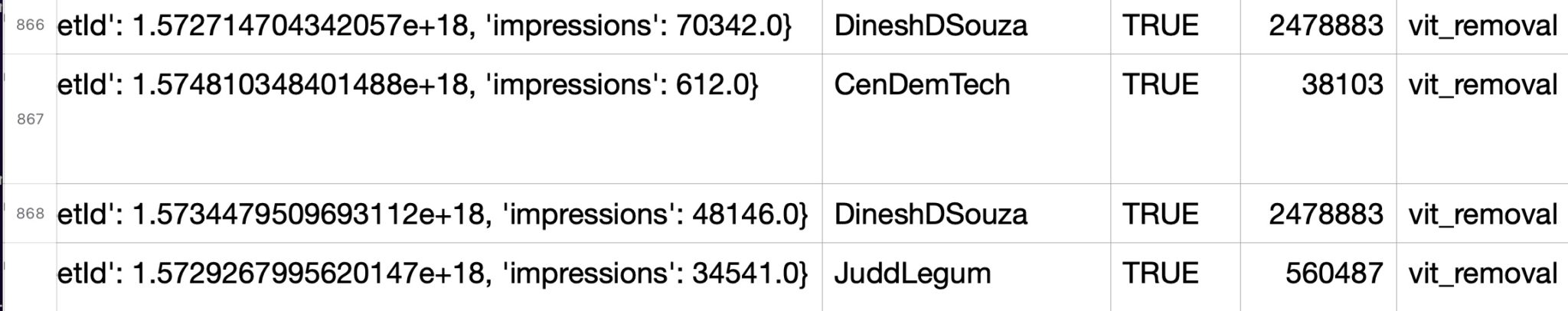

Examples of thousands of tweets marked for review that were flagged by Twitter as political misinformation:

Pictured: Sample results of the Machine Learning algorithm at Twitter in the US political information unit. When the ML scored 150 or higher, it was tagged as likely to be misinformation by Twitter.

Pictured: Sample of training data tweets deemed to be misinformation by Twitter.

*Please note, I have only shared a small sample of screenshots from the data I have.

TWITTER, AI, & POLITICIAL MISINFORMATION

What did the political misinfo team at Twitter focus on?

“We were most concerned with political misinformation. This included The US and Brazil. We focused on other countries as their elections came close.”

How did Twitter define political misinformation?

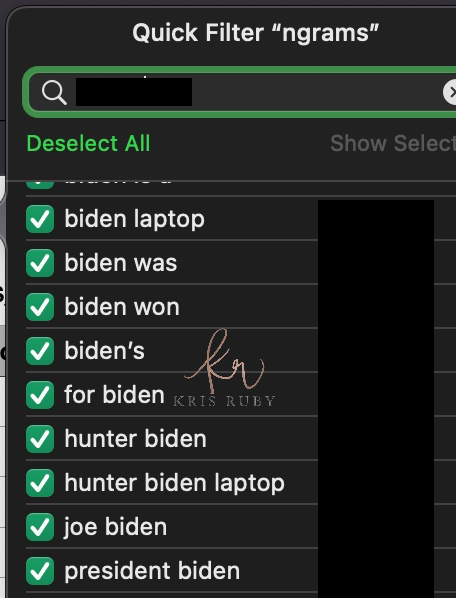

“Every tweet in this set was judged as likely political misinformation. Some tweets were banned and some were not. This dataset might show how Twitter viewed misinformation based on words and phrases we judged to be likely indicators of misinformation. For example, stolen election was something we looked for in detections. We aren’t searching for fair election tweets. Get out and vote type tweets.

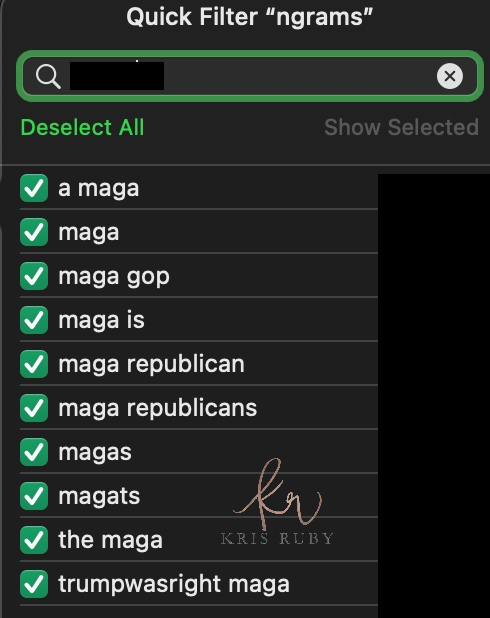

We also searched for the movie 2000 Mules, which said that Democrats stole the election. Our opinion of this movie was neutral. We didn’t judge it, but what we’d found was that tweets which contained that term were frequently associated with misinformation. If you tweeted about that, stolen elections, and a few other things, our AI would notice.

We created a probability. We would give each tweet a ranking and a score from 0 to 300. The scale is completely arbitrary, but meant that tweets with higher scores were more likely to have misinformation.

MyPillow is in there because people tweet about it in conjunction with conspiracy theories. If you just tweeted MyPillow your tweet wouldn’t be flagged. Only in conjunction and context. It is important to look at the actual tweets we flagged. The clean text is the tweet itself.

We also needed a way to look at images, which is a whole other thing (that VIT flag you see).

A VIT flag shows up in the dataset with the actual tweets and the detections. Like “VIT removal”, “VIT annotation”. It was a way to look at images, which could interpret them for an algorithm.

Then we tried to measure context. What was the relationship in position to other words?

The list of words and phrases in the detection column are things we found in the tweet in that row. It’s not the whole picture. But the sample dataset gives a portion of the picture.

Regarding Russia/ Ukraine and war images, any images or videos suggesting the targeting of civilians, genocide, or the deliberate targeting of civilian infrastructure were banned. We had an image encoder, but there were some videos and images about some of the fighting that we flagged.”

Explain what political misinfo is.

“It’s a type of policy violation. It was originally formed in 2016 in response to the numerous fake news and memes generated during that election. The U.S. political misinfo was a data science division Twitter formed in 2016 to combat misinformation.”

Is all content related to political misinformation?

“No, the political_misinfo file is, but I worked on lots and lots of stuff. Other policies are sometimes shown. Political misinfo was my primary function, but I worked on many other things.”

What is the political misinfo jurisdiction? Is this limited to elections? What about Covid?

“We attempted to cover all issues related to politics. We focused more attention when elections were nearing, but could cover lots of non-election related issues. E.g. Mike Lindell and Dinesh D’Souza were talking about the stolen election well after the election was over. We still flagged discussion. We also flagged on images of war, or violence related to politics. Lots and lots of stuff. Also important to note- the U.S. midterms happened just as musk fired half of the company. People were fired Friday November 4th. Those of us left were working on the midterms for the next week until we were fired. I don’t think I downloaded anything that final week. The political misinfo file was the result of more frequent scanning during the run up to the midterm.”

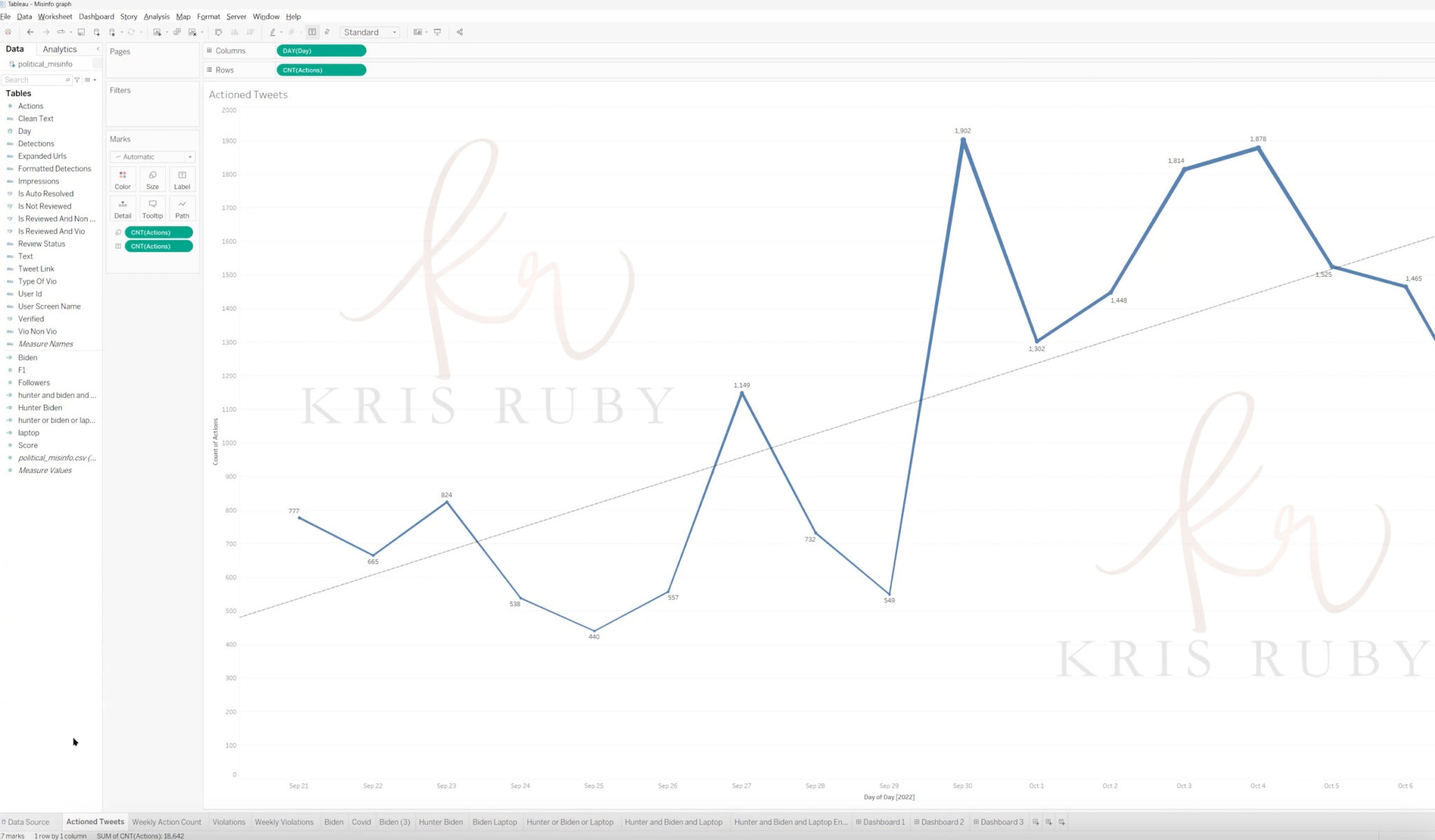

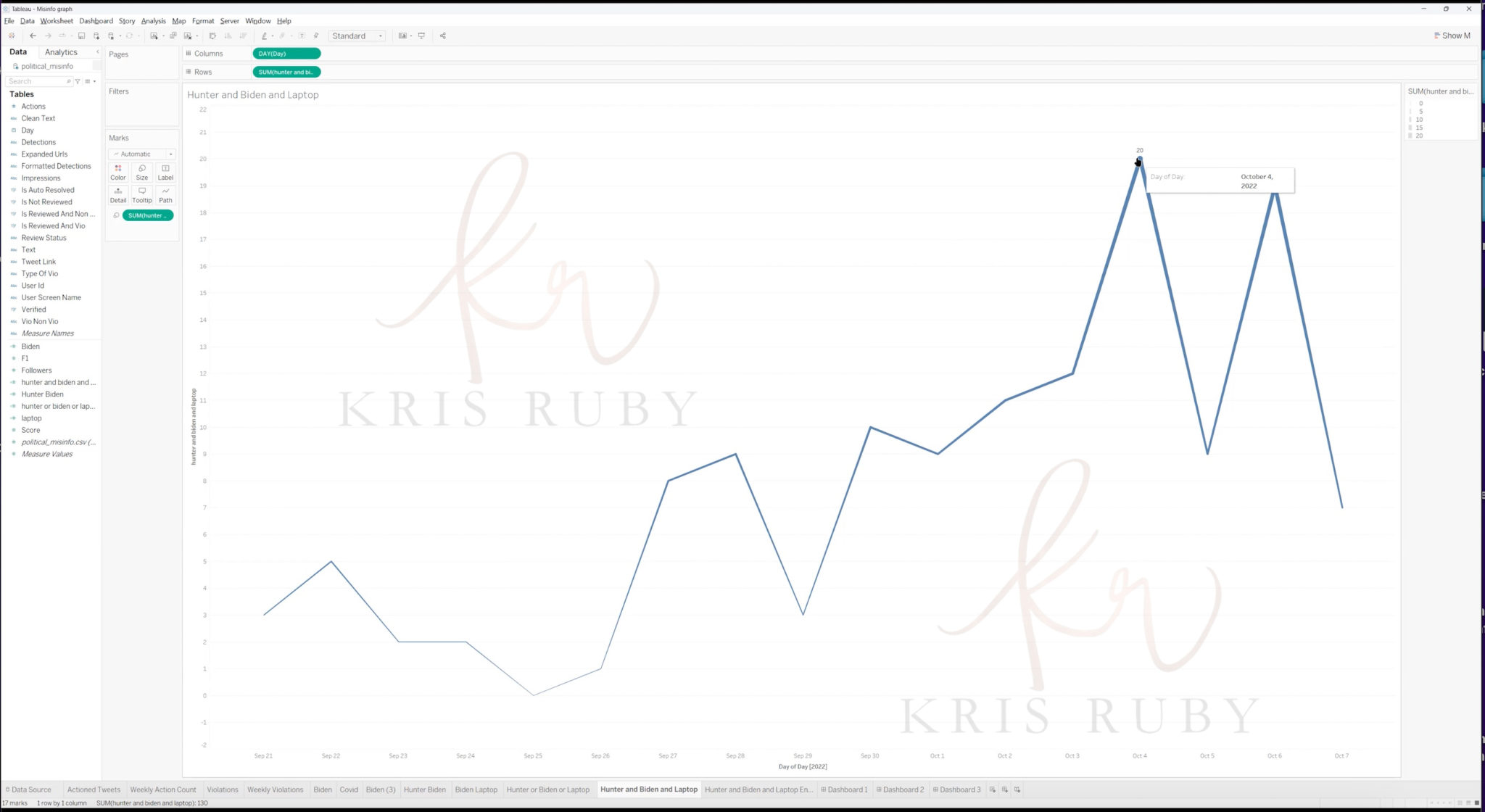

Pictured: Misinfo tweets pertaining to US midterm election.

“These are daily tweets that the algorithm actioned that fell above the threshold of 150. As we get closer to the midterm election in The United States, you can see that from September- October 7, 2022, it trends upwards. The ML algo finds more misinformation as the days get closer to the election.”

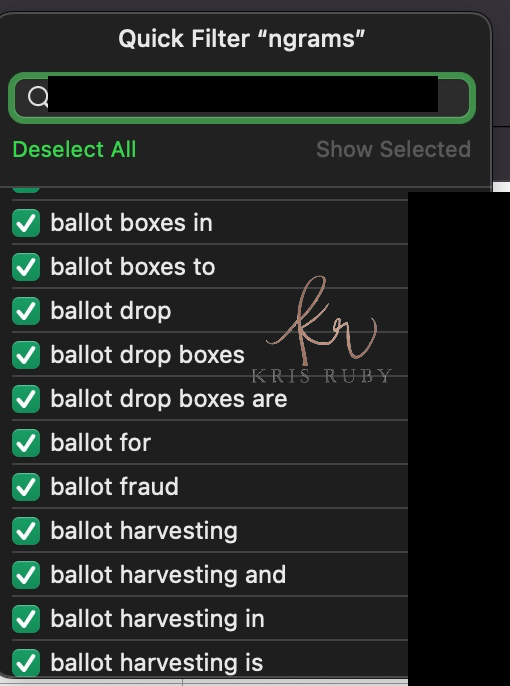

Pictured: Misinfo tweets pertaining to Hunter Biden laptop.

This image shows the number of tweets with the name Hunter and Biden with laptop in them that was caught a day during that time.’

Is the list of terms exhaustive?

“No, we covered elections all over the world. It was not exhaustive, just some we found.”

What is the date range of the data?

“Sept 13, 2021-Nov 12, 2022.”

Can you change or modify the input?

“You can have a log of the change. But very few people understand how it’s working. I had several dashboards that monitored how effective it was. By this I mean the accuracy and precision of the model. The only people who signed off on it were my boss and his boss before it was deployed and put into production. The health and safety team can’t understand the specifics of the model. They can say what they are looking for, but they don’t understand the specifics of the model. By they, I am referring to the team we worked with who helped us understand what ideas to search for.”

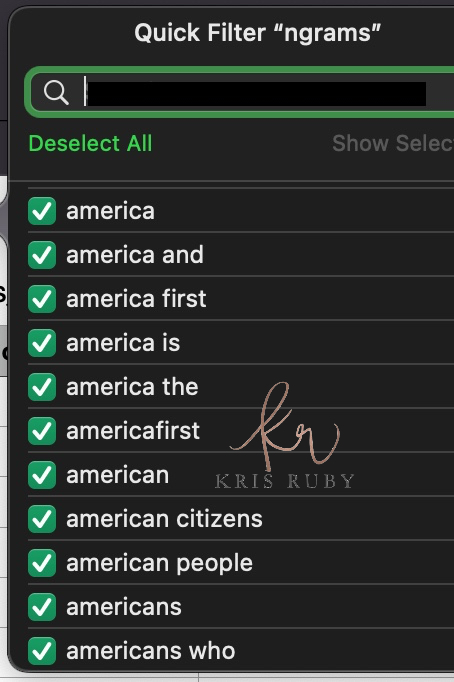

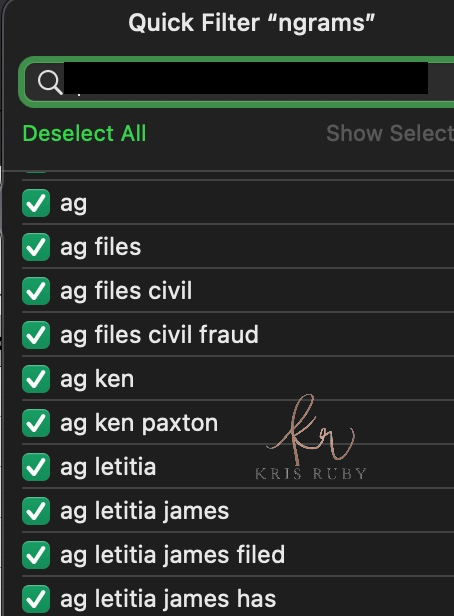

Please define N-Grams:

“N-grams include words or phrases we found in tweets which were violations (political misinformation). The us_non_vio_ngrams are the same – but no violation.

All words come from tweets judged to be:

- Political

- About the U.S. election process

We don’t have the tweets in this dataset, just words we found in tweets. This is similar to the ‘What did we action’ sheet I sent, but just words. That one was on policies which were violated. This is just the words or phrases, which led to the judgment.

Stop words are common words like ‘the’, ‘in’, ‘and’, judged to have no value to the model. They will not count in any calculations we make.

These are the things we are looking for:

(Shows me example on screen of data)

*This thread provides an inside look at the type of terms that were flagged by Twitter as political misinformation. Inclusion on the list does not mean action was taken on every piece of flagged content. Context is critical.

Building a proximity matrix. That’s what these machine learning algorithms do. You could just be saying this – but you want to judge it in context with other words. That’s the hard part. To look at them in context with other words and then to make a judgment.

(Shows me example of on-screen data)

‘Political misinformation is an even split. Despite headlines to the contrary. There is not a ton of political misinformation on Twitter. Maybe 9k per day on the busiest day when I was there.

Machine Learning was good at looking for it. The human work force could check for violations. Abuse, harassment, violent imagery- much more common- that is mainly machine learning, because there is so much of it.

All ngrams in the detections list show words or phrases being violated. Important to understand these were made in context. We tracked hundreds of n-grams.”

Twitter N Gram examples:

💎A few more Twitter N-grams for you: #RubyFiles pic.twitter.com/RgjUshonEI

— Kristen Ruby (@sparklingruby) January 27, 2023

Natural Language Processing and Social Media Content Moderation

What is the big picture?

“We aren’t searching for fair election tweets- get out and vote type tweets. This list gives you a look into the types of content we determined to be political misinformation.”

The political_misinfo file I sent has actual tweets we flagged. We scored these at or above our minimum level for misinfo. These specific tweets are shown with the words or phrases which were used to classify it that way. These were sent to human review so they could give us feedback on whether or not they considered it misinfo. In other words, did our algorithm do a good job? Other files show specific policies violated and how many times or when they were violated.”

What does the data show?

“These tweets are tweets that we considered violations of our political misinformation policy. They may or may not have been reversed later. You can assume we felt these tweets were dangerous politically. That’s why they were banned. The list of policy violations shows general policies we were looking for at Twitter.”

Why is Letitia James flagged?

“Letitia James was there because many people had theories about her and aspersions on her character. People said nasty stuff and she was also part of conspiracy theories.”

Why is a photo of a Nazi saluting a flag in Ukraine dangerous enough to ban?

“Because it was being used as a justification for war and it was a lie.”

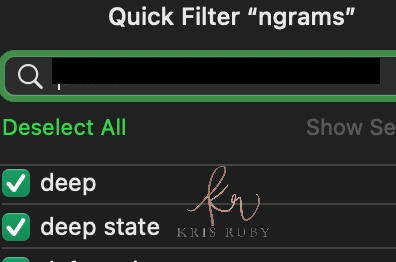

I see the word deep state. Why?

“Deep state was definitely there. It’s one of those things we judged as a keyword to indicate a conspiracy theory. However, it is important to note that word alone wouldn’t get you banned. But in conjunction with other terms, we felt it was a likely indicator. I do see that it lends to the perception of left-wing bias. Keywords are a part of NLP. The context to which the tweet is referring to is important.”

What does the Greta line refer to in the data?

“Greta trash ID refers to a photo of her circulating that claimed the climate rally events she attended had a lot of trash at them. It was an attempt to discredit her and we considered it slander. The photo was similar to this one – without the fact check sticker on it.”

What does the Brazilian protest line refer to in the data?

“The Brazilian protest videos were related to Covid. The claim was they were protests against pharma companies.”

Why was the American flag emoji on the n-gram list?

“The flag emoji, and particularly multiples were just one thing we looked for. Please keep in mind none of these are prohibited terms. We’d found them to be likely to be associated with misinformation. For sure it can seem like bias. Sorry. I know it looks like bias. I guess it was.”

What is the Rebekah Jones annotation?

“Rebekah Jones was the subject of a smear campaign and violent threats due to her involvement in Florida Covid tracking. Later she ran for Congress and all of the hate just came back up again.”

Why were certain things flagged in the data? Misc. answers on flagging:

“There were many conspiracy theories that China or the FBI were involved to help Biden.”

“It contained allegations of specific crimes. In our view, those allegations were false. We felt we had a duty to stop the spread of misinformation.”

TWITTER & NATURAL LANGUAGE PROCESSING

Why are certain phrases misspelled?

“NLP uses something called stemming. Ran, run, running are all verb tenses of the same word. NLP tries to figure out the stem of a word and only uses that. Sometimes it uses a shorter version ‘tak’ for take, taken or taking.”

Please share more about the technology used at Twitter:

“We used a variety of approaches. Python is the language we used. So NLTK, Vision Training, various natural language processing algorithms. We were using a variety of NLP models. Nobody else is using anything that is more advanced. I have visibility into what Meta is using. We used a lot of NLP and computer vision.

In terms of the database used, we interacted with data through a tool called BigQuery, an SQL interpreter (SQL stands for structured query language). It puts data into tables similar to excel spreadsheets. It pulls from a SQL database. What is it pulling? The text of the tweet. There was a table called unhydrated-flat. That table held tweets as they came in. This Python code pulled from that table.

We also used AWS to host data and deploy our models to production. AWS can interact with data in Python or JavaScript to build out data tables. In my case, the data was developed in Python and hosted through AWS.

I used many tools including Support Vector Machine (SVM), Naive Bayes. Google’s Jigsaw Perspective was used for sentiment analysis.”

What is the difference between supervised and unsupervised learning?

“AI is a series of probabilities. Supervised learning is where you ‘know’ the answer. For example, let’s assume there are 10,000 people in your dataset. Some have diabetes, some don’t. You want to see what factors predict diabetes. Unsupervised – you don’t know the answer. You feed the algorithm 1,000,000 photos of cats and dogs but don’t tell it what it’s looking at. You then see how good it is at sorting them into piles. Unsupervised learning is often an initial step before supervised learning begins. Not always, but sometimes.”

Did Twitter use supervised or unsupervised learning?

“We used both. NLP can be done either way. I happened to use supervised learning, but you can use either. Each has advantages. The point is to have lots of models running to see which works best. Largely because of the complexity of the data, no one approach is best. Not all of the models were unsupervised. Our supervised models were used in different ways. It’s an incredibly complex thing with many moving parts.

Our unsupervised model:

We were scoring based on context, so not just ‘deep state,’ but that ngram and ‘stolen election’ combined with others in a certain order and with other likely indicators such as size of following, follower count, impressions.

For our unsupervised model- we used both skip-gram and continuous bag of words (CBOW). The idea being to create vector representations. This helps solve context issues in supervised learning models such as the ones I’ve described. The embeddings help establish a representation in multi-dimensional space. So, the human mind can’t visualize beyond 3D space, but a computer can. No problem.

A classic example here – we would associate king with man and queen with woman as seen in the diagram. In short, CBOW often trains fast than skip gram and has better accuracy with frequent words. But we used both. Now, here’s the thing. Both these models are unsupervised BUT internally use a supervised learning system.

Both CBOW and skip-gram are deep learning models. CBOW takes inputs and tries to predict targets. Skip gram takes a word or phrase and attempts to predict multiple words from that single word.

Skip-Gram uses both positive and negative input samples. Both CBOW and skip grams are very useful at understanding context.

We factored our words into vectors using a global bag of words model. The NN models we used were the unsupervised ones that used embedding layers and GloVe. We felt a varied approach and using many different models produced the best results.

We used it on unsupervised models mostly. Or GloVe. But Word2Vec is better for context training. The data I showed you was on supervised models, which don’t use any word embeddings to vectorize such as Word2Vec.

To calculate multimodal tweet scores, we used self-attention, so that images or text, are masked. Our model used self-attention to compute the contextual importance of each element of the sample tweet, and then attempted to predict the content of the masked element.”

TWITTER MACHINE LEARNING (ML) MODELS & BOTS

How often was the model updated?

“It was updated and retrained weekly near an election and monthly otherwise.”

How many bots are used at any given time?

“There are multiple bots running in the background all the time.”

Shows me an example of a flagged tweet on screen:

“I used Python to find this. I wrote the script.

Trust and Safety helped us to understand the issues. I deployed it. We used AWS SageMaker to put ours into production. There are lots of bots all the time and we are always ranking them against each other to see who was doing a better job. They are not very good. They have a 41 percent rate of success.

There are two ways you can get it wrong.

- False positive – you say something is misinformation and it’s not.

- False negative- where you say something is safe and it is misinformation, abuse, or harassment.

If you feel these things are not legitimate, then you feel that there is bias.

These are what we made a decision on.”

How did AI detect bots on Twitter?

“Sometimes the AI data would show who is a bot. It’s hard to find out who is a bot. If you tweet a bunch in a short period of time that can be a signal. It’s been a continuing issue at Twitter that we never fully solved. There definitely are bots on the platform but they are notoriously difficult to detect. Easy to deploy. Difficult to detect.

It is a tough problem to root out bots. That’s why we had such a difficult time. It’s hard to really know what’s a bot and what is not because they can be created so rapidly. Musk himself acknowledged this too.”

You stated there were two different types of bots on Twitter. What is the difference between both types?

“We wrote scripts – known as bots – autonomous programs that performed some task. In our case, the bot was looking for political misinformation tweets. Bots can also be fake or spam accounts. It is still the same idea – an autonomous program that performs an action – in this case searching for info, or trying to post links to malware, etc.”

Were different algorithms involved?

“Yes. For example, that is a different algorithm that affected the home timeline. To the extent that they would affect them is if your tweet was flagged by an algorithm that a data scientist wrote and kept off the general home timeline would still show up for the timeline of the person’s followers.

The U.S. bot tweet file shows things flagged by our bots or algorithms.”

TWITTER TESTING & MACHINE LEARNING EXPLAINED:

Please explain what testing means in the context of AI/ML:

“Testing is when you have a machine learning algorithm and want to test to see how good of a job it did. The relationship between training and test data is that training data is used to build the model. Test data checks how good the model is. All data is preserved. When new data comes in (new tweets), we test our model on that data. That is why it is called machine learning. It learns from new data. In machine learning, we have a dataset. Let’s say we have a dataset of two million tweets. I split that data into a training and testing dataset.

Let’s say 80% training and 20% testing. I train my model on the training dataset. When that training has occurred, I have a model. I test that model, which is a ML algorithm, on the test dataset. How good a job did the model do at predicting with actual data? If it is very bad or doesn’t get many correct, I either try a different model, or I change my existing model to try to make it better.

Machine learning models ‘learn’ by being trained on data. Here are one million tweets that are not violations- here are some that are. Then you have it guess. Is this tweet a violation? If it guesses it is a violation, and it is, that is a true positive. If it guesses it is a violation and it isn’t, that is a false positive. If it guesses it is not a violation and it is, that is a true negative. If if guesses it’s not a violation and it is, that is a false negative. The idea is to get the true positive and negative up and the false positive and negative down. New data helps with that.

If your tweet scores high enough, above 150, our algorithm could ban, annotate, or suspend the account, but we tried to be selective. In the tweets I sent over, not all that scored very highly ended up being banned.”

I predict Twitter Spaces data could be used to train a model.

“Yeah. Definitely. Anything on the site is fair game. We used everything to train.”

ML EXPERIMENTS

What is in the machine learning database experiment list?

“Those were all of the experiments we were running in our A/B test tool. The one where we ran our suspension banner experiments. The tool was called Duck Duck Goose.

Whenever I pulled data from this table, I always filtered it to just my experiment data.We started in December and ours ran for nearly a year.I probably ran a query to give me a unique list of names of all experiments in the table. I think I noticed some crazy names and was like ‘what is this?’

This is also many years of data. I intended to investigate at some point. That’s probably why I downloaded it. But you know how it is. You get busy with other stuff. It is always easy to say ‘I’ll look into that later’.”

🔬 We are all lab 🐀 in a giant social experiment 🧪#SocialMedia #Twitter pic.twitter.com/PIvBBfN0QO

— Kristen Ruby (@sparklingruby) January 11, 2023

Did these experiments run? Were they live?

“Do I think they ran? Yes. They wouldn’t be in the table if they hadn’t run.”

Is visibility filtering ever positive?

“It’s usually a content moderation tool. I can’t think of any other use. I just don’t know the context here re what was being filtered and why.

In an A/B test, you are testing how people will react to a change. You randomly assign a group into either an A group or a B group (in our case test and control instead of A and B). Then you show one of them the change and do not show it to the other group so you can test how people react to the change.

The numbers attached to the end are catalogue numbers. We had to assign some unique id to track. I think that’s what they are.

Lots of groups use A/B tests. They are beloved by marketing and sales for instance. Those are names of tests. A holdback is a group that will never be exposed to the test condition.

You have a source of truth for how people react. It’s usually quite small. 1% in our case. This was not limited to data science. That’s why I have so little visibility.

Is machine learning testing ever used for nefarious purposes?

“Yes. Testing can be used for nefarious purposes.”

What is the process for gaining access to run an experiment?

1) You need to apply for permission.

2) Once permission is granted, you need to apply to run your experiment. They took it pretty seriously. You’d need to fill out a formal application with the scope and written statement proving a business case.

3) It was moderately technical to set up. Not like PhD level, but you would definitely need to have help or take your time to figure it out.

4) Once it ran, you didn’t have to touch base except to increase or decrease the sample size or to end the experiment.

TWITTER, CONTENT MODERATION, & AI

Discuss the content moderation process at Twitter and the role of AI in it:

“If you got a high score and we marked you- we might just suspend you. We might just put you in read-only status, meaning you can read your timeline, but you can’t reply or interact. Read-only was a temporary suspension.14 days typically triggered to a certain tweet. That is different from permanent suspension, which could include violent imagery.

Direct threats of violence would be triggers for immediate banning. Abuse was our largest category of violations. There were hundreds of models running all the time.

In terms of work on the appeals process- users were complaining that they didn’t even know what tweet they were banned for and that was a problem. We worked hard to identify the tweets so they would know.

AI can set a flag on a tweet and perform an action automatically. If it was later determined that the flag was set unfairly or incorrectly it could be removed, but I don’t know if that was done by AI. AI was flagging tweets for suspension. Removals had to be done by appealing or second pass. The AI went through with second pass. You could appeal and it would be seen by a human. You could appeal and it would be seen by AI. If you were seen by AI the flag could be removed.

AI would evaluate it. If you appealed your suspension and another model evaluated your tweet, it could remove your suspension. AKA, if it appealed -the second model not the model that logged you. A different model. If it determined with a high degree- in the second round -higher degree of accuracy that the tweet was in violation- we might uphold the appeal. If it was not sure at the high level, then you might get sent to human review. Also, your appeal may go to human review instead of another model.

It sounds dystopian. Part of the reason we did that was the sheer volume of what we had to deal with.

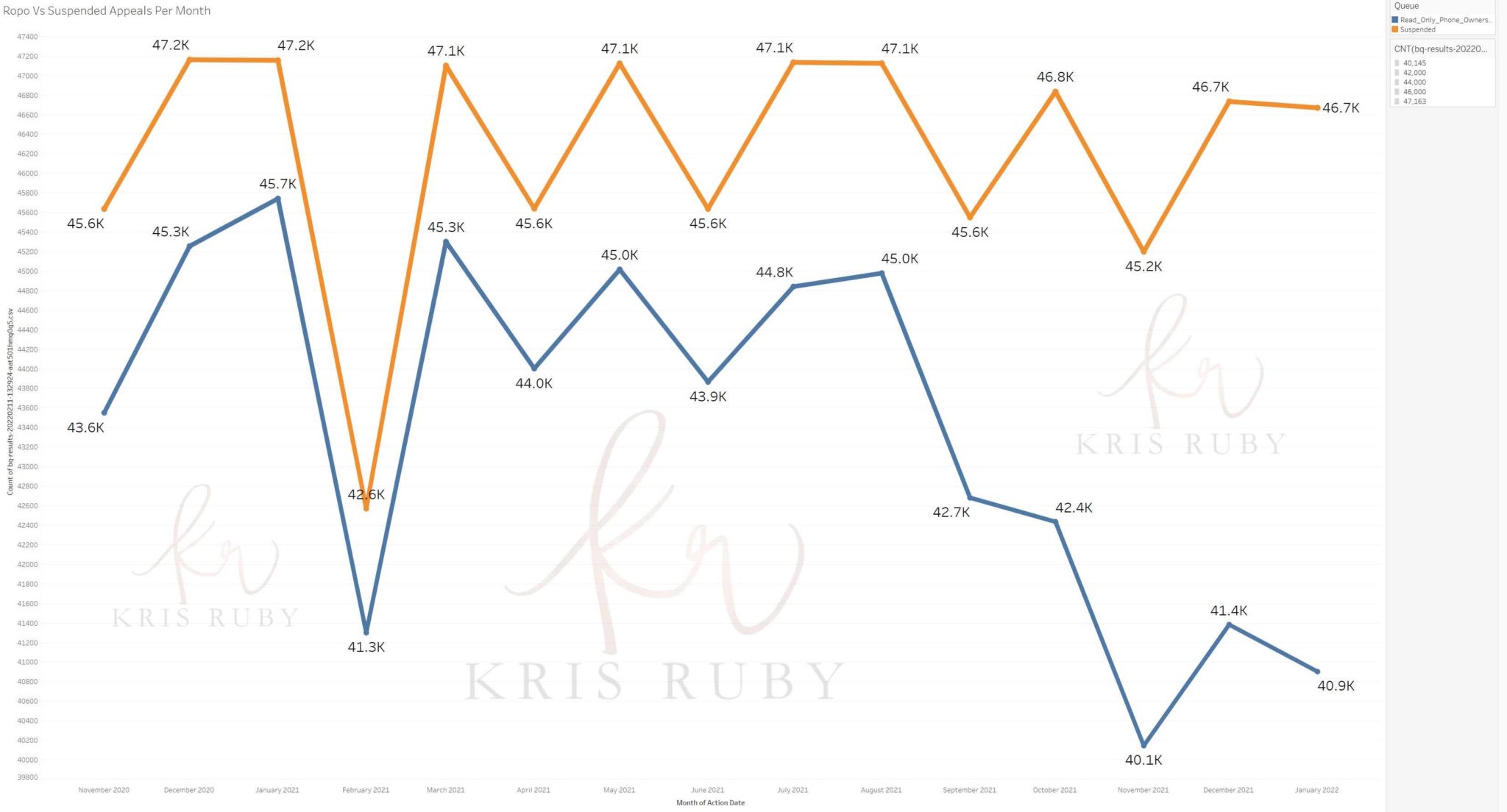

Here is a screenshot comparing the number of appeals per month between permanent suspensions (i.e. you are banned entirely) and read-only phone suspension or ROPO, when you are temporarily blocked for a max of 14 days, or until you perform an action such as deleting the offending tweet.”

What is an action?

“An action is any of the following:

- Annotate

- Bounce

- Interstitial

They are all actions. Bounce is suspended. Annotate and interstitial are notes we made for ourselves to resolve later.

This data is related to an appeals project I worked on. Previously when a user was suspended, they wouldn’t even know they were until they tried to perform an action (like, retweet, or post) and they would be prohibited.

We built a suspension banner that told users immediately that they were suspended, how to fix it, and how long they would be in suspension. These files are all related to that. We wanted to see if that banner led to more people appealing their suspensions (it did), because that might mean we had to put more agents (human reviewers) on the job because of increased workflow.

The data is all aggregated with daily counts, time to appeal, etc.

We were trying to roll out a test product enhancement that would show users who had been suspended a banner that contained information on the suspension.

For this specific ML experiment, we used test and control groups so that we could see if users shown the banner had different behaviors.

- Did it take them longer or shorter to appeal?

- Did it cause them to appeal more frequently?

Users in the test column were shown the banner and those in control were not.

Column E is time to appeal. I was trying to calculate if they were appealing faster with that banner.

The banner was a little rectangle at the top of the screen that couldn’t be dismissed. It would show:

- Your suspension status

- Time remaining in suspension if not permanent

- Links to appeal and information about where you were in the process

The control group was not shown a banner.

So, the end of this test was that it increased the appeal rate – users were more likely to appeal – this had effects on human reviewers downstream who might have a bigger workload if more people appealed. Also, time to appeal was reduced. People appealed faster which makes sense. They did appeal faster and were more likely to appeal if they saw a banner.”

What is the takeaway in this dataset for non-technical people?

“I think the takeaway from this dataset is the extremely high number of appeals daily. Remember, there are about 500 million tweets every day and that number of appeals suggests people were not happy, thought the ban was wrong, etc.

The second takeaway is appeals for permanent suspensions (what we just called suspensions) are way higher than bans for temporary ones (we called read-only phone ownership).

This makes sense, because you may be more likely to appeal if you’re permanently banned than if you’re only locked out for a bit.

I don’t have the overturn rate, but it was fairly high as well. Meaning, your ban was overturned on appeal.

The time to appeal (TTA) metric was an attempt to calculate time to appeal. This was part of the in-app appeals project, which would make the entire appeals process easier and more transparent.

We were trying to measure time to appeal because we wanted to get a sense of the effect this new process would have on total appeals, time to appeal, etc. The idea is, if more people are appealing, more agents should be allocated to review those appeals.”

What is guano?

“Every database serves a purpose. Tracking actions or tweets we had probably hundreds if not thousands of databases each with several tables. The raw data existed in a guano table in each (most) databases. This was called guano because they had dirty non-formatted data. You could also make notes in them. If a tweet was actioned it means we marked it in some way, it would have a guano entry. Sometimes we would take notes on a tweet and those tweets would be collected in a guano table.”

TWITTER DIRECT MESSAGE ACCESS:

Could employees read Twitter users DM’s (in your division)?

“Yes. We could read them because they were frequent sources of abuse or threats. Strict protocols, but yeah. So, if I am being harassed I might report that so Twitter can take action.”

What protocol was in place for access to confidential user data and Direct Messages?

“We dealt with privacy by trying to restrict access to need to know. As far as bans, the trends blacklist indicates it on a list of trends blacklisted- meaning it violated one of our policies. The note up top is interesting. We couldn’t take action on accounts with over ten thousand followers. That had to be raised.”

Did you have access to private users Direct Messages?

“Yes. We had access to tweets. That is how we trained our models. We would make a tweet as misinformation (or not) or abuse or whatever like copyright infringement. People who had access were pretty strictly controlled and you had to sign off to get access but a fair amount of people working in Trust and Safety did have it.”

SHADOW BANNING & VISIBILITY FILTERING ON TWITTER:

Did Twitter engage in shadow banning?

“I don’t mean to sound duplicitous, but let’s first define shadow banning. Twitter considered shadow banning to be where the account is still active, but the tweets are not able to be seen. The user is literally posting into thin air.

Here is what we did. We had terms which, yes, were not publicly available, along the lines of hate speech, misinfo, etc. If your account repeatedly violated this but we decided not to ban you- maybe you were a big account, whatever- we could deamplify. That is what I mean by corporate speech seeming shady. So, your tweet and account were still available to your followers. They could still see, but the tweets were hidden from search and discovery.

We tried to be public about it. Here is a blog post from Twitter titled ‘setting the record straight on shadow banning.’ Not a perfect system. You could argue, reasonably, I think, that this is shadow banning. But that’s why we said we didn’t.”

Were you aware that users were publicly banned from searchers? How much if this was shared with employees vs. limited to certain employees?

“Me? No. I didn’t know. Those screenshots are from a tool called Profile Viewer 2 (PV2). If it is data that is captured, as this data was, someone could see it and was aware, but not me. Those are tools for people we called agents – people who manually banned tweets or accounts. I was definitely an individual contributor and didn’t have the full picture.”

Was shadow banning and search blacklisting information shared with other teams?

“No. Heavily siloed data. I literally just counted violations to see how good our algorithms were performing. That is part of content moderation, technically called H Comp. So, they were aware of it, but not me. Few employees were aware. Managers in the thread of former data scientists are like, what?

There is a disconnect between what Twitter considers censorship and what others in public consider censorship. Twitter feels it was very public about what it was doing, but this was often buried in blog posts.”

Source: Twitter blog titled “Debunking Twitter Myths”

POLITICAL MISINFORMATION, TWITTER, & AI:

What outside groups provided input on words and phrases deemed political misinformation?

“Typically, governments, outside researchers and academics and various non-profits could submit words. We didn’t have to take any of them, but I’m sure we took requests from US government agencies more seriously.”

What are some examples of outside groups you worked with or that provided input?

- CDC

- Academic Researchers

- Center for Countering Digital Hate

- FBI

- Law Enforcement

There were others. I don’t know all of them.”

Who are the key players involved in content moderation and who did you collaborate with both internally and externally?

- “Trust and Safety. We worked with policy and strategy, people who were experts in their country to understand the laws, customs, and regulations of the country. We got information from the government researchers and academics. This helped us formulate policy not just in the US, but around the world.

- Data scientists writing machine learning algorithms.

- Human reviewers.”

Who created the Machine Learning (ML) dataset at Twitter?

“We worked heavily with Trust and Safety. Machine learning works on probability. We said if there are that many things I am seeing in cell h9 -ballot detections- these are all the things we found in this tweet- that’s what makes it have this score of 290.

I wrote the scripts. Trust and Safety helped us to understand the issues. I deployed it and put it into production. Data scientists write the algorithms. Trust and Safety regularly meets with a data science department dedicated to political misinfo.

Who was responsible for deciding what was deemed misinformation? Who told you the model should be trained on those parameters?

“Trust and Safety mainly. We worked with Trust and Safety, who provided many of the inputs. Algorithmic bias is real. Machine learning reflects those biases. If you tell a machine learning algorithm that tweets which mention the movie 2000 Mules are more likely to contain misinfo, that is what it will return.

We met regularly with Trust and Safety.

They would say, ‘We’d like to know X.’ How many vios on a certain topic in a certain region? We could help generate dashboards (graphs all in one place) for them. Then, they could also help us understand what terms to look for and it was all part of our process.

We worked within the systems to build our models so they would conform to the policies and procedures laid out by Trust and Safety. They were policy experts. They understood the issues. We were ML experts. We knew how to code. That’s how it worked.

Also, the strategy and politics and health and safety teams were involved. We are in meetings with them. They say, these are the things we are finding. We then write algorithms based on that. How good of a job does this do? We also worked with human reviewers to make our algorithms better. Other people helped to set the policy. I am not an expert at elections that I worked on. That is why we had to have these people to help us understand the issues.

The terms were created by working with the Trust and Safety- who were the experts. Also, by working with the government, human rights groups, and with academic researchers. That is how we got wind of these terms.

On the question of if we were looking for conservative specifically- the terms we looked for included stolen election, big lie, Covid vaccine, etc. That is what we looked for.”

You worked with the US government?

“I mean like- yeah. We definitely worked with various government agencies. Did we take requests from The Biden White House? I don’t have any knowledge of that. I do know we worked with certain government agencies who would say these were things they were seeing that were troublesome to them. We worked with government agencies around the world. We had to make internal decisions as to what was a legitimate request and what was not. We got the terms from Trust and Safety.”

TWITTER, AI, & CONTENT MODERATION PROCESS:

Describe the content moderation process at Twitter and how AI was involved:

“Agents or human reviewers could get wind of a tweet through somebody reporting it. The tweet had to reach a certain critical mass of people. If enough people reported a tweet in a short enough time, then it would come up for human review. It had to be reported by enough people then a human could review the specific tweet and make a decision based upon what they felt.

My algorithms were patrolling Twitter and trying to find violations specifically in political misinformation.

(Showed me via video example of tweets that violated misinformation policies around politics).

This is not a complete list of everything we looked for- just the terms found in a specific tweet. It’s not the whole picture, but a small slice of data opens a window into a subset of it.

You could be banned by the algorithm. You could be banned by the person.

By far, the algorithm was banning a higher number because there are just so many tweets. There are multiple bots running all the time.”

How many people signed off on this?

“I would develop the model. The only people who really signed off on it were my boss and their boss before it was deployed into production. The health and safety team can’t understand the specifics of the model, but we can say this is what we are looking for based upon what you’ve told us. They can say that sounds great and then we can show them what we have found and they can say that looks good. As far as the specifics of the model, they don’t understand it. There are only a few people trained to work on this. Very few people understand how it works. I had several dashboards monitoring how effective it was. If we suddenly saw a drop, somebody might take notice. We reported the results out. The system is pretty fragile in that way. We were aware of the inputs. I never thought of myself as choosing them.”

Is there is a misconception that humans are more involved in content moderation decisions than they really are?

“Yes, it’s an incredibly complex system with many competing ideas and models and it’s always evolving. If you check back with me in a year, all of this may have been replaced with something new. The application involves complex math – graduate-level statistics, calculus, etc. To implement a model, you also need a deep knowledge of coding and the ability to put your model into production. It’s very complex and many people are involved. I think people don’t really know how much these algorithms run the Internet, not just content moderation. They are responsible for much of what we see online.”

vIT is a tool we used for vision information technology. Vision transformers- ways of scanning -was a new tool we were testing to see if it was as effective as the old tools.

Here is an example of some of the list of words we were looking for:

Pictured: Examples of words Twitter was searching for.

All these things contribute to a score.

(Shows me on a video interview walkthrough of how the process worked)

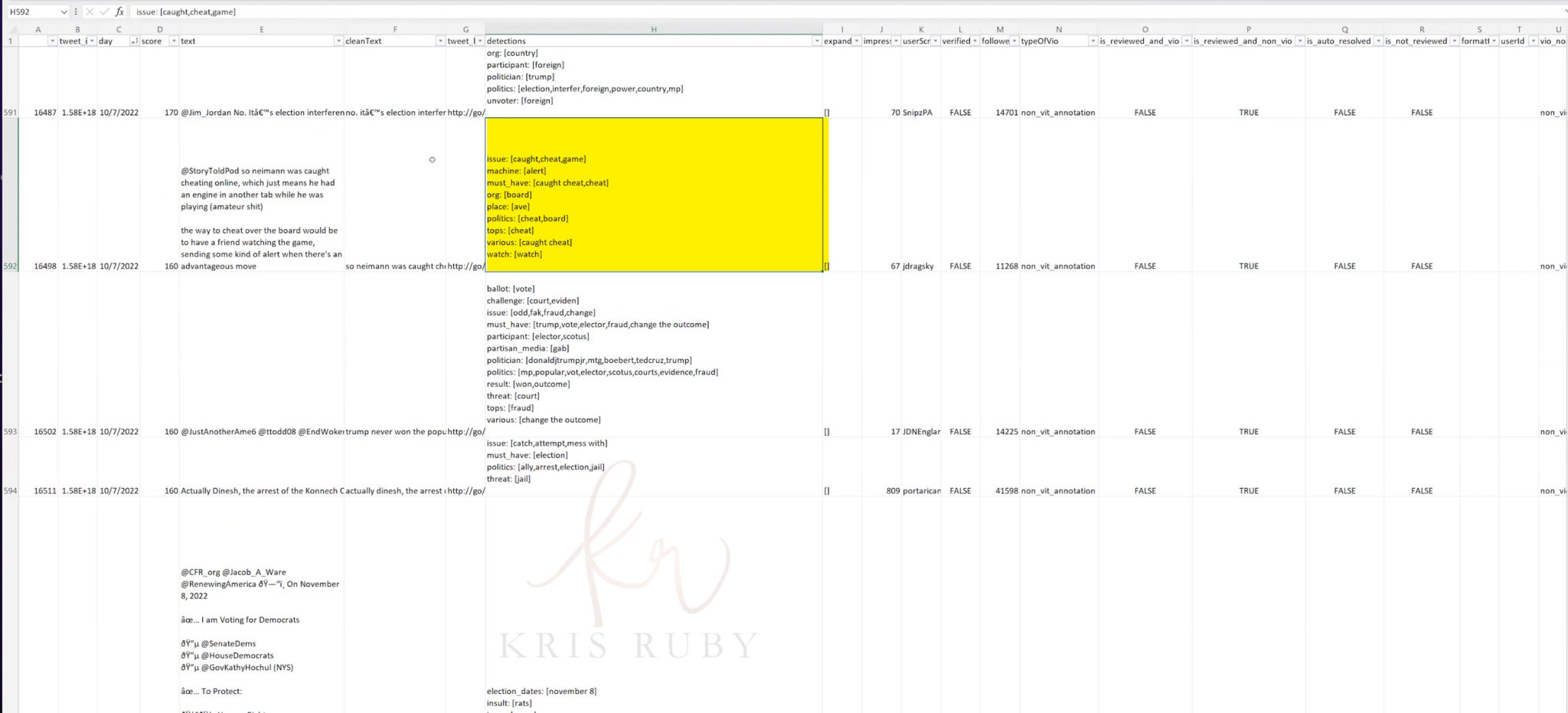

Example of flagged tweets:

Shows me more tweets:(the quote below is not related to the specific quotes in the above examples)

This person received a 160, which is just above the threshold of being flagged. You have to get a score of 290 or above. 290 means we have judged this tweet as the most likely to have misinformation, but it isn’t twice as likely as 150 it’s just more likely. 150 or above you can flag it automatically. It is not an absolute scale. It is not as if 290 is twice as likely as 150- it is just more likely.

We used models from SVM, to neural nets to look for these terms. It wasn’t one size fits all.

The baseline score is 0. A score of 150 or higher meant it was flagged. That’s why only 150 or higher is in the dataset.

Pictured: Twitter ML 290 ballot detections flagged tweet example.

The detection list shows you what we are looking for with AI. The terms under the detection column are what flagged it (the tweet).

Pictured: Example of ML detection list at Twitter. Detections were words that Twitter pulled out of tweets with ML.

Caught, cheat, game, machine, ballot detections. All of these things (terms) contribute to the score.

We found this in the tweet that we then flagged that added up to the score of 290. If it’s 150 or above, you can flag it automatically. This is a straight NLP algorithm that removed this. Is reviewed and violated. In this case, it was false.

These tweets ended up being reviewed by a human at some point. Flagged, sent for human review, and a human said this is or is not worth removing. This tweet that replied to Warnock by Bman is reviewed and violated (false). This was found not to be in violation.

Detections- that is not me. That is not a human. That is the NLP- sorry. It is running in the background. We are always looking for this stuff (these words). Me and other people program it.

This is an example of a straight NLP algorithm that removed this content.

The computer shows: “Is reviewed/violated.”

Auto resolve. Did a human being actually look at this?

If it is auto-resolved, flagged, sent for human review, and human said this is or this is not worth removing.

Is reviewed and violated- false- found not to be in violation.

That’s not a human. That is running in the background. We are always looking for this stuff. Me and other people program it.

Pictured: Example of flagged tweet mentioning Hunter Biden laptop

This tweet is about suppressing the Hunter Biden laptop story.

If there are that many things- ballot detections, etc. These are all the things we found. That is what makes it have this score of 290. This was removed through non-VIT-removal our regular NLP algorithm.

How the model was trained:

The file includes actual tweets used to train the model. The n-grams sent were specifically being used as part of a model to determine if the entire tweet should be marked as misinformation.

All of the confusing column names VIT_removal, vio, non_vio, etc. weren’t put in manually. The annotations or bans had already been enacted and were being sent to human review. Those were records of what the algorithm had already done- not manually entered.

The detections went into building the score. Some were weighted more heavily. Obviously, we got some of them wrong, but we would send these to human review. They had the same terms they were looking for. So, it was a common vocabulary. That’s why we included them- for reference.

Regarding violations over time, we could break apart arrays and count how many times each term got banned. We could look at the data in the detections and look at the terms versus the score. We could look at how many times certain users were banned in that short time frame, etc. Nothing in isolation, but always in contact.”

Can you explain what we are looking at in this dataset?

“A list of specific tweets, some from large accounts, some from small accounts that were banned for the reasons you’re seeing in the files. You’re also seeing a list of policy violations and how many times they were flagged. This is the guts of the machine learning model at Twitter. It’s not pretty or simple. It shows the things Twitter cared about enough to suppress. You can look at the list and say “Nice job, Twitter,” or you can look at it and see Liberal bias.”

Hi @foxnewsmom Were you temporarily suspended on Twitter on or around 9/26/2022?

If so- what was the reason?

This is in regards to a story I am working on that is of public interest. Thank you. pic.twitter.com/5gTMbgdnoI

— Kristen Ruby (@sparklingruby) December 30, 2022

Where did Susan go? 🧐 pic.twitter.com/o7wUbIHpBY

— Kristen Ruby (@sparklingruby) December 30, 2022

Pictured: Actual tweets marked as political misinformation by Twitter.

These are tweets we flagged for removal algorithmically. Some were held up, some reversed.

We looked for terms. If there were enough of them – your tweet could get flagged. We worked with Trust and Safety, who provided many of the inputs.

But it is important to note that we also searched for context. These are words and phrases we had judged when in the right context were indicators of misinfo. Context was important because a journalist could tweet these terms and it would be okay. One or more of them was fine. In combo and in context they were used to judge the tweet as misinformation.

The files show internal Twitter policies that could be violated and how some specific tweets were actioned.

If you search for any tweet in the file and it’s not there, that means it was taken down. I found some where the account is still active but the tweet is not there. Real data is messy. Most of the tweets have a delay between tweet date and enforcement date. I think they told them remove this tweet and we will reinstate your account.

Babylon Bee misgendered Adam Rachel Levine in a joke tweet. He was banned for it and told to delete and they would be reinstated. They refused and then Elon Musk bought Twitter.

Look at the first two lines in political misinfo. The first tweet by someone named ‘weRessential’ which starts with ‘as we all know, the 2020 election…’ that tweet is marked as a violation. Column O ‘is_reviewed_and_vio’ is true. The user still exists. That account is there, but the tweet is not. It was a violation. The next line from screen name ‘kwestconservat1’ was also flagged but found not to be a violation. See the ‘is_reviewed_and_vio’ is false. That tweet is still up.If you filter in that is reviewed and vio column as true- you can look to see if the tweet is still there and if the account is even still there.

Sometimes a tweet was found to be in violation and then the decision was later appealed or overturned and the tweet reinstated.

- The misinfo tweets were already live.

- Is_reviewed_and_vio = true is the row to look at.

These were definitely violations. Many of these are no longer active (they were taken down). If you search the clean text of the tweet and it’s not there and Twitter gives you no results- that means it was taken down.Everything was taken down at some point. But users could appeal. Some appeals were granted.

So the very first line- it’s not there anymore. The second line is. Another example – I found someone whose account was up but the tweet was down. That is pretty good evidence, if not proof that these were tweets we restricted.

Another example in the dataset- “Yeah she got suspended by us.’

If the account is still up, but the tweet is down, it shows the tweet was in violation and they deleted it. How would you know what they tweeted except you have that spreadsheet of their data? She was suspended for the reason your file shows. That means it’s not a test. It’s real. It proves the terms in the list are real.

If your account was suspended, even temporarily, it is an enforcement. Would you rather be suspended temporarily or not at all? It’s a pain to go through the whole process.

They weren’t looking for tweets about An Unfortunate Truth. They weren’t suspending over the Jon Stewart movie. They were suspending over 2000 Mules.

If the cops come and arrest you and put you in jail any time you mention how corrupt they are but they always let you out, is that not still intimidation?

This proves the n-grams are real. The data allows people to objectively look at it and say, ‘look at all these tweets that aren’t there anymore.’ Twitter took them all down. That was pretty common actually.

Were public figure accounts treated differently?

“In general, if an account had over 10k users, we couldn’t suspend. It was considered a political decision then. As in Twitter politics. You didn’t want to ban Marjorie Taylor Greene, because it made you look bad to ban high-profile Republican accounts.

There were two sets of rules at Twitter. If your account had more than 10k followers, you could not be algorithmically banned. If a tweet from a large account was labeled an infraction- it went to a special group.

I don’t know why they didn’t tell people about the large account limit. If said in conjunction with other phrases, 2000 mules could cause someone with under 10k followers to be banned by the algorithm. But not someone with more than 10k. That would go to another team.”

How can one prove that these are real and were live?

“You have the files of policy violations and actual appeals. Why would you appeal a training data set? You have all these appeals for all of these policies. Those actually happened. People got their accounts suspended for violating those policies. You have the n-grams we were looking for. No one makes a whole model for their health. It takes work and time

Let’s say this was test data. What are we testing? Made up words? Why are we running tests on these n-grams? We could have chosen any words in the world, but we chose these. If I test, I want to see how my model performs in the real world.

This is a list of annotated terms from our AI. AI didn’t come up with them, but did detect them. These are not tweets people reported. These are terms we censored. We considered these terms to be potential violations. Even if they were ultimately held not to violate, they were things we looked for.

Does what we looked for show bias? Well? What do you think? All of these terms were flagged for later review. We took a second look. You have appeals data with actual policy violations. This is proof they were live.”

What does SAMM mean?

Pictured: Photo of policy violation in Twitter dataset.

“SAM means state-affiliated media. It might strike your readers as interesting that we were censoring information from other states at all. Some might ask what right we had to do that- you know? What if TikTok censored stuff from our government? Our goal was to keep election misinformation out. Some nations have state media operations- China for instance- which controls what comes out. They don’t have freedom of speech. If we judged their media to be spreading misinformation, we might censor it.

This image should policy categories and examples of actions we actually annotated across the world.

Delegitimization of election process means anything like stolen elections, double voting or alleging voter fraud.

Macapa is a city in Brazil. SAMM FC is state media in Brazil. There are actions on both sides we annotated. I will say we annotated a fair amount of Brazilian National media (SAMM), and I honestly don’t know enough about their media to know if it was politically one way or another. We performed an action of some kind of photos of Brazilian presidential candidate Bolsanaro posing with Satan (I think?) as well as claims of polls being closed or moved.”

How often did you adapt models?

“We adapt typically once a month or so, but as often as weekly when elections were near.”

Once a term is flagged is it flagged forever?

“No. We rotated terms in and out fairly regularly.”

How does moderation work beyond the flagged words?

“It takes all these ngrams it detects and generates a score that it tries to understand based on context. If it thinks the ngrams are likely a violation in context it will flag it. Sometimes the machine could ban you algorithmically, sometimes it would go to human review. Sometimes both a machine and a human would review. But again, keep in mind because of the sheer scale of data you need some type of machine learning.”

Does the machine relearn?

“Yes, we tune it as listed above (monthly or weekly).”

Does the data cover foreign elections?

“Yes, wherever we had enough people we did. We covered elections in the US, UK, EU, Japan, Brazil, India, etc.”

What about abuse content? I don’t see words pertaining to abuse in the file.

“I have a file on violations (not just political violations) and how many times it was caught each month. Abuse is definitely the highest. That would all be under abuse. One of the files I sent in the content moderation folder has a category_c column. That’s the main category. You can see that most violations are in abuse in that file. Within abuse, there are many subcategories – racism, homophobia, misgendering, antisemitism. There were different kinds of abuse specializations. They all had names like ‘safety’ or whatever. Personal attacks on non-public figures were abuse. We weren’t looking for abuse. I was looking for political misinformation. Two separate areas.”

POLITICAL DIVERSITY AT TWITTER:

How many conservatives were on that team?

“I don’t know that we checked. It wasn’t considered. My boss was not. I’m a liberal. Full disclosure here. Keep in mind the 3 legs of the stool. The people who help us understand the policy- ingest the research and work to set the policy. A group they work with. Those of us writing the algorithms. I’m not an expert at US elections and I’m certainly not an expert at other international elections I also worked on so that’s why we had to have these people to understand the issues.

I am finding them, but the strategy and politics/ health and safety people are giving them. We are in meetings with them. They say, these are things we are finding. Rewriting algorithms- how good of a job does this do? We also work with human reviewers to make algorithms better at the same point.”

Did you have politically diverse leadership during your time at Twitter?

“These things come from the top down, and if you had a more diverse team setting policy you might find a different system- meaning the things you are looking for.”

ELON MUSK, TWITTER, & FUTURE SOCIAL MEDIA PREDICTIONS:

Do you think this algorithm will remain intact under Elon Musk?

“That’s a great question. He is very concerned about reducing costs. Have some datasets been taken down? I don’t know what he’s gone through on the backend to change. He doesn’t seem to have a super detailed grasp on what his people are doing. None of us who wrote this work there anymore.