
Social Media Algorithmic Misfire: Analyzing YouTube’s Notre Dame Algorithm Fail

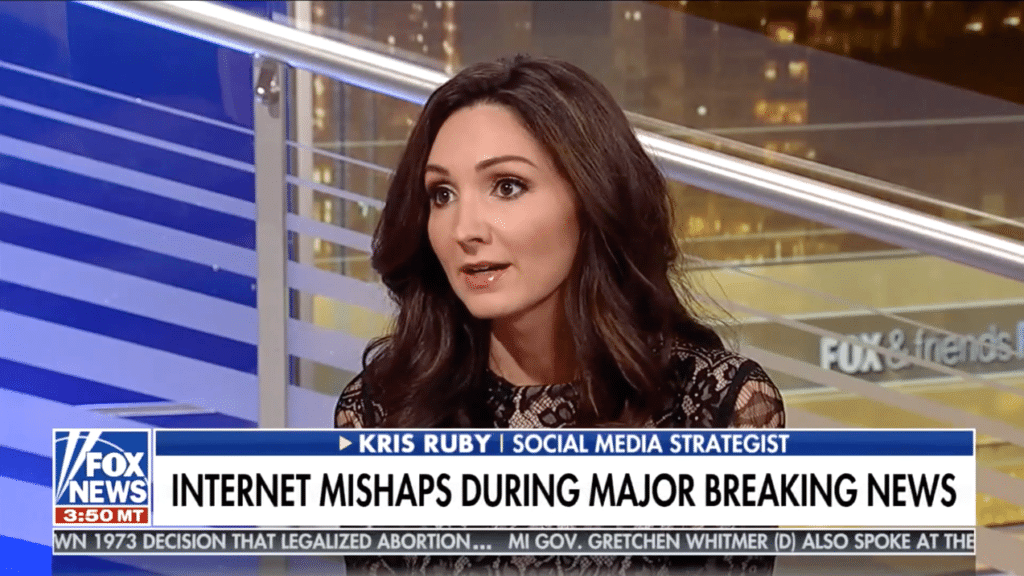

NYC Social Media Strategist Kris Ruby of Ruby Media Group was recently on Fox News discussing social media algorithms. YouTube linked the Notre Dame fire to 9/11 and Twitter wrongly flagged tweets for sensitive content. Why can’t social media platforms get it right? Watch to find out.

Social Media Algorithmic Mistakes:

Who is really to blame? Humans or machines?

Artificial Intelligence plays a critical role in moderating and filtering the content you see on social media. Social media expert Kris Ruby, CEO of Ruby Media Group, discussed why algorithms often make mistakes, and the solution to fix the growing issue.

The growth of AI-powered content moderation tools

AI systems are being developed to detect and filter abusive behavior on social media. These systems can potentially capture large amounts of hateful content before it ever reaches the public.

The benefit of deploying machine learning content moderation systems is that they can filter out content before you ever see it, mitigating risk. However, the risk of machine learning content systems can often outweigh the potential benefits. If bias is built into the system, the model can make mistakes and potentially filter content that was never hateful to begin with.

AI should not be seen as a sole solution for content moderation. Human oversight is essential when deploying machine learning systems at scale. Without a human in the loop, no one can course correct, and the system will forever be stuck in a cycle of recursive bias. Humans can fix these issues as they emerge. The best solution to social media content moderation is a hybrid approach with human and artificial intelligence combined.

READ: The Ruby Files

The Future of Social Media Content Moderation: Humans vs. Machines

Why do social media algorithms keep failing us during crises? It’s time to dig deeper into how tech giants manage content. When a tragedy strikes, people turn to social media for instant updates. What happens when algorithms flag critical information as sensitive content?

What happens when algorithms flag correct information as misinformation?

Explore why social media platforms struggle with content moderation, the role of algorithms, and the delicate balance between machine learning and human oversight.

Human Moderators vs. Algorithms:

Can humans ever match the efficiency needed to monitor the sheer volume of social media content?

Artificial intelligence makes errors, but can we afford these mistakes when it comes to real-time crisis information? What happens when AI makes mistakes that sway elections? How can this impact society at large?

These are the real issues we are grappling with, and they go beyond machine learning errors during a crisis. The bigger issue is not that machine learning gets it wrong during a crisis, but that it gets it wrong when there is no crisis. In other words, machine learning can be used to dictate what is right and wrong for society as a whole.

Past mistakes by social media platforms show a need for better solutions. How can we trust a system that repeatedly flags the wrong content?

Some of this is not a mistake. If a system is trained to flag something as misinformation, it will. That being said, machine learning systems are prone to algorithmic errors, especially during a crisis.

Past errors in social media content moderation highlight a pressing issue: are these really mistakes, or systemic failures?

The Role of AI in Modern Journalism

For journalists reporting on the scene of a crime, social media is a vital tool. But what happens when their real-time updates get flagged as sensitive content? The volume of videos and posts on platforms like YouTube makes content moderation a growing challenge for big tech companies.

When AI misfires, there is a direct collision of trial and error at the expense of users and journalists. How can social media platforms minimize these errors while providing real-time information?

What is the right balance between AI moderation and human oversight?

When tragedies unfold in real time, social media becomes our live news outlet. Ensuring that critical updates aren’t wrongly flagged is paramount.

In the world of algorithms and AI content moderation, the only constant is change.

What is a realistic solution to handle content moderation at scale?

The debate over whether to hire more moderators or refine algorithms is the crux of the issue.

Flagged posts during crises can mislead and cause confusion. How can social media platforms improve their content flagging systems?

Social media companies can override temporary filters or adjust their sensitive content filters during a crisis.

Unveiling the Truth Behind Social Media Content Moderation

Have you ever wondered why social media platforms seem to stumble and fall when it comes to effectively monitoring online content? The answer may lie in the very algorithms that power these platforms. Yet, those complex lines of code that are supposed to automate the process often end up creating chaos instead.

The challenge of moderating content on a massive scale, like the unending sea of videos on YouTube, is daunting. How can enough human moderators be hired to sift through every piece of content that gets uploaded? The answer is simple: they can’t.

The consequences of moderation mishaps go beyond a social media faux pas. During critical moments, like times of crisis or a breaking news event, accurate and timely information can be a matter of life and death. But when content gets censored, flagged, or labeled with sensitive content warnings, it’s akin to putting a gag on the truth, leaving citizens in the digital dark.

The million-dollar question for social media companies: Do we enhance human moderation or enhance AI?

What is the true cost of risking it all to algorithms that are still learning on the job?

The Struggles of Content Moderation on Social Media: Algorithms vs. Human Oversight

In the digital age, social media has become a dominant force, driving how we communicate, do business, and disseminate the news.

However, this ubiquity comes with a dark side: managing the vast amount of content generated every second on platforms like Facebook, YouTube, and Twitter. Content moderation isn’t merely about keeping explicit materials off our feeds; it’s about ensuring a balanced, informative, and safe online environment for billions of users.

But what happens when the algorithms are skewed and safety and toxicity labels are mislabeled? What happens when one side chooses to remove equilibrium and stack the algorithmic deck? What are the geopolitical consequences of AI-powered cyber warfare?

A war with natural language processing (NLP) would be silent: you would never even know it happened. Social media warfare is how the wars of the future will be fought and won, and everyone is a key player in it. From content moderators to data annotators and even individual users, everyone plays a role in producing the data that models are trained and retrained on. The real question is: by whom and for what purpose?

Social media platforms have repeatedly tripped over this monumental task, prompting an ongoing debate: should content moderation rely on algorithms or on human oversight?

In this interview, we delved deep into the intricacies of the digital moderation dilemma, exploring the strengths and limitations of both approaches and shedding light on some of the most recent notable failures in social media content moderation. Ruby Media Group Founder Kris Ruby seamlessly navigates the multifaceted issue that stands at the intersection of technology, human judgment, and societal impact.

The Content Moderation Dilemma

Content moderation is a crucial aspect of managing social media platforms, with the stated goal of maintaining safe and respectful online spaces. Unfortunately, the stated goal is far from the reality. Trust and safety teams have been known to go off course, based on the geopolitical whims of civil society organizations that influence data science. All of this controls the underlying mechanism of what you see and don’t see during breaking news events.

The system is fraught with algorithmic challenges that reveal the limitations of existing technological and human resources.

The rise of social media communication has made it imperative for big tech companies (and intelligence agencies) to monitor billions of posts, comments, images, and videos. The stated goal is to prevent the spread of harmful content, including illegal content such as child sexual exploitation (CSE) or animal abuse, violence, and gore. However, mass data collection under the guise of safety can also pose significant consumer privacy concerns. Who are we truly optimizing safety for? How are we even defining safety in the first place?

While machine learning algorithms powered by AI are instrumental in automating this process, they are far from infallible. Simultaneously, the scalability of human oversight presents its own set of complexities, making the task of content moderation a balancing act between technology and human intervention. This dilemma leaves social media companies grappling with how to best leverage both approaches to achieve effective, reliable, and consistent content moderation.

The Role of AI Algorithms in Content Moderation

Algorithms have become the backbone of content moderation on social media platforms due to their ability to rapidly process large quantities of data. The algorithms are designed using machine learning models trained on specific types of content, such as explicit language, graphic violence, and toxicity. By matching patterns in the data against an ontology that defines the content categories, algorithms identify and flag potential violations for further review.

The primary advantage of algorithmic review lies in speed and efficiency. Unlike human moderators, who are limited by the number of hours they can work and the volume of content they can review, algorithms can operate 24/7, sifting through millions of posts in real time. This helps catch harmful content before it can proliferate widely, minimizing its potential impact. Virality breakers also affect this process.

However, the efficacy of algorithmic moderation is not without flaws. One of the biggest challenges is the complexity of human language and context. Algorithms often struggle to grasp nuances like sarcasm, irony, and cultural differences, leading to false positives and false negatives. For example, an algorithm might flag a harmless joke as offensive or, conversely, miss a subtly coded message promoting violence. These shortcomings of automated moderation systems underscore the necessity for continuous improvement and fine-tuning of machine learning models to adapt to language patterns and the evolving types of content. When it comes to moderation, contextual clues and sentiment matter.
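To make the false positive and false negative problem concrete, here is a minimal Python sketch of a context-blind keyword filter. It is not how any platform actually moderates content; the blocklist, the sample posts, and the function names (naive_flag, confusion_counts) are all invented for illustration.

```python
import re

# Hypothetical blocklist, invented for this illustration only.
BLOCKLIST = {"kill", "attack"}


def naive_flag(post: str) -> bool:
    """Flag a post if any blocklisted word appears, ignoring all context."""
    words = set(re.findall(r"[a-z']+", post.lower()))
    return bool(words & BLOCKLIST)


# Each pair is (post text, whether a human reviewer would call it harmful).
# The labels are assumptions made for the sake of the example.
SAMPLES = [
    ("That comedian is going to kill it tonight", False),
    ("Heart attack symptoms everyone should know", False),
    ("I will attack anyone who shows up tomorrow", True),
    ("You know what happens to people like you", True),
]


def confusion_counts(items):
    """Count true/false positives and negatives for the naive filter."""
    counts = {"tp": 0, "fp": 0, "fn": 0, "tn": 0}
    for text, harmful in items:
        flagged = naive_flag(text)
        if flagged and harmful:
            counts["tp"] += 1
        elif flagged:
            counts["fp"] += 1  # harmless post wrongly flagged
        elif harmful:
            counts["fn"] += 1  # harmful post missed
        else:
            counts["tn"] += 1
    return counts


if __name__ == "__main__":
    print(confusion_counts(SAMPLES))
```

In this toy set, the figure of speech and the health post are both flagged, while the veiled threat with no blocklisted word slips through. Real systems use trained models rather than word lists, but the same two error types remain.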

The Challenges of Human Moderation at Scale

Employing human moderators to review content offers a level of context sensitivity and judgment that algorithms currently cannot match. Human moderators can understand sarcasm, cultural nuances, and complex language patterns, making them better suited to handle borderline content.

However, scaling human moderation to meet the demands of social media platforms is a Herculean task. On YouTube, users upload over 500 hours of video every minute, making it impossible to hire enough human moderators to review all content comprehensively. This workload can lead to high stress, burnout, and mental health issues among moderators who are frequently exposed to distressing content. These factors make it challenging to maintain a robust, effective human moderation team. Enter artificial intelligence.
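A rough back-of-the-envelope calculation shows why. The Python sketch below uses the 500 hours per minute figure cited above; the eight-hour shift and the idea that a reviewer watches footage once at normal speed are simplifying assumptions, not data from any platform.

```python
import math

UPLOAD_HOURS_PER_MINUTE = 500  # figure cited in the article
REVIEW_HOURS_PER_SHIFT = 8     # assumption: one reviewer watches 8 hours a day


def daily_upload_hours(upload_hours_per_minute: float) -> float:
    """Hours of video uploaded in one 24-hour day."""
    return upload_hours_per_minute * 60 * 24


def reviewers_needed(upload_hours_per_minute: float,
                     review_hours_per_shift: float) -> int:
    """Reviewers required each day to watch every upload once at 1x speed."""
    total = daily_upload_hours(upload_hours_per_minute)
    return math.ceil(total / review_hours_per_shift)


if __name__ == "__main__":
    print(daily_upload_hours(UPLOAD_HOURS_PER_MINUTE))
    print(reviewers_needed(UPLOAD_HOURS_PER_MINUTE, REVIEW_HOURS_PER_SHIFT))
```

Under these assumptions, a day of uploads comes to 720,000 hours of video, which would take 90,000 reviewers working full shifts every single day just to watch each video once, before accounting for breaks, second opinions, or appeals.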

Human moderation itself is prone to inconsistencies. Moderators may interpret guidelines differently, leading to a lack of uniformity in content moderation decisions. This variability can frustrate users and fuel perceptions of bias and unfair treatment, further complicating the content moderation landscape.

Impact of Content Moderation on Information Access

The role of content moderation extends beyond filtering inappropriate material; it significantly impacts the accessibility of information, particularly during crises. Accurate information dissemination is critical during emergencies, such as natural disasters, public health crises, and political upheavals. AI censorship can stifle important updates, affecting public awareness and response.

Content moderation plays a pivotal role in information dissemination, emphasizing the need for a precise, balanced approach that leverages both technology and human oversight.

The Future of Content Moderation: Algorithms vs. Human Oversight

The future of content moderation lies in a hybrid model that combines the strengths of algorithms paired with detailed human oversight. Neither approach is perfect alone, but together they can form a more holistic and effective content moderation system.
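One common way to picture a hybrid model is confidence-based routing: the machine handles the cases it is sure about and hands the uncertain ones to people. The Python sketch below is an illustration under stated assumptions, not any platform's actual pipeline. The score, the thresholds (0.2 and 0.95), the action names, and the crisis_mode switch are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    action: str  # "allow", "human_review", or "remove"
    reason: str


def route(score: float, crisis_mode: bool = False,
          low: float = 0.2, high: float = 0.95) -> Decision:
    """Route a post based on a model's estimated probability of a violation.

    score: hypothetical model output between 0 and 1.
    crisis_mode: assumed switch that stops automatic removals during
        breaking news, so a human sees the post first.
    """
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score < low:
        return Decision("allow", "model is confident the post is fine")
    if score >= high and not crisis_mode:
        return Decision("remove", "model is confident the post violates policy")
    return Decision("human_review", "uncertain, or automation paused for a crisis")


if __name__ == "__main__":
    for s in (0.05, 0.5, 0.99):
        print(s, route(s).action)
    print(0.99, route(0.99, crisis_mode=True).action)
```

The design choice here is that humans only see the middle band of uncertain posts, which keeps the review queue manageable, while the crisis switch reflects the idea that automated filters can be relaxed or overridden during a breaking news event.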

Advances in artificial intelligence and natural language processing are promising developments for improving algorithmic moderation. Future algorithms will likely become better at understanding context, cultural nuances, and subtle forms of harmful content, reducing the number of errors.

At the same time, the role of human moderators remains indispensable. They can handle complex, context-sensitive cases that algorithms might miss or misinterpret. Involving diverse teams of humans can help identify and rectify biases within the algorithms themselves, leading to more balanced and fair content moderation outcomes.

Social media platforms must also invest in the well-being and training of human moderators and data labelers to ensure they are equipped to handle the psychological demands of the job and stay updated on evolving moderation policies.

Collaboration between platforms, policymakers, and stakeholders will be crucial in developing effective content moderation frameworks. Transparency in moderation policies, accountability for decisions, and open dialogue with users can pave the way for more trust and efficacy in managing online content.

The struggle of social media content moderation illustrates the complexity of balancing machine learning algorithms with human oversight. Both methods have their unique strengths and limitations, and the path to effective moderation lies in leveraging their complementary capabilities.

As technology continues to evolve and our understanding of machine learning content moderation deepens, the goal remains to produce fewer errors.

In the digital age, social media platforms have emerged as the bastions of communication, connection, and information dissemination. However, these platforms continually grapple with the task of monitoring the content shared across their vast networks. This struggle, fraught with challenges, unveils a deeper, intricate web of technological and human intricacies that prompt us to question why these platforms repeatedly falter in policing online content effectively.

At the heart of this issue lie the algorithms, complex strands of machine learning models designed to sift through the enormous deluge of posts, tweets, and videos every second. These algorithms, although marvels of modern technology, are inherently imperfect, prone to errors that can have wide-reaching implications.

Delving deeper, these technical shortcomings reveal a stark reality: the sheer scale at which content must be moderated is staggering, especially on platforms like YouTube, where over 500 hours of video are uploaded every minute. The enormity of this task shines a light on a critical bottleneck: the limited capacity to employ enough human moderators. Employing a vast army of humans to review each piece of content is as impractical as it is Herculean, so companies tread a fine line, balancing human judgment and algorithmic efficiency.

The stakes are high, particularly when content moderation missteps occur during crises. The swift spread of critical information can be hampered, as flagged content results in barriers to important news and updates. Imagine a natural disaster where real-time updates could guide people to safety, or a health crisis where misinformation needs to be quashed swiftly to prevent panic. In such scenarios, the impact of moderation errors extends far beyond inconvenience, becoming a matter of public safety.

Faced with persistent moderation challenges, social media companies find themselves at a crossroads: should they augment their human oversight teams significantly, or throw their weight behind improving machine learning algorithms, despite their existing flaws? This decision is not straightforward. Each path carries its own set of complications and ethical considerations. More human moderators could mean better context-sensitive judgments but at the cost of efficiency and potential privacy issues. On the other hand, improving algorithms promises scale and speed but risks perpetuating the very errors they aim to eliminate.

The dilemma of effective content moderation on social media platforms underscores a pivotal technological and ethical quandary of our time. As big tech companies evolve and scale trust and safety with data science, they must navigate treacherous waters with a dual focus: refining their algorithms while not forsaking the nuanced understanding that only human moderators can provide. This ongoing balancing act is one of the central complexities social media platforms must reconcile to avoid a regulatory crackdown.

TRANSCRIPT: Fox News: Social Media Expert Kris Ruby on why Social Media Algorithms Misfire

This is not the first time social media has failed to correctly monitor online content. The question is, why can’t these tech giants get it right?

Social media strategist Kristen Ruby, CEO of Ruby Media Group, joins us now to explain. We were just talking about this in the break. Neither of us think that this was intentionally done, but the question is, why can’t this be done right?

What is the problem when there’s a crisis happening, why does it seem like it’s a flub on social media all the time?

Kris Ruby: The real problem here has to do with the algorithm. The algorithm is done with machine learning, so it’s not perfect. With artificial intelligence misfiring, which is what we’ve seen here, comes trial and error, and you can’t have trial without the error. And that’s what happened here.

There is a statement from YouTube about what happened, and it says these panels are triggered algorithmically and systems sometimes make the wrong call. We are disabling these panels for live streams related to the fire. That’s their statement. That’s one thing. Let’s take a look at some of these past mistakes social media companies, and talk about that. March 2019, Facebook mistakes Dan Scavino for a bot. And by the way, he is the Director of Social Media for the President. February of 2018, YouTube, Facebook promote Parkland shooting conspiracy videos. Then in November 2017, Twitter shuts down The New York Times Twitter account by mistake.

Now, there are some of these things that people question whether or not they’re done by mistake. I actually think in some of these cases, these really are legitimate mistakes. This is a case of robots and machines learning on the job. They haven’t gotten it right yet, and they’re going to keep misfiring. They’re going to keep making mistakes.

But is that okay?

Kris Ruby: It’s not okay. But the other option is they could hire more content moderators, but they can’t flag every single video. They can’t hire the amount of content moderators that they would need for the volume of videos that they have on YouTube. It’s just unrealistic.

Part of the issue I take with this is when you have something going on, journalists as an example. I’ll show you an example of what happened to me on Twitter. We are a source of information, especially people who are out there at the scene of some of these tragic events. I posted this tweet, I said, this is awful, with a heartbreak emoji with a link to the fire that was going on in Paris just this week, and it was flagged for sensitive content. People were sending me messages like, why can’t I see your tweet? If there’s something going on, if they’re not in front of a TV, they turn on their phone and they go on social media to get information. And if that content is being flagged and people can’t see it, that, to me, is a big problem. So how do we change that?

Kris Ruby: It has to change within the social media companies. It’s not something, unfortunately, that on an individual level you’re able to change. It has to do with, what are Twitter’s rules around it? What are YouTube’s rules? What are Facebook’s rules? The same thing with Facebook and their ads all the time. They get it wrong and then you click, why am I seeing this ad? And it says, well, because you said you were interested in XYZ, but they don’t have the actual targeting that specific where they’re going to get it right every time.

What would your message be to these social media companies?

Kris Ruby: My message would be either decide what you want to do, do you want to hire more moderators to get it right? I don’t think that’s realistic. Or do you want to keep teaching the machines and having them learn on the job?

Kris Ruby, we’ll see if it changes. Thank you very much. Appreciate your time.

For more updates on technology and social media, follow us on social media and subscribe to Ruby Media Group on YouTube.

Related Articles:

Understanding Social Media Algorithms

READ: Learn more about Machine Learning.

KRIS RUBY is the CEO of Ruby Media Group, an award-winning public relations and media relations agency in Westchester County, New York. Kris Ruby has more than 15 years of experience in the Media industry. She is a sought-after media relations strategist, content creator and public relations consultant. Kris Ruby is also a national television commentator and political pundit and she has appeared on national TV programs over 200 times covering big tech bias, politics and social media. She is a trusted media source and frequent on-air commentator on social media, tech trends and crisis communications and frequently speaks on FOX News and other TV networks. She has been featured as a published author in OBSERVER, ADWEEK, and countless other industry publications. Her research on brand activism and cancel culture is widely distributed and referenced. She graduated from Boston University’s College of Communication with a major in public relations and is a founding member of The Young Entrepreneurs Council. She is also the host of The Kris Ruby Podcast Show, a show focusing on the politics of big tech and the social media industry. Kris is focused on PR for SEO and leveraging content marketing strategies to help clients get the most out of their media coverage.