
WATCH: Social media expert Kris Ruby joins ‘FOX News Live’ to discuss the rise of antisemitism on the social media platform TikTok and its impact on Jewish content creators.

Social Media Warfare: Surviving the Israel-Hamas War on TikTok

Fox News: Antisemitism Surges on TikTok

“Every single platform is experiencing a rise in hate speech,” said Kris Ruby.

Social Media Expert Kristen Ruby, CEO of Ruby Media Group, joined Fox News Live to discuss the rise in antisemitism on social media platforms.

We have previously covered social media harassment and the acceleration of an increasingly hostile digital environment. But in recent weeks, antisemitism on social media has gotten exponentially worse. For the first time in history, Internet users have enlisted in a war they never signed up for and they play a pivotal role in the digital battlefield.

The Rise of Antisemitism on Social Media Platforms

AI is the weapon. The battlefield is your newsfeed.

Social media platforms have become a hotbed for the alarming rise of antisemitism. X, TikTok, and other online platforms provide fertile ground for the spread of hateful rhetoric and coordinated online hate attacks targeting the Jewish community. This disturbing trend has significant implications for the well-being and safety of individuals and society as a whole.

Antisemitism on social media has become a troubling phenomenon in the United States. Celebrities, politicians, and other high-profile figures use social media platforms like X and TikTok to spread antisemitic messages and amplify bad actors. Jews face daily instances of online harassment and discrimination on the digital streets of social media.

The rise of antisemitism on social media has reached such alarming levels that experts have called it the “high tide of American antisemitism.” This trend is indicative of a larger problem: the normalization of antisemitism.

When antisemitism runs rampant on social media, it sends the message that antisemitism is acceptable. This creates an ecosystem for real-world harm, echoing what people experience online. Make no mistake; it is not acceptable.

The art of social media warfare

Users now have a front-row seat to war that would otherwise have been hidden from view to anyone who had not served in the armed forces.

Military grade social media psychological operations are rampant. The decrease in content moderation and the shift towards a fully automated moderation landscape have strained not only the platforms, but also the users.

With less oversight comes less security. While some have cheered on the firing of threat disruption and disinformation teams, others are feeling the effects and consequences of those actions.

In many respects, we have been shielded from what full blown war looks like online. There were always safety guardrails built into social media platforms that protected users.

Sometimes, the guardrails went too far and outright censored users. But we have never actually lived through a time when almost all guardrails have been removed. We are experiencing the societal decline of what that looks and feels like on social media, particularly on X.

When free speech turns into a free for all: the perils of no moderation

The information environment is actively being manipulated by enemies of the United States in an attempt to sow division.

These coordinated social media campaigns (clandestine operations) can directly undermine national security and dilute the credibility of critical information.

Friend or foe?

Even more concerning, these enemies can pose as allies, while concealing their true intentions.

These people do not work alone. And when you get on their bad side, you feel the wrath. Except this time, you are fighting robots instead of humans.

Artificial Intelligence is rapidly changing the digital architecture and infrastructure of information control.

With the rise of AI bot farms, you can have an on-demand digital army at your disposal. Some of the people you are fighting with online may not even be real – they could be AI.

Surviving the age of social media-enabled information warfare

When it comes to narrative control, the loudest voice wins. Artificial amplification fuels this.

Social Media Psyops can be used to alter social behavior. When behavior is altered, users are rewarded with monetization, followers, likes, and digital forms of currency.

The veil of Internet popularity rewards recognition over facts. As a result of this, we are changing human behavior with gamification. My fear is that this is a giant AI social media experiment gone wrong.

Social Media platforms created a market centered on selling algorithmic attention. However, this type of selfie fueled attention is directly at odds with cognitive thinking, reasoning, and strategic planning.

We are rewarding behavior that is directly opposed to our best interest. No one has stopped to really consider the long-term consequences of this on civilization.

The Evolution of 21st Century Information Warfare

“Historically, the United States was quick to disband and forget the value of information on the battlefield. After successful Information Warfare campaigns, senior leaders often disbanded the group only to reactivate it again after losing positional advantage. Today’s positional disadvantage is compounded and complicated by the actor’s ability to use social media to influence millions of users across the globe instantly with a low barrier to entry. The speed of the message may be different, but lessons learned during World War II are still applicable today.”

In the U.S. government, psychological operations remain under U.S. Special Operations Command (USSOCOM).

“In 21st Century warfare, everyone is a participant. In its current structure and within a contested environment, the overall US narrative faces bureaucratic barriers unless supported and directed by a single Information Warfare organization. Finally, senior leaders need to reassess the Goldwater-Nichols Act and the Unified Command Plan to determine whether the current command structure meets the needs of the future fight.

The United States needs a consistent Information Warfare strategy on how to navigate the new terrain, but the information and social battlespace also allows the adversary freedom of navigation if left uncontested.

The same Information Warfare lessons learned in WWII and the Cold War need to be relearned again. A failure to anticipate the shifting global information environment left the US ill-prepared to combat technology-savvy extremists in the Middle East.

In War in 140 Characters, David Patrikarakos cites the rise of Abu Musab al-Zarqawi to become the “Bin Laden of the Internet” as emblematic of the weaponization of social media by nonstate actors. Watts elaborates that Zarqawi’s jihadist network, al Qaeda in Iraq (AQI), weaponized YouTube by creating content depicting their successes in killing US soldiers and civilians to a musical soundtrack. Zarqawi’s efforts demonstrated how social media can push power down to an individual and away from the institution to create a movement.

By 2006, AQI rebranded itself as the Islamic State of Iraq with its own Information Warfare and media arm tasked with professionalizing the development of this content with a sticky message of fear, action, and blood to recruit and shape public opinion. His Information Warfare command structure allowed the U.S. to execute forward into the ISIS network to find, fix, and target its key nodes to degrade its command and control.”

Source: Connect to Divide: Social Media in 21st Century Warfare, Major Eddy Gutierrez

Coordinated social media influence operations powered by AI: Evaluating the new threat landscape

The needle has moved from politicians and lobbyists directly to the people.

Networks of accounts engage in coordinated efforts to sway political discourse. But what has changed is who the accounts target and the technological arsenal used for precision grade targeting.

Political actors have always tried to manipulate public opinion using coordinated influence campaigns on social media and counterintelligence operations. What has changed is the scale and velocity at which these attacks are waged daily against innocent bystanders. The battle to influence public opinion has moved from Washington to voters, and AI is the weapon that fuels the digital arsenal to accelerate information warfare at scale.

Social media networks struggle with the re-emergence of previously disrupted networks attempting to re-establish their presence. AI enables bad actors to re-appear as quickly as they are taken down.

Disrupting networks of inauthentic accounts is a full-time job for threat disruption directors at social media companies. These coordinated campaigns originate from all over the world including but not limited to China, Germany, Iran, Russia, and yes, even Israel.

AI and Covert Influence Operations

AI influence campaigns are waged both domestically and internationally. Most recently, OpenAI released a report stating they disrupted a covert influence campaign originating in Israel. The campaign allegedly used fake AI generated social media accounts to influence public opinion and US lawmakers. According to the report, OpenAI claimed that the Israeli government allegedly used AI to target the U.S. government.

TikTok disrupted more than a dozen influence campaigns originating in China. That being said, TikTok is far from the only platform that governments use to try to shape political opinion.

Google recently reported that it terminated 1,341 YouTube channels and 48 Blogger blogs as part of its ongoing investigation into coordinated influence operations linked to the People’s Republic of China (PRC).

- Network operations: Where did the network originate from?

- Key Influencers: Who are the key individuals behind the network?

- Inauthentic Account activity: How many inauthentic accounts were created in the covert influence operation to sway public opinion?

- Fake Accounts: Did the operation deploy fake accounts generated with artificial intelligence?

- Goals: What is the primary purpose of the influence operation? What was the intended outcome? What behavior or policy did someone try to change?

Understanding the Threat Landscape

Generative AI technology is currently being deployed to create mass chaos before an upcoming presidential election in the United States. With little oversight, this emerging technology can be exploited by adversaries and threat actors to wage war against the United States. The problem is expansive and far surpasses any threat we have ever dealt with in the digital realm. It encompasses all forms of media.

From text to images and synthetic video manipulation, fake media will flood the zone of our current information ecosystem. Make no mistake: this will have serious and long-term consequences because we were never adequately prepared for this threat before the military grade AI weapons were launched at a consumer level.

AI-generated images can sway voter preferences on critical policy items, all at a fraction of the cost of traditional influence operatives.

Generative AI tools can be deployed to create bot farms, AI-generated rent-a-riots, manufactured crises, division, and chaos. This AI-generated chaos can change algorithmic recommendations, shift trending news, and warp the “For You” tab with little to no human intervention.

In the past, humans needed to be heavily involved in this process, and it was expensive to do. Now, artificial intelligence can do the same job for less, and when it comes time to litigate, who if anyone will be held responsible for the rapid deployment of AI generated influence operations on steroids? The answer right now is no one.

Antisemitism Exposed: Unmasking Antisemitism on Social Media

The art of creating confusion and chaos

How bad actors cause confusion to undermine public trust

Bad actors can exploit the open exchange of information to run psychological operations on platform users. Social media psyops involve the process of eliciting recruits to develop key messages to manipulate public opinion. The goal is to target adversaries by gaining rapid fire attention and gaining followers to give the appearance of credibility and momentum.

We have seen this play out numerous times on X since Elon Musk acquired the company. This was extremely apparent on Twitter Spaces when foreign influencers were leading American political discussions. This would be akin to me leading political discourse on Saudi Arabia, despite never having lived there and knowing virtually nothing about the political system in that region. That is why it is so dangerous.

The issue is not who the person is, but rather why they are engaged in infiltrating the political discussion.

On Twitter Spaces, numerous Internet personalities rose to fame fast, most of whom I had never heard of. They were new to the scene and climbed the ranks of Internet stardom. But who are these people? No one knows. However, they appear to have one thing in common: secret identities and an affinity for antisemitic sentiment. It is also important to note that many of the actors involved in this are unregistered foreign agents. This isn’t just social media fun and games – it is a violation of federal law. Many of the people involved in this fail to realize this.

Do not underestimate the role of domestic players to undermine democracy

America’s adversaries seek to dominate the social media industry by influencing and controlling our most important communication channels. But what happens when the adversary comes from within?

People who are antisemitic don’t lead with it first. In the case of Twitter Spaces, the hosts in question spent months building up an audience. They faked credentials, called themselves citizen journalists, and started hobnobbing with the highest level of elected officials in America. So of course, it came as a huge surprise when they were unmasked as being actual Nazis. No, I’m not talking about the kind the media writes about. I am talking about actual leaders in the alt right movement dating back to 2016. Whether the alt right was real or a government exercise of informants, we will never know. But the point remains the same.

Online words lead to offline consequences

Unfortunately, the biggest perpetrators of hate online often will never be prosecuted for it. Without a tangible threat of violence, law enforcement looks the other way. But the most dangerous part is that bad actors are using the Internet to radicalize others to carry out their dirty work. Some of this “dirty work” was never part of the intended mission. They have gone rogue. Simply put, they don’t care if you get thrown in prison for executing their plan, as long as they don’t.

Unmasking Antisemitism: A Frame Games Case Study

The use of anonymous identities online enables this system of unaccountability to perpetuate. You can continue to reinvent yourself every few years and scrub your entire online existence. In the case of Frame Games, he even got a job in the State Department. How is it that someone with the most hateful views could infiltrate the White House, and no one noticed? Everyone around Frame Games got called out for antisemitism – except him. Why? Because anonymity protected him. It shielded him from real-world consequences of his digital actions.

While his followers showed up at violent events throughout the country, Mr. Benz kept getting promoted. He faced virtually no consequences, while his followers faced serious consequences. Some are now in jail, while others allegedly committed suicide. Years later, they are still searching for their long-lost leader online. The truth is, it doesn’t matter if Benz intended for them to show up to those events or not. That was the outcome. We must start holding people accountable for the outcome of their digital actions, not only their performative actions online.

Those who advocate for anonymity advocate for a system of inequality. They are advocating for a system that protects the leaders of these movements and hurts those they radicalize.

Furthermore, the false veil of anonymity is often associated with informants with ties to the U.S. government. This means you have no idea who you are talking to and what their true motivation is.

In a recent video on the unmasking of Frame Game Radio, Internet personality Luke Ford said, “These are the dangers of posting anonymously online. You don’t use the same care and discretion that you would if you were operating under your own real name. Anonymity online does as many good things as it does bad things. Good people can use anonymity online to do good things. People predisposed to the bad will probably use anonymity online to do bad things, but it does create all sorts of dangerous temptations. It can unmoor a person from his foundations.” Ford also stated that Frame Games does not optimize for truth.

The Weaponization of Social Media by non-state actors on Twitter Spaces

From tweets to spaces: National instruments of power and foreign weaponization of social media.

On Twitter Spaces, some of the most prominent hosts have let the mask slip. They are full blown antisemitic now, even posting swastikas on Twitter, and then complaining about free speech. They seem to lack a core understanding that X is a global company and hate speech laws differ throughout the world. Musk has led them to believe that their speech will be protected anywhere and everywhere, which is simply not true. To follow the law, certain restrictions are placed on speech. Perhaps not in America, but certainly in Germany when it comes to topics like Holocaust denial.

It is extremely concerning that we are being led astray by these great pretenders. It is one thing to say your beliefs out in the open. It is quite another to deceive your audience for months, if not years, only to reveal your true beliefs down the road.

This is dangerous. It is deceitful. And most importantly, it harms the people who follow you.

Most of the time, these “reveals” are not even by choice. Instead, they come in the form of someone else calling the person out, which is not exactly a true apology or admission. Rather, it is reactive, not proactive.

People who engage in this type of nefarious cyber activity are selfish. They do not consider the consequences of their actions on those around them. Consider, for example, the small business owner who amplified them and will now have to face the consequences of forever being associated with a secret real-life antisemite.

When you don’t tell people who you are or what you believe in, you remove the option of informed consent. People have the right to consent to information. When you hide information, people around you cannot make informed choices about their digital proximity to you. While you may walk away unscathed, those around you will not.

Understanding the historical context and digital manifestations of antisemitism means not ignoring the role anonymous accounts play in fueling the system. You can’t dismantle a system of hate if you don’t understand the system to begin with.

Anonymity fuels the system of deception. The root of the problem is that this part of the system was essentially ignored during the formative years of the “alt right” movement on social media. The seeds of antisemitism were planted by many of these anonymous accounts, and we are now seeing full grown trees emerge from those seeds years later. Musk has also brought many of them back on the platform, leading people to be exposed to content they were typically shielded from.

Collectively, we need to understand who we are talking to. We can no longer trust that people are who they say they are. These bad actors know that they will not get attention and grow followers if they lead with tropes like “Jews control the media.” So instead, they will say the exact opposite.

They will say and do whatever they need to do to gain power, influence, and control. These are the same people who will try to educate you on Internet censorship. The person who hides their beliefs is the greatest censor of all.

Unfortunately, we are seeing a slew of contradictory statements, which is further evidence of the domestic psychological operation war that is being waged against American citizens.

These statements leave Americans in a perpetual state of confusion – not knowing who or what to believe.

Here are a few examples:

- “I am Jewish so I can’t be antisemitic.” This is false.

- “It’s free speech. I can say whatever I want.” Social media platforms are global. The First Amendment does not cover all speech in other parts of the world. Germany is a perfect example of that.

In a time of chaos, I can’t think of what is worse: the person who says these comments, the person who believes them, or the person who covers for them. I have lost respect for those who carry the water of bad actors. Ultimately, they have shown themselves to lack character, integrity, and any semblance of a moral compass.

Influence is a responsibility. It is not a right. It is a privilege. When you use your influence to look the other way, what you are really saying is that it is okay to deceive people. Even if you agree with what the person said, you can’t in good conscience agree with the deceptive tactics deployed.

READ: Identifying Influencers for Psyops

Those who deceive others will deceive you. You are not immune. We live in an age where tribalism trumps morality. It reminds me of high school when you see someone get beat up, and you do nothing. Except, you are not in high school anymore. You are a grown adult. You have a choice today. You can stand on your own and say this is not okay, or you can watch someone get beat up and cheer the bully on. I know what choice I would make. I made it. Have you?

You have a right to be heard. But you do not have a right to be amplified. Unfortunately, those who amplify bad actors are complicit in online crimes. At a time when you could have done something, you instead chose to do nothing.

The concept of a social reputation score or credit score is real. AI is keeping track. AI will remember those who spread hate, and those who ultimately choose to end it.

People are immune to the terms disinformation and malinformation. Americans are trained to believe that when these words are used, it is most likely to censor truthful information. After dealing with this latest op, I am not so sure that is true anymore.

Deception is a form of disinformation. Censoring truth is a form of disinformation. Revealing half-truths and concealing the truth is a form of disinformation. You have a right to know where your information is coming from. You also have a right to know who is funding it.

Information means access to all information – not only what you deem to be relevant. When you withhold information from people, you remove informed consent. We must be willing to speak up when we see inconsistencies.

Like it or not, you are in a digital battlefield. The wars of the future will be fought and won with data. Your data and digital likes are ammunition. Every like accumulates, and over time, likes can be the difference between silence and amplification.

Likes can be the difference between victory and defeat.

Who you choose to amplify can cause us to win or lose the most important information battles of our lifetime. You have a responsibility to know who you are supporting. Your likes matter just as much if not more than your vote. I urge you to consider who you like and support.

Social Media Due Diligence

Should monetization be tied to influence if those who are influencing you don’t even fully understand who is influencing them?

Today, there is increased pushback against the existing influencer culture not only through deinfluencers, but from social media users at large. Many social media influencers do not disclose where their messaging comes from or who funds their media operations. Why does this happen? Because many prominent content creators do not conduct proper due diligence when someone offers to amplify their message.

The issue isn’t merely adding #ad. It’s much larger than that.

You need to know who is funding you, where that funding comes from, and how that impacts the media ecosystem. As a content creator, you have a responsibility to your audience to vet those around you, including who funds you. When something goes wrong, you can’t pretend you didn’t know. The real victim in this situation is your audience – not you.

In the political influencer sphere, failure to conduct due diligence has profound consequences. Influencers are not merely shilling products; they are shifting the hearts and minds of voters with psychological warfare and political messaging that is often never disclosed.

The pushback against influencer culture started on TikTok with the deinfluencer movement, but has since migrated to X with pushback towards political influencers who fail to disclose the true source of their funding or algorithmic amplification.

Social media influencers play a critical role in narrative control for elections worldwide. On X, users are pushing back against the rise of the alternative media creator class. Many X users no longer trust the chosen creators who have quickly amassed millions of followers and push messages in unison. When platform founders further amplify messages algorithmically, millions of users wonder how organic anything truly is anymore.

Social media is a digital battleground

Do you really know who or what you are supporting?

READ: U.S. Special Ops wants to use Deepfakes for Psyops

The dangers of ignoring demonstrated hostility in the information space

Despite the demonstration of online rhetoric leading to real world violence, the United States has failed to take emerging threats of real world violence seriously. These threats take place in social audio rooms every day around the world. Yet, people are still talking about Charlottesville like that is the greatest threat. And because of that, we are going to miss a real attack on our nation, because the methodology used to study how groups form, where they congregate, and what this means for the future of digital terrorism is so antiquated.

READ: The Power of Persuasion & Army PSYOP Control

READ: Justice Department Disrupts Covert Russian Government-Sponsored Foreign Malign Influence Operation

There is genuine pushback against the existing influencer culture not only through deinfluencers, but from social media users at large. The pushback against influencer culture started on TikTok with the deinfluencer movement, but has moved to X with pushback towards political influencers who fail to disclose the true source of their funding or algorithmic amplification.

Social media influencers play a critical role in narrative control for elections worldwide. On X, users are pushing back against the rise of the alternative media creator class. Many Americans no longer trust the chosen creators who have quickly amassed millions of followers and push messages in unison. Musk further amplifies these messages algorithmically, leading one to wonder how organic anything truly is on the platform anymore.

Reacting to evolving threats in real-time

Social media platforms still struggle to remove bot accounts, even when they are reported. With the rise of AI, this problem will grow exponentially.

When it comes to analyzing evolving threats, the number of followers in a network matters. These accounts often grow very fast using inauthentic methods of account growth, including but not limited to AI bot created activity.

To strengthen national security, we must become better at identifying fake AI created bot accounts or deepfakes. Unfortunately, the accounts are created faster than they can be removed, and verification has done nothing to stop the problem. Most importantly, we must understand who is behind the bot armies and who controls them.

- Who are they fighting for or against?

- What mission have they been deployed for?

- Who is the commander?

- Who are the ultimate civilian casualties?

Make no mistake: there will be casualties. The casualty manifestations in digital warfare look different. It can mean PTSD, trauma, blackmail, or being inadvertently tied to intelligence agencies. Essentially, you can be dragged into a proxy war you never even knew existed.

“Russia integrated cyber warfare, hostile social manipulation (e.g., media outlets and social media), and electronic warfare by gathering indications and warnings on soldiers positioning by sending fake social media posts to their family’s page. Russia exploited Ukrainian soldiers’ reliance on mobile technology to adjust target sets designed to create fear, confusion, and chaos.”

These same tactics are being waged against social media users domestically. If you are living in a haze of fear, uncertainty and doubt, it is because you are smack in the middle of a digital information war. Content is the currency.

READ: DHS Investigating the Nexus between Generative Artificial Intelligence and Foreign Malign Influence

Will the real antisemite please stand up?

We have created a surveillance state, but we are surveilling the wrong people. Labeling everyone as an extremist and a Nazi has caused a collective societal failure to identify those who actually are.

When everyone is a Nazi, no one is a Nazi. When everyone is a white nationalist, no one is a white nationalist.

When you dilute the meaning of words, you dilute the significance behind those labels. We need to stop calling everyone these terms and start reserving the terms for those most deserving of the label.

There were many bad actors who were arguably infinitely more dangerous than the most prominent media face of the movement, but researchers looked the other way. Why? Because they chose personal targets instead of the real target: hate. They collectively ignored micro-influencers and the role they played in creating antisemitic movements that spread. Some of the largest evangelists of this type of rhetoric are those who were radicalized by an anonymous account who went on to work in The White House. Why was this ignored? Did you think ten thousand followers was too small to track? Do you think ten thousand people listening to this content aren’t worthy of your time? Or was the real issue that Frame Games didn’t have a face and name like Spencer did?

Did you make the wrong person the face of this movement simply because he showed his face while others didn’t? You failed to detect actual antisemitic hate because anonymity mattered less to you than having a person you could attach to the movement. By all accounts, after listening to hundreds of hours of antisemitic content, Frame Games spewed more antisemitic content than many other notable alt right leaders. So, I say again, why was this missed? Why was this ignored?

Why did Frame Games go on to work in The White House, while Spencer was seen as the face of hate? I have never heard Spencer say half of the things that Frame Games said. However, this may also be because academic researchers had Spencer’s videos removed from the Internet.

How can I draw accurate conclusions if you cook the books and delete data? This is a real problem.

When you give a pass to one, what you are really saying is, it’s not antisemitism you are fighting, but instead, who you perceive to be the enemy.

You perceived wrong. You got it wrong. Until you can come to terms with that and admit that, people are less likely to trust you to accurately cover how movements truly grow.

You are ignoring the digital Charlottesville that takes place on social audio every single night.

Words can have a real impact. Online violence can lead to real life violence.

A man named Paul Kessler was just killed for being Jewish. All he did was wave a flag. His crime? Being Jewish. And yet, all of the people who are still talking about the death of Heather Heyer at Charlottesville are radio silent on this. Why does Kessler’s death matter less than Heyer’s? Why are you refusing to look at actual antisemitic hate? Why do you only investigate the hate you deem worthy of your time, while outright ignoring other forms of hate?

Instead, academic researchers choose to focus on who they want to be the face of antisemitism- at the expense of who actually is- simply because they can’t put a face to the hate. This is morally reprehensible and has caused mass destruction and damage to our nation.

Your refusal to acknowledge it or even come to terms with it makes it infinitely worse. It tells me you will do it again, and miss it again. It tells me that your collective pride matters more to you than sincerely apologizing for your blatant oversight. You track antisemites for a living, but somehow Frame Games never made it on your list.

If you don’t understand how people congregate online and what motivates them, then there is no ability to course correct.

It is the ultimate level of arrogance to think that certain views are not worth engaging with. Researchers felt Frame Games was clearly not worth their time, and because of this, they blew off one of the largest leaders of Jewish hatred in America. The real problem is that researchers ignore micro influencers when it comes to the online threat environment. This is a mistake that has real world consequences.

Furthermore, they block and cancel people for engaging with the very people they should be speaking with.

How can you investigate someone you blocked?

How can you understand who you are researching if you refuse to engage with them?

How can you make the world a better place if you write off the subject of your research?

That is not research. It is activism. And it makes you no better than Frame Game Radio.

If everyone is doing activism, who is actually doing real research?

The answer: no one.

How is it that I uncovered this- someone who wasn’t even looking for it and doesn’t track it- but you didn’t? This isn’t my beat – it is yours.

You failed your readers. You failed the audience. And you failed the nation because your cognitive bias of antisemitism led you to ignore actual antisemitism simply because you didn’t know who was behind the account and you didn’t want to do the work to figure it out. It was easier to blame Spencer and his crew than to look at the real people who were behind this group. Mr. Spencer is not Jewish. Frame Games is.

When he spewed antisemitism, his words held infinitely more value than Richard’s ever could have. Instead of adding me to alt right nazi lists on Bluesky, you could have been adding Frame Games. But your own bias leads you to add everyone with a Republican label to lists, instead of doing the work to find out who really belongs on the list in the first place.

Understanding the historical timeline of antisemitism in The United States helps us confront and combat the current wave of antisemitism.

By studying the past, we can work towards creating a more tolerant society.

By ignoring the past, we can guarantee that the future will be worse.

Why Frame Game Radio Matters

Digital Deception

Elon Musk now has operational control of one of the most important news platforms in the world. He also has collective ownership and direct involvement with critical military and defense units.

The more he removes researchers’ access to data, the less we are able to really understand the elements of war being waged against us. At the beginning of this research journey, I fundamentally thought the exact opposite was true. I believed researchers should not have access to this data, and that the data was being used against us. But I have now come to realize that the data will be used against us regardless.

That is to say, it is better to ultimately have more eyes on it than fewer.

A real practitioner who is committed to truth is able to adapt their thinking when new evidence is presented. A chaos agent is not. They are not committed to the truth; they are only committed to the outcome.

Currently, Musk and his associates are the only ones that have eyes on that data unless you can pay enterprise rates. That means all data will be filtered through a lens. It is hard to know if one can even trust the data if the source of the data is cut off. That was always the real end play here. Control, domination, and removing access to the source of data. This has taken us further away from transparency and closer towards the dark.

My initial perspective on this may have been flawed, and that is because I was reading information on X that was filtered through the frame of Frame Games. Coming full circle and on the other side, it is imperative that we do not allow information to be framed with a political agenda.

Censorship exists, but when you frame the narrative around it, you hurt the cause and set back the foundation of the entire movement. This is the problem with Frame Games.

He censors information and believes his view is the only one that merits investigation. Censorship is larger than any one person. When you censor people’s access to our system of justice, and pick and choose what evidence is worthy of investigation and only use your own, you are no different than those you claim are censoring others. You become the greatest censor of all.

Censorship is not only about politics; it is also about the control of information. If you believe your evidence is the only evidence that matters, you are censoring the entire control of information.

They say sunlight is the ultimate disinfectant, but you are the cloud that blocks the sun. Your failure to see this makes you an extreme threat to national security.

Like the researchers who deem *you* not worthy of investigation because your influence is too small, you deem *others* not worthy of investigation because you think they are not worth the evidentiary spotlight- which apparently only has room for your evidence. You are doing the same thing the academic researchers are.

In the end, both of you are the same: activists. You frame facts- you don’t present facts. You are not a credible source of information when you have a hidden agenda. The same way they want to eradicate you from the Internet, you want to eradicate them from the Internet too. It is disingenuous to leave this part out.

In the end, both of you are wrong. Neither group is focused on the actual issue of AI censorship. Instead, they are wasting congressional resources taking their political opponents out. This is intellectually dishonest and does nothing to solve the problem of censorship or move it forward. All it does is move *you* forward to get more funding.

Your mission is not our mission. When you hijack the mission, you are no longer part of the mission. Good actors will not tolerate deceit.

The battle to control information

I don’t have an intended outcome. Even after reading this article, I am not asking you to do anything. There is no movement to join. No real call to action. I am also not asking you to cancel Frame Games or even unfollow him. In fact, I am asking you to do nothing except think and use your brain. I am not trying to get you to do anything. My goal is to bring information to you and let you decide what you want to do with it. That is vastly different from activism or psychological operations. I am not trying to change your opinion. I meet people where they are, provide facts, and let them decide.

When people disguise facts as narratives, they do not have your best interest in mind. They want you to do something. They do not want you to understand something. They have a mission. You are the target in the deployment and the goal is to get you to change your thinking.

I don’t want you to change your thinking. I want you to expand your thinking to think for yourself. This is fundamentally different than someone who is executing a domestic psychological operation under the guise of “truth telling” or “blowing the whistle.”

It’s important to know this so that the same thing doesn’t happen to you. People who fall victim to these operations lose years of their life. Their thinking becomes warped. Their sense of reality is flawed. They lose trust and faith in humanity. They report feeling betrayed, broken, and traumatized.

The earlier you can get out and reclaim control of your mind – the better.

People who engage in these clandestine operations want to control how you think about issues that matter to them. They target people with a strong cognitive bias who are already more likely to side with them. They prey on unassuming civilians and target people of power to do their bidding. In some cases, that can include owners of big tech companies, or even international journalists. The goal is to use these people to tell their story. They are impressed with the depth of knowledge that is presented, and rarely question who is presenting it. They do little to no research on these characters who appear out of nowhere.

When caught, they disappear as quickly as they arrive.

They have little remorse and blame everyone else for their failure, which they often call a success. In other cases, they will say they are proud of what they did. Their thinking is warped, and as the mask slips, this becomes more and more apparent to any outsider who can objectively view the facts.

That is what makes this so dangerous. They are going after vulnerable people who are already willing to trust them due to cognitive bias and an agreeable disposition. In many cases, they track targets for years before ever actually approaching them or interacting with them.

Unfortunately, smart people feel too embarrassed to come forward when they fall victim to these operations. That is why I exposed Frame Games. To spare more people from getting harmed by him. And in this case, to spare our country and the future of national security. These operations wreak havoc on our system of justice and can cross the line from journalism to activism. From potential witness tampering to forever changing the Congressional record. From narrative placements with witnesses all the way to amicus briefs in the Supreme Court. Frame Games ran a frame on The United States government. He still is. The real question is: why is no one stopping him?

Why are social media platforms monetizing hate?

When you fund hate, you fund terrorism.

21st Century Information Warfare requires the audience’s attention to influence behavior. On social media, digital attention is a finite resource. Due to monetization, it is also a form of digital currency that everyone is now competing for.

Why are we paying content creators to host digital versions of Charlottesville every night on Twitter Spaces? These creators sow division, create chaos, and wreak havoc on the moderation system. Spaces are the modern-day version of targeted group manipulation. These spaces can be used to alter social behavior and social norms pertaining to the most controversial issues of our time.

When the mission turns into using targets for narcissistic supply – it is a clear sign that the person has gone rogue and is not following command. When their flying monkeys are powered by AI – it is even worse.

The operations are being waged domestically instead of internationally. This is a serious problem worth addressing.

Influencers: Paid chaos agents?

Unfortunately, these bad actors can damage populations and civilians. The ops can result in long lasting domestic civilian casualties- which is the part of this that no one ever talks about.

While there may not be any visible blood, there is often psychological damage and trauma associated with the victims of these operations.

Disarming the enemy

You are more likely to get exploited online if you don’t understand all of the ways you can be exploited in the first place. Domestic psychological operations and espionage agents bring criminal elements into your digital orbit. Through algorithmic social engineering and persuasion, the agents are able to disarm the enemy (you) from detecting them in the first place. Their goal is to not only win hearts and minds, but ultimately, to change hearts and minds through persistent narrative control.

Combating Antisemitism on Social Media Platforms: TikTok and X

Proposed Solutions

“U.S. military leaders need to re-evaluate whether the current structure is suited to fight future wars. To win the current fight, we must respect the fact that in 21st Century warfare, everyone plays a role. Both policymakers, SMPs, and private citizens must step up to secure the vulnerability created by our attention, habits, and influence—the technology that connects us to divide US (United States, that is).”

We must prosecute anonymous actors who spearhead digital violence the same way we prosecute those who spearhead real life violence.

We cannot give a pass to those who hold tiki torches on Twitter Spaces every night, while prosecuting only those who carry torches in person. By only prosecuting in person hate, we can guarantee that digital hate will exponentially grow because there are virtually no consequences for it unless real life violence occurs. This has to stop.

Impose costs on repeat offenders and hostile actors in cyberspace.

There must be consequences for those who continue to game the digital system under fake accounts. These bad actors know they will never be prosecuted, so they continue to create digital violence because they can get away with it. They exploit the gap in the system that currently exists. As long as they do not incite violence online, they can get away with it.

This gap is easily exploitable for both state and non-state foreign actors. In the end, our prisons are being filled with the followers of these movements, instead of with the leaders of the movements. The leaders cut deals, while their followers rot away. The system of anonymity is not protecting you. It only protects them.

We are prosecuting the wrong people. It is the leader who has ten thousand students. The student does not have ten thousand followers. If you attack the leader, you cut off the source of hate at the root.

Instead, we are prosecuting backwards. We are taking out the followers, and not the leader. This would be akin to going after those who followed Bin Laden, but not Bin Laden himself. America has it backwards, and we are paying the price for it and seeing the digital consequences for it.

It is not Mr. Benz who I care about- but rather- the ten thousand people he radicalized who still believe the narrative he pushed. The hate we see on the Internet today is a direct result of the hate he taught his ‘students’ aka followers years ago.

Countering hate speech on social media.

Report the content.

Increase volunteer count for annotation.

Familiarize yourself with the law.

- Social media platforms have a judicial responsibility to combat antisemitism. If they continue to host hate, they will be fined to oblivion, rendering these important communication channels obsolete.

- Understanding global hate speech laws plays a crucial role in combating antisemitism. By studying and understanding the history and manifestations of antisemitism, individuals can empower themselves to follow the law in the jurisdiction they reside in.

Questions to ask yourself when engaging with a new account on social media:

- Does this person have a vested interest in X?

- Does this person have a known history of being an expert in X? Does their professional history online support this claim?

- Has this person recruited followers to do X? What do the followers have in common?

- Is this person funded by X?

- Is this person a foreign agent?

Leveraging AI to combat Antisemitism

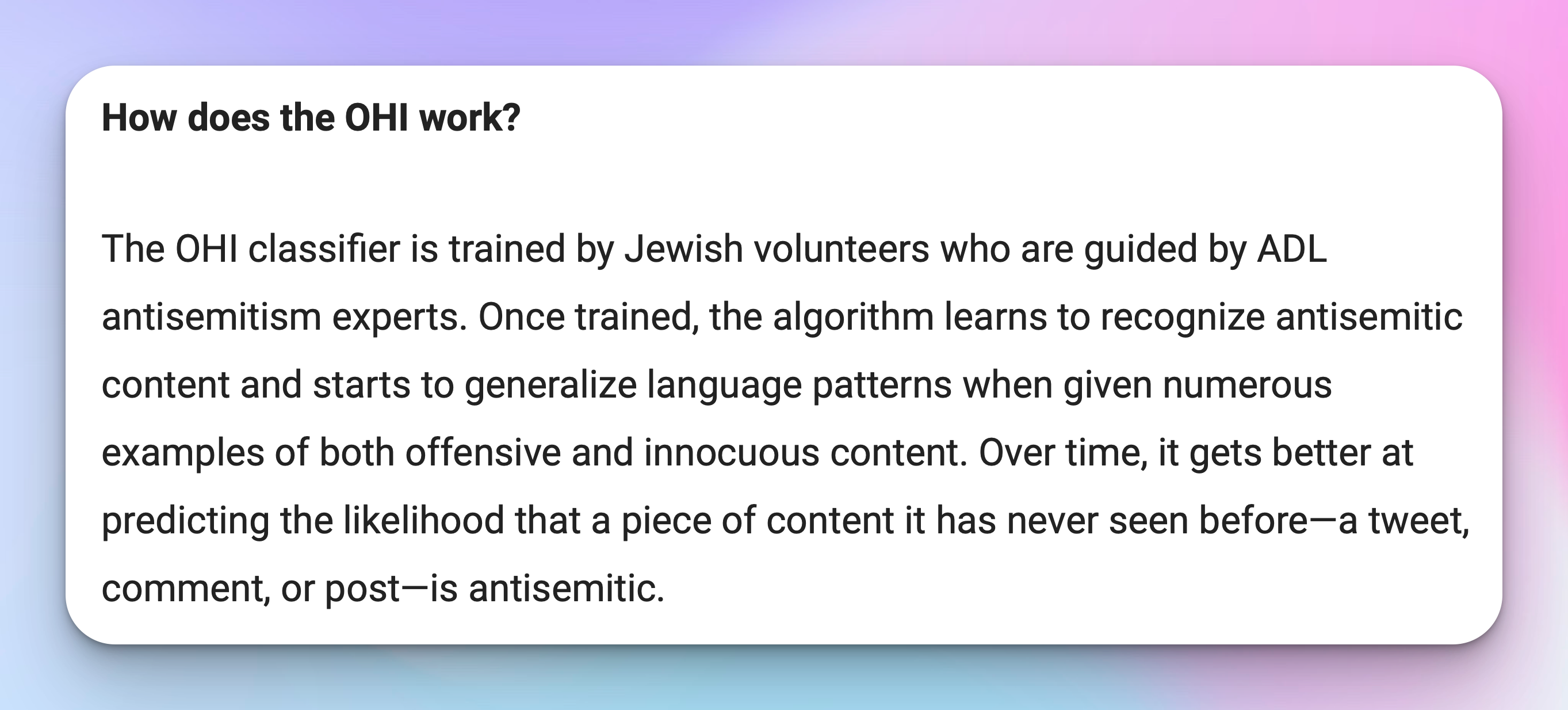

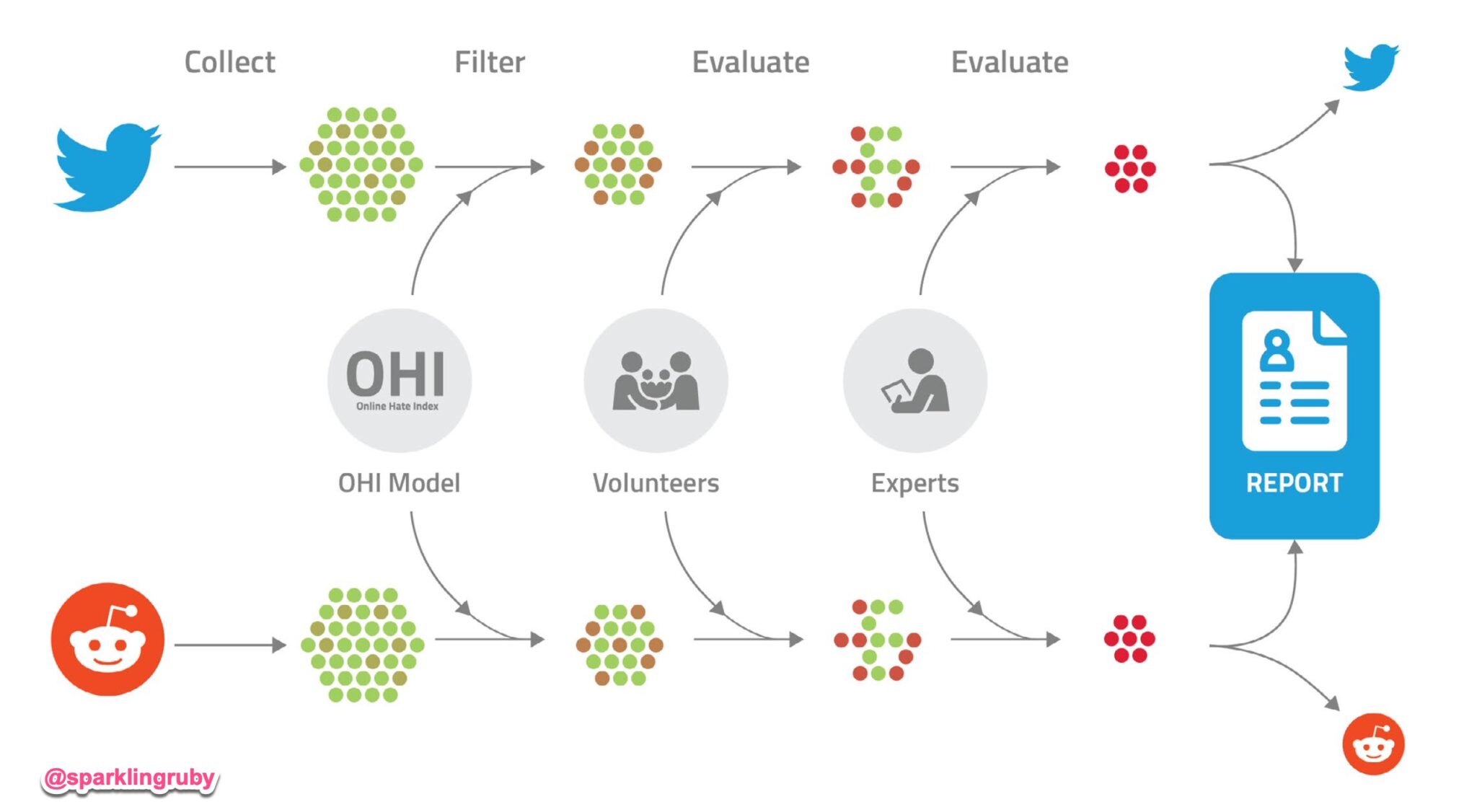

Users rely on content moderation to protect them from social media harassment. But most of these safety features are built with AI modifiers and classifiers. Training machine learning engines to detect antisemitism requires a consistent process of fine tuning. For example, the ADL used volunteer annotations to fine tune the Online Hate Index. Volunteers assigned labels to text, marking each piece of content as antisemitic or not. Those labeled examples are then used to train a model.

How can technology be used to detect and monitor antisemitic content?

The ADL has developed machine-learning detection and monitoring tools specifically designed to detect coded antisemitic hate speech and evolving tropes. By scraping data from social media platforms, new antisemitic terms can be identified.

READ: ADL Launches Online Hate Index to Detect Antisemitism on Social Media Platforms

The Online Hate Index (OHI) is a set of machine learning classifiers that detect hate on online platforms.

Classifiers in machine learning models can predict how to categorize a given piece of data. The process can be fully automated with machine learning and it can also be used to assist humans in sifting through large volumes of data.
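As a rough illustration of how a classifier can either automate a decision or assist human reviewers, the sketch below routes posts by a model score. The thresholds, queue names, post IDs, and scores are all hypothetical; none of them come from the ADL or from any platform.

```python
def route(score, auto_threshold=0.95, review_threshold=0.50):
    """Decide what happens to a post given a classifier score in [0, 1].

    The threshold values are illustrative assumptions, not published settings.
    """
    if score >= auto_threshold:
        return "auto_flag"      # confident enough to act without a human
    if score >= review_threshold:
        return "human_review"   # uncertain: a person makes the call
    return "no_action"          # low score: leave the post alone


# Hypothetical (post_id, score) pairs produced by some upstream classifier.
scored_posts = [("post-1", 0.98), ("post-2", 0.62), ("post-3", 0.07)]

queues = {"auto_flag": [], "human_review": [], "no_action": []}
for post_id, score in scored_posts:
    queues[route(score)].append(post_id)

print(queues)
```

Only the middle band reaches a person, which is how a model can help humans sift through large volumes of data instead of replacing them.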

“The OHI antisemitism classifier harnesses the extensive knowledge of ADL’s antisemitism experts alongside trained volunteers from the Jewish community. ADL’s OHI antisemitism classifier development and application represents the first independent, cross-platform measurement of the prevalence of antisemitism on social media.” – ADL

“ADL Center for Technology and Society uses machine learning to build classifiers to understand the proliferation and mechanics of hate speech. Machine learning is a branch of artificial intelligence where computers, given human-labeled data, learn to recognize patterns. In our use case, we are concerned with the patterns computers can find in language. Classifiers, models that predict the category in which a piece of data belongs, can be fully automated or assist humans to sift through large volumes of data.”

Machine learning works similarly. In the case of our antisemitism classifier, the algorithm learns to recognize antisemitism and starts to generalize language patterns by being given numerous examples of both offensive and innocuous content. Over time, it gets better at predicting the likelihood that a piece of content it has never seen before—a tweet, comment, or post—is antisemitic.

The OHI classifier learns to identify connections between English-language text (e.g., social media content) and human-assigned labels. First, people who volunteer to serve as labelers of content assign labels to that text (e.g., antisemitic or not). The system converts text and labels into numerical form (called an embedding or input feature).

ADL OHI machine learning classifier
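One simple way to picture the conversion of text and labels into numerical form is a bag-of-words count vector. This is a minimal sketch under that assumption. The OHI may use a very different embedding, and the example texts, the `slur_placeholder` token, and the label names are invented for illustration.

```python
from collections import Counter


def tokenize(text):
    """Lowercase and split on whitespace; a real pipeline would do far more."""
    return text.lower().split()


def build_vocab(texts):
    """Map each distinct token to a column index, in sorted order."""
    tokens = sorted({tok for text in texts for tok in tokenize(text)})
    return {tok: i for i, tok in enumerate(tokens)}


def to_vector(text, vocab):
    """Count how often each vocabulary token appears in the text."""
    counts = Counter(tokenize(text))
    return [counts.get(tok, 0) for tok in vocab]


# Hypothetical label names; volunteers would choose one per piece of text.
LABEL_TO_INT = {"not_antisemitic": 0, "antisemitic": 1}

# Invented annotations using a placeholder token instead of real slurs.
annotated = [
    ("they are slur_placeholder", "antisemitic"),
    ("they are my neighbors", "not_antisemitic"),
]

vocab = build_vocab([text for text, _ in annotated])
X = [to_vector(text, vocab) for text, _ in annotated]
y = [LABEL_TO_INT[label] for _, label in annotated]

print(list(vocab))  # column order of the feature vectors
print(X, y)
```

Each row of `X` is one piece of text and each column is one word, so the model never sees language directly, only counts.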

“The model then adjusts billions of numerical parameters, commonly called “weights” in machine learning parlance, to produce an output that matches the human labels. It can learn, for example, that the words “k*ll” and “Jews” frequently co-occur with the label antisemitic, unless the words “don’t” or “wrong” also appear. But the model is complex, and not easily explainable in human terms, so data scientists evaluate the models on how well they can evaluate text that is novel to the model. This process repeats so that after each round of making inferences, the model improves. Once trained, the model can receive inputs of English-language text and predict whether the text is antisemitic at speeds far faster than humans can. Whereas it takes seconds to minutes for a human to evaluate one piece of text, the model can process thousands per second. This is useful for processing data at platforms’ vast scales.”
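The idea of adjusting weights until the output matches the human labels can be sketched with a tiny logistic regression trained by gradient descent. This is a swapped-in teaching example, not the OHI model: it has eleven weights rather than billions, and the training texts, the `slur_placeholder` token, and the hyperparameters are assumptions made for illustration.

```python
import math


def featurize(text, vocab):
    """Bag-of-words counts over a fixed vocabulary; unknown words are ignored."""
    tokens = text.lower().split()
    return [tokens.count(tok) for tok in vocab]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, x):
    """Probability that feature vector x carries the positive label."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def train(X, y, epochs=500, lr=0.5):
    """Nudge each weight against the prediction error, one example at a time."""
    weights, bias = [0.0] * len(X[0]), 0.0
    for _ in range(epochs):
        for x, target in zip(X, y):
            error = predict(weights, bias, x) - target
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
            bias -= lr * error
    return weights, bias


# Invented, human-labeled examples (1 = hateful, 0 = not hateful).
train_data = [
    ("they are slur_placeholder", 1),
    ("slur_placeholder go away", 1),
    ("they are my neighbors", 0),
    ("saying slur_placeholder is wrong", 0),
    ("my neighbors are kind", 0),
]

vocab = sorted({tok for text, _ in train_data for tok in text.lower().split()})
X = [featurize(text, vocab) for text, _ in train_data]
y = [label for _, label in train_data]
weights, bias = train(X, y)


def score(text):
    """Score any text with the trained weights."""
    return predict(weights, bias, featurize(text, vocab))


for text, label in train_data:
    print(f"{score(text):.2f}  label={label}  {text}")
```

In this toy data the placeholder term appears in both hateful and non-hateful examples, so the model has to lean on surrounding words such as "wrong" to separate them. A linear model like this one only approximates that kind of context, which is one reason production systems are far larger.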

Frequently retrain machine learning classifiers to accurately detect antisemitism.

By the time a model is trained on a term, a new term has already been created to evade model detection. This requires consistent effort to combat evasion.
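One hedged way to notice that language has moved on is to measure how many words in recent posts were never seen during training. The sketch below is an assumption about how such a check could work, not a description of any platform's process. The vocabulary, the sample posts, the `new_coded_term` token, and the 0.3 threshold are all hypothetical.

```python
def unseen_token_rate(posts, known_vocab):
    """Fraction of tokens in the posts that are missing from the training vocabulary."""
    tokens = [tok for post in posts for tok in post.lower().split()]
    if not tokens:
        return 0.0
    unseen = sum(1 for tok in tokens if tok not in known_vocab)
    return unseen / len(tokens)


def needs_retraining(posts, known_vocab, threshold=0.3):
    """Signal a retrain when too much recent language is unfamiliar to the model."""
    return unseen_token_rate(posts, known_vocab) > threshold


# Hypothetical vocabulary the current model was trained on.
known_vocab = {"they", "are", "my", "neighbors", "slur_placeholder"}

last_month = ["they are my neighbors"]
this_week = ["they are new_coded_term", "new_coded_term everywhere"]

print(unseen_token_rate(last_month, known_vocab), needs_retraining(last_month, known_vocab))
print(unseen_token_rate(this_week, known_vocab), needs_retraining(this_week, known_vocab))
```

A rising rate does not prove evasion, since ordinary slang also changes, but it is a cheap signal that new volunteer annotations and a retraining round may be due.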

What responsibility do social media platforms have in addressing antisemitism?

Social media platforms have a responsibility to address and combat antisemitism on their platforms. That includes retraining the machine learning algorithms used to detect and flag hate-filled content, refreshing the data those algorithms are trained on, and expanding content filters.

The use of artificial intelligence to flag antisemitic content and the expansion of content filters to screen antisemitic speech.

Social media platforms are combating antisemitism with artificial intelligence (AI) technology to flag antisemitic slurs and tropes with classification. By analyzing patterns of speech and identifying keywords and phrases associated with antisemitism, AI algorithms can quickly identify and remove this type of content, preventing further dissemination.

This is where free speech absolutists differ. They do not believe AI should be used to classify any message as antisemitic. They do not believe in classifiers at all.

The expansion of machine learning filters to screen out antisemitic content is an ongoing process. By proactively filtering and removing antisemitic content, social media platforms can reduce the visibility of antisemitic rhetoric.

By leveraging artificial intelligence and machine-learning detection tools, you can identify and monitor antisemitic content on social media at scale. This automated approach to AI content moderation enables swift action and ensures that toxic content is removed quickly.

Technology can be leveraged to detect and monitor antisemitic content, allowing for the identification of evolving tropes and the tracking of language.

However, the responsibility to combat antisemitism on social media does not rest solely on technology. Individuals must participate in creating healthy digital environments.

Social media platforms don’t create hate. They amplify it. People create hate. Moderation is fundamentally a people problem – not a moderation problem.

We cannot continue to blame platforms for the hate that people produce.

People must start taking responsibility and accountability for their contribution to the digital ecosystem.

Using AI Technology to Detect and Monitor Antisemitic Content

The rise of antisemitism on social media platforms requires a three-pronged approach of human moderation, AI content moderation, and constant retraining of machine learning classifiers.

By utilizing artificial intelligence (AI) detection and monitoring tools, platforms can identify and track antisemitic content on social media platforms.

For years, the Anti-Defamation League (ADL) has been at the forefront of developing machine-learning algorithms that can detect coded antisemitic speech and evolving tropes. By analyzing data scraped from social media platforms, machine learning tools can identify new antisemitic terms. This allows for the tracking of the spread of coded language and the early identification of emerging trends. However, if we cut off researchers’ access to data, they will not be able to track evolving tropes and will instead be making decisions based on old training data, which will become outdated over time.

The truth is that technology will not solve the problem of hate. It can bury it, hide it, or suppress it, but it will never eradicate it. Furthermore, the eradication of hate leads to the erasure of history. We cannot understand history if we delete it from the Internet. This will directly lead to false conclusions based on incomplete data sets. This is the danger of trying to remove every bad actor from the Internet. How can we learn from our past if our past has been removed from Google?

When you search for the Holocaust, you can find what it was. Imagine if someone decided to wipe every instance of Holocaust education from the Internet because they declared Hitler a misinfo spreader and bad actor? Even though he is both of those things, we need to be able to preserve history so we can learn from history. My fear is that the erasure of history is going to lead to more hate because we are not getting an accurate assessment of hate that existed in the past. This is why it is critical not to erase our enemies off of the face of the earth when it comes to search.

We need to be able to find them. We need to be able to spot patterns. We need to be able to know who we are talking to. When you work hard to delete these people, you remove our ability to look into the historical data of who these people were before they reinvented themselves. Whether intentional or not, you are helping them conceal their identities. This is a form of censorship in and of itself. Even if you think your goal is noble, it is not. It assumes that everyone has the same historical data that you do, and you alone should dictate historical records.

History is not for one person to decide. It is a collective decision and shared experience. And when people assume a moral high ground of who or what gets to stay, this is the highest level of arrogance. Judging by current disinfo and hate speech standards today, everyone would be rallying to remove Hitler off the Internet if he were alive. Any trace of him would be wiped. Do you know how dangerous that would be? That would mean we could never learn the history of the Holocaust.

In your effort to shield us from our darkest moments, you are concealing the worst moments of our past, and ensuring we will blindly go into the future having very little understanding of how we got to where we are today. If someone had not preserved the archives of Frame Games’ hate, we wouldn’t be able to learn from it and I wouldn’t have been able to find it.

This is why it is so dangerous to obliterate people from search. You are essentially removing informed consent. We must have the same access to information that you do so that we can make educated decisions on who we engage with and what they have done in the past. When you get them removed from the Internet, you take away our ability to do that.

You are not stopping hate; you are actually fueling it. You are ensuring that anyone in the future will always work from a flawed baseline.

Furthermore, this means that the younger generations will get further and further away from understanding why antisemitism is such a problem today. Why? Because you got all of the antisemitic bad actors removed from the Internet. This actually hurts the case you are trying to make. If you remove everyone – no one will believe you that this is really an issue. This is why we must archive and preserve hate to preserve history so we can learn from history instead of burn history. Removing Internet search results is a form of Internet revisionism. I do not endorse it nor do I agree with it. NARA is currently trying to do this with artificial intelligence- which would fundamentally change all historical records. Forever.

Kris Ruby’s recommendations for additional safety features and AI content moderation.

How to become more resilient to domestic psychological operations.

- Report problematic accounts. Don’t only rely on machine learning to detect hate. If you see something, say something.

- Control your feed. Block accounts that engage in harassment and name calling.

- Change comment settings. Access to you is a privilege, not a right.

Here are the ways platforms are currently dealing with influence operations:

- AI detection and removal

- Restricting the reach of state affiliated media

- Blocking domains from appearing on Google search and discover

The Rise of Antisemitic Hate

Kristen Ruby, CEO of Ruby Media Group, joined Fox News Live to discuss the surge of antisemitism on social media.

The weaponization of social media poses real life risks.

Jewish TikTok influencers sound off:

“We recommend the creation of a community manager role dedicated to Jewish creators.”

Jewish social media influencers recently wrote an open letter to TikTok. In the letter to TikTok, Jewish content creators stated they feared for their physical safety and the offline real-world implications of the online hatred they face.

KRIS RUBY FOX NEWS LIVE TRANSCRIPT:

Arthel Neville: Pro-Palestinian support is flooding the Internet in comparison to pro-Israel content, and with that comes a rise in threats and antisemitic content. Joining me now is Kris Ruby. She’s the CEO of Ruby Media Group and a social media expert. If we could get your reaction to what we just heard.

Kris Ruby: I agree with all of it. We are seeing unprecedented levels of antisemitism on social media platforms everywhere from TikTok to X, formerly known as Twitter. I think all of this is coming at the same time as the launch of artificial intelligence, and that makes this even worse because you can really unleash an entire bot army on someone.

Sometimes the hate that you’re receiving may actually be a bot army and not a real person, and in the near future, it’s going to become very difficult to discern between the two. Who are you fighting with? A person or AI? That also directly intersects with what we’re seeing in general on social media, which is the increase of psychological domestic operations and cyber warfare being waged to pit people against each other and create partisan division at scale.

Arthel Neville: Did you sign the open letter to TikTok executives?

Kris Ruby: I haven’t signed it, but I’m familiar with it. I agree with what is said in it. One of the standout recommendations was when they said- we want a community manager that is going to specifically represent our interests as the Jewish community. Every other community is represented. Why are Jews not represented in terms of community management on TikTok? TikTok has responded and said that they take this seriously, but from my own experience and reporting, users are saying something very different. TikTok has done a good job of removing antisemitic hashtags, but not the comments.

Arthel Neville: Not the comments. Let me read part of a statement they gave to the media. It says, in part, “we’ve taken important steps to protect our community and prevent the spread of hate, and we appreciate ongoing honest dialogue and feedback as we continually work to strengthen those protections.” Does this make you feel safe? I mean, is this enough?

Kris Ruby: No, it’s definitely not enough. Again, this is really a war with the machine right now with machine learning, because there is an over reliance on machine learning to detect antisemitism in the first place. ADL has talked about that as well in terms of having volunteer annotators to label text in the Online Hate Index, where they actually have people who will flag text as being antisemitic. But AI is not always going to get this right. If you see something, say something, flag the content, and participate in the process.

Arthel Neville: You mentioned this earlier, but I’ll highlight it now, that on X there are atrocious and menacing hashtags like ‘Hitler did nothing wrong.’ Why do you think this kind of vitriol and hatred is allowed on any social media platform? I get what you’re telling me that there can be AI bot farms tossing things into the mix, but I mean, this should just not be allowed.

Kris Ruby: Correct. So, unfortunately, some people seem to be a little bit confused about the difference between free speech and hate speech. Hate speech is not free speech. X is a global platform. They have to abide by global laws. In Germany, there is already a lawsuit against Musk for antisemitic content hosted on X. There are specific laws in Germany regarding Holocaust denial and regarding what social media platforms can and cannot allow.

But as far as X is concerned, in their terms of service, Holocaust denial is explicitly not allowed. So, it is unclear to me why they are still allowing that type of content on their platform. There is simply no excuse for it.

Arthel Neville: What about just boycotting those platforms? I mean, we’ll make it go away, but I don’t know. Could that help? Just asking.

Kris Ruby: There will always be new emerging platforms that pop up just as quickly as someone can boycott it. So, the real underlying issue isn’t the platform as much as it is the people and the user sentiment and where this hate is coming from and how we can really get to the root cause of that hate.

There should be a letter to X about this issue. Maybe I’ll go write it. This issue is happening across the board on every single platform right now.

Arthel Neville: What shocks me is these are young people. They have these really warped senses of perspective at a young age.

Kris Ruby: We’re also seeing people getting cancelled for this. There is one account where they track this and if you’re seen ripping off these posters in Manhattan, that account will post your face. Young people who are doing this should really consider the future of their career. They will not get hired ever again and that is just a reality. If you are aligned with antisemitism, you’re on the wrong side of this. We need to end hate, period.

Arthel Neville: We need to end hate, period, and we’ll end this segment on that note, Kris Ruby. Thank you very much.

PRESS:

Fox News: Kris Ruby “Antisemitism on TikTok”

Fox News: Kris Ruby “Social media spreads antisemitic and anti-Israeli sentiment”

READ:

THE U.S. NATIONAL STRATEGY TO COUNTER ANTISEMITISM

LEGAL DISCLAIMER AND COPYRIGHT NOTICE:

You do not have the authorization to screenshot, reproduce or post anything on this article without written authorization. This article is protected by U.S. copyright law. You do not have permission to reproduce the contents in this article in any form whatsoever without authorization from the author, Kris Ruby. All content on this website is owned by Ruby Media Group Inc. © Content may not be reproduced in any form without Ruby Media Group’s written consent. Ruby Media Group Inc. will file a formal DMCA Takedown notice if any copy has been lifted from this website. This site is protected by Copyscape. This post may contain affiliate links for software we use. We may receive a small commission if any links are clicked.

This article was written by a human and no artificial intelligence was used to write this article.

No Generative AI Training Use

Ruby Media Group Inc. reserves the rights to this work and any other entity, corporation, or model has no rights to reproduce and/or otherwise use the Work (including text and images on this website) in any manner for purposes of training artificial intelligence technologies to generate text, including without limitation, technologies that are capable of generating works in the same style or genre as the Work. You do not have the right to sublicense others to reproduce and/or otherwise use the Work in any manner for purposes of training artificial intelligence technologies to generate derivative text for a model without the permission of Ruby Media Group. If a corporation scrapes or uses this content for a derivative model, RMG will take full legal action against the entity for copyright violation and unlicensed usage.

DISCLOSURE: This reporting is of public interest.

Date last updated: August 15, 2025

KRIS RUBY is the CEO of Ruby Media Group, an award-winning public relations and media relations agency in Westchester County, New York. Kris Ruby has more than 15 years of experience in the Media industry. She is a sought-after media relations strategist, content creator and public relations consultant. Kris Ruby is also a national television commentator and political pundit and she has appeared on national TV programs over 200 times covering big tech bias, politics and social media. She is a trusted media source and frequent on-air commentator on social media, tech trends and crisis communications and frequently speaks on FOX News and other TV networks. She has been featured as a published author in OBSERVER, ADWEEK, and countless other industry publications. Her research on brand activism and cancel culture is widely distributed and referenced. She graduated from Boston University’s College of Communication with a major in public relations and is a founding member of The Young Entrepreneurs Council. She is also the host of The Kris Ruby Podcast Show, a show focusing on the politics of big tech and the social media industry. Kris is focused on PR for SEO and leveraging content marketing strategies to help clients get the most out of their media coverage.