
Artificial Intelligence (AI) in Journalism

Artificial intelligence adoption expands to newsrooms across the country.

Artificial intelligence (AI) is already having a significant impact on journalism, and this impact is likely to grow exponentially over the next decade.

What does this mean for the future of journalism?

Artificial intelligence will disrupt every industry, and that includes journalism. Digital content and SEO have blurred the line between traditional journalism and digital content production.

Automated Journalism Market

Large language models are disrupting newsrooms by enabling journalists to use AI tools such as GPT-4 to write stories at warp speed.

EXECUTIVE SUMMARY:

- AI cannot write coherent news stories without human intervention.

- AI is useful to summarize articles, but it should not be used to write the entire article.

- AI can automate laborious journalism tasks, including interview transcription, summarization, voice-to-text audio notes, and automated fact-checking.

- Critical thinking, analysis, solid news judgment and investigative reporting skills will still be essential to the future of journalism.

- AI will be used as a tool to enhance and augment the work of journalists. It will not replace journalists. It may, however, replace TV news anchors with AI-generated avatars and anchors.

How is AI used in Journalism?

There are a variety of AI-powered journalism tools that writers can use to assist in the reporting process.

However, before a journalist chooses an individual AI tool, it is important to understand the different categories of machine learning software that exist.

Here are some examples:

- Natural language processing (NLP) software: NLP software can be used to analyze large quantities of text data, such as social media posts or news articles, to identify patterns and trends. NLP is useful for uncovering new story leads and identifying topics of interest based on trend analysis.

- Speech-to-text transcription software: Speech-to-text AI transcription software can be used to transcribe audio or video interviews with sources, making it easier and faster for journalists to extract quotes. This is particularly useful for journalists when it comes time to promote their work on social media.

- Facial recognition tools: AI-powered image and video analysis tools can be used to identify and analyze visual content. This includes identifying faces, objects, or locations in photos or videos. Facial recognition software of this kind was once largely reserved for U.S. law enforcement but is now available to the public. Investigative biometric AI tools can help journalists quickly identify a suspect. The tools can also be weaponized for social media stalking and harassment, so it is important to use them ethically and responsibly.
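To make the NLP trend-spotting idea above more concrete, here is a minimal Python sketch. It assumes the posts are already collected as plain strings and uses simple word counts as a stand-in for real NLP; the function names, the tiny stopword list, and the `min_count` cutoff are illustrative assumptions, not any specific tool's method. It ranks terms whose frequency jumped relative to a baseline period, which is one rough way to surface a possible story lead.

```python
import re
from collections import Counter

# Illustrative stopword list; real tools use much larger ones.
STOPWORDS = {"the", "a", "an", "and", "of", "to", "in", "is", "on", "for", "at", "with"}


def term_counts(posts):
    """Count lowercase word tokens across all posts, skipping stopwords."""
    counts = Counter()
    for post in posts:
        for word in re.findall(r"[a-z']+", post.lower()):
            if word not in STOPWORDS:
                counts[word] += 1
    return counts


def rising_terms(current_posts, baseline_posts, top_n=5, min_count=2):
    """Rank terms whose frequency rose most relative to a baseline period.

    Adds 1 to baseline counts so terms absent from the baseline don't divide by zero.
    Ties are broken alphabetically so the output is deterministic.
    """
    current = term_counts(current_posts)
    baseline = term_counts(baseline_posts)
    scores = {
        term: count / (baseline[term] + 1)
        for term, count in current.items()
        if count >= min_count
    }
    return sorted(scores, key=lambda t: (-scores[t], t))[:top_n]


# Hypothetical example data.
baseline = ["City council meets on budget", "Budget vote delayed"]
current = [
    "Water main break floods downtown",
    "Downtown water outage continues",
    "Budget vote today",
    "Water crews downtown",
]
print(rising_terms(current, baseline))
```

Real newsroom tools add proper tokenization, entity recognition, and deduplication; a rising term is a lead to report out, not a finding.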

Reporting/Interview Process:

- Write article outlines before an interview

- Use natural language processing to condense frequently asked questions so you can dig into the unanswered ones

Publication/Promotion Process:

Before an article is published, there are technical details that must be plugged into a content management system. Typically, a search engine marketing professional would work with a reporter to input this information.

Today, AI tools can smooth the handoff between reporters and search engine marketers and help them share responsibility for these tasks. AI-powered SEO tools can give journalists a competitive advantage, especially over peers who are not yet proficient in SEO.

Writers can also use AI to:

- Write article meta descriptions

- Craft SEO categorization tags for articles

- Create AI-generated feature header images for articles

Journalism Automation

How can AI help journalists?

AI can help journalists automatically transcribe interviews, condense research, and create AI-generated images for articles instead of stock photos.

With a few text prompts, it is now possible to generate a complete article in seconds.

AI is already impacting journalism. Here’s how:

- Automation of news writing: AI-powered writing tools can help writers automatically generate news articles based on large datasets such as earnings reports or bureau statistics. This can help news organizations quickly produce large volumes of news content and data journalism. However, it can also multiply errors, false facts, and misinformation just as quickly. Quantity of output does not equate to quality of output.

- Personalization of news: AI algorithms can analyze a reader’s browsing history and preferences to deliver personalized news content, which can increase engagement and reader loyalty. This can also make AI news more addictive, similar to what we have seen with the TikTok algorithm. Personalization of news benefits publishers more than it benefits writers.

- Fact-checking: AI-powered tools can help identify fake news and misinformation by analyzing sources, cross-checking information, and detecting anomalies in images or videos. However, this becomes a serious problem when accurate information is incorrectly labeled as misinformation.

- Data-driven reporting: AI can help journalists identify and analyze patterns in large datasets, such as social media posts or government data, to uncover trends or even errors. AI will accelerate the adoption of data journalism.

- Audience engagement: AI can help news organizations to better understand their audience by analyzing engagement data, such as click-through rates and social media visibility. Publishers can leverage this information to inform content distribution strategies. However, this blurs the line between advertising and editorial, and puts journalists in a position where they are optimizing for clicks instead of valuable reporting and investigative journalism.

READ: AP’s ‘robot journalists’ are writing their own stories now

The benefits of automated journalism

What are the benefits, advantages, and disadvantages of automated journalism?

It is important to carefully consider the potential benefits and risks of using AI in journalism.

Strengths:


- Faster content production

- Higher volume of published content

Weaknesses:


- Plagiarized content

- Duplicate content

- Factually incorrect content

Opportunities:


- Automation of SEO tasks related to content publication and promotion

Threats:


- Journalists replaced with AI

- Over time, it will become increasingly difficult to distinguish factual reporting from AI-generated output.

- The entire content marketing agency model could be replaced with AI tools for a fraction of the cost. Agencies must quickly adapt or perish.

Legal Risks:

- Human bias can seep into datasets and AI models, creating ethical and legal issues, including questions of intellectual property.

- Are you paying for plagiarized content that you can get sued for down the line? Are you paying a consultant to create content for you that someone else can steal? If so, what does this mean for your original investment in content marketing?

- Will this create a race to the bottom?

- How can you protect your initial investment and content assets?

- If people know that their content will inevitably be scraped by large language models, will this dissuade people from hiring a content marketing agency?

- How does this paradigm shift change the value of content marketing?

- AI-generated content is often plagiarized content from other writers who were never compensated. These writers never consented to have their content used or scraped in the first place.

AI NEWSROOM DISRUPTION

How is artificial intelligence disrupting the modern-day newsroom?

While AI has the potential to greatly enhance the efficiency and effectiveness of newsrooms, it also has the potential to disrupt traditional newsroom structures and workflows.

Here are several ways AI could disrupt newsrooms:

- Automation of tasks: AI can automate manual tasks traditionally performed by journalists, such as data analysis, fact-checking, and even writing. This could lead to job losses for journalists whose work becomes automated in the near future. It could also significantly decrease journalists’ salaries if AI is used to automate the research they are paid to do.

- Changes in newsroom workflows: AI can change the way newsrooms operate, with journalists working alongside algorithms and technologies that help to identify stories and analyze data. This could require changes in newsroom structures and workflows to accommodate the use of AI.

- Increased competition: As AI tools reach mainstream adoption, newsrooms that do not adopt AI technology will inevitably fall behind in terms of efficiency and reach, leading to increased competition between news organizations. TV news anchors are already being replaced with AI-generated TV news anchors to report the news, which could also eat into the cable news industry if more talent is replaced with AI.

- Changes in content and audience engagement: AI-powered personalization could lead to changes in the type of content produced by newsrooms and the way that audiences currently engage with news. This could result in a seismic shift towards more personalized news content and a greater emphasis on audience engagement metrics.

- Automation of routine tasks: AI can automate routine tasks such as fact-checking, data analysis, and content distribution, freeing up journalists to focus on more complex and creative tasks.

- Changes in job roles and responsibilities: As AI takes on some tasks traditionally performed by journalists, roles and responsibilities may shift, and journalists will need to develop new skills and expertise in machine learning to remain competitive.

- Increased reliance on data and analytics: As AI-powered tools become more prevalent, newsrooms will become increasingly data-driven. Journalists will need a strong understanding of data analysis, interpretation, and prompt engineering. This will not be a nice-to-have; it will be a must-have.

- Business model changes: As newsrooms adopt AI-powered tools, they may need to rethink their revenue models. AI tools could potentially reduce costs and increase efficiency.

The long-term impact of AI on newsrooms will depend on how quickly machine learning technology is implemented and adopted.

Journalists Vs. Machines

AI will support boots-on-the-ground investigative reporting; it won’t replace journalists.

AI copywriting tools make it easy for content creators to publish content rapidly. This has led to unrealistic expectations about how long it takes for a journalist to produce meaningful and impactful investigative reporting.

When humans compete with a machine on speed, the machine will always win.

The machine can produce output much faster than a human can, and this will create long-term problems for humans. Journalists will face growing pressure to produce more for less, or risk being replaced by a machine.

Operation Warp Speed

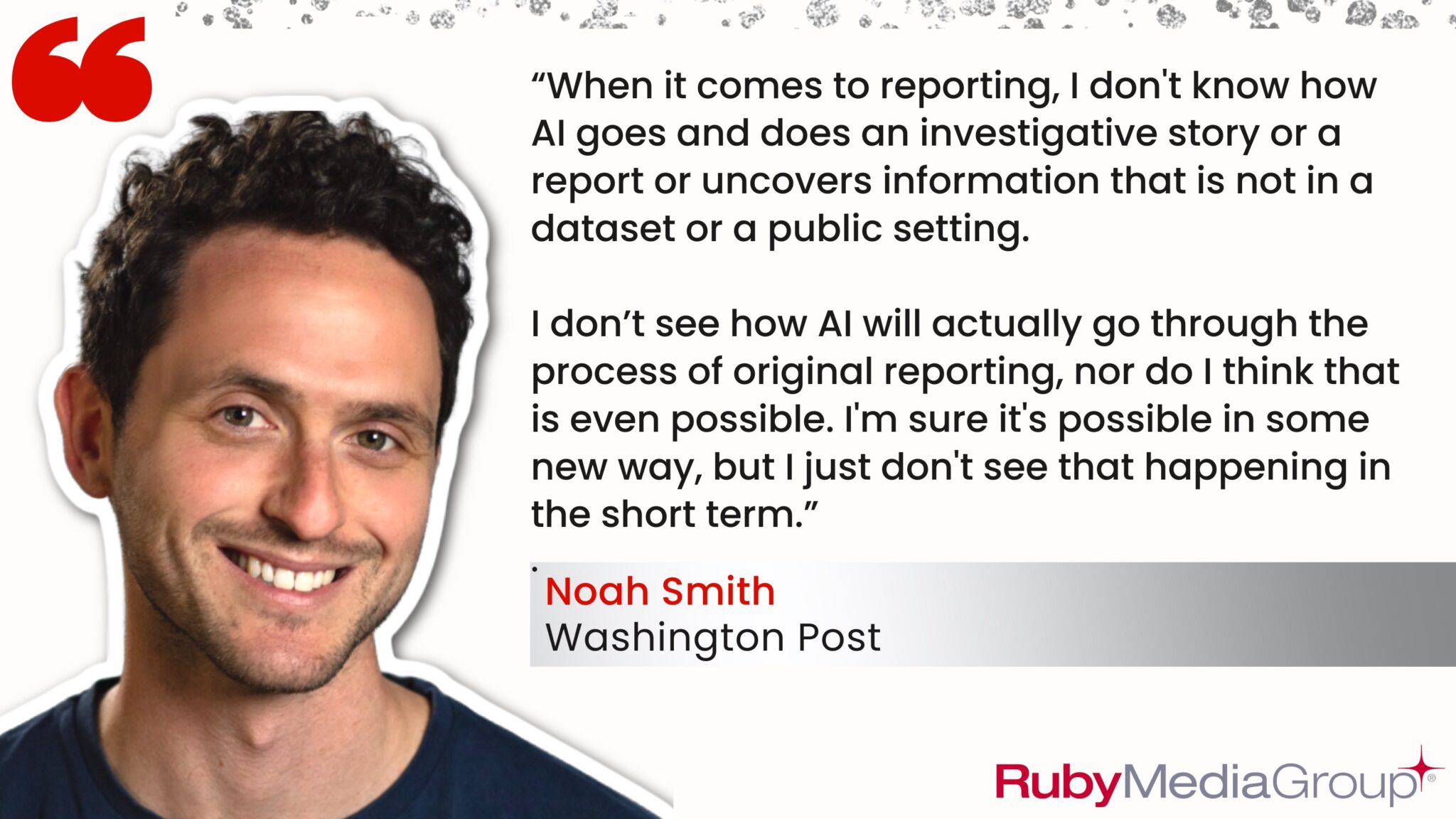

Over the past year, rapid-fire advancements in artificial intelligence have continued to accelerate. When we hosted this fireside discussion with journalist Noah Smith of The Washington Post and Bree Dail of The Epoch Times in 2021, generative AI art tools like DALL-E, Midjourney, and Stable Diffusion had not yet been released to the public.

Generative AI art tools have added an entirely new layer to the discussion of AI in journalism.

To compete for eyeballs and clicks, journalists are now able to create generative artificial intelligence images in seconds.

Many mainstream publications have already started to incorporate AI-generated art into their publications and feature stories. This has created widespread controversy in editorial departments and newsrooms across the country.

While our conversation focused on the use of AI-generated content in newsrooms, we would be remiss if we didn’t include AI-generated art as well. Both have the ability to rapidly transform how newsrooms publish content at scale.

That being said, there are pros and cons to using the technology.

We are witnessing the real-time transformation of journalism.

Recently, Canva added AI art generation to its graphic design tools.

The agency tools we use daily for business communication and publishing will soon come bundled with built-in artificial intelligence capabilities.

It is not a question of if, but rather, when.


For journalists, what matters most is original and factual information.

Journalists can use artificial intelligence to research topics and collect structured data, but they should not use it to write copy for investigative journalism.

For serious topics and in-depth reporting, you need to conduct investigative research.

- AI can lead to duplicate content.

- AI can include outdated facts.

Pro Tip: Always run AI-generated content through Copyscape before publishing.
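Copyscape is a commercial service, and the sketch below does not use its API. It illustrates one common technique behind near-duplicate detection: breaking two texts into overlapping word sequences ("shingles") and comparing them with Jaccard similarity, so you can see roughly what such a check measures. The function names, the shingle size `k=3`, and the 0.5 threshold are illustrative assumptions.

```python
import re


def shingles(text, k=3):
    """Return the set of k-word shingles (overlapping word sequences) in text."""
    words = re.findall(r"\w+", text.lower())
    if len(words) < k:
        # Too short for a full shingle: treat the whole text as one shingle.
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a, b):
    """Jaccard similarity of two sets: size of intersection over size of union."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def looks_duplicated(draft, source, k=3, threshold=0.5):
    """Flag a draft whose shingle overlap with a source meets an assumed threshold."""
    return jaccard(shingles(draft, k), shingles(source, k)) >= threshold


draft = "The city council approved the budget on Tuesday night"
print(looks_duplicated(draft, draft))
print(looks_duplicated(draft, "Local bakery wins regional award for sourdough bread"))
```

A local check like this only compares against texts you already have; services such as Copyscape search the web, which this sketch does not do.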

80% of Internet content is generic clickbait spam.

Artificial Intelligence and Journalism

Why subject matter expertise and human creativity are more important than ever before

Will AI replace journalists?

No, AI won’t replace journalists, but it will replace the journalism processes currently used at many digital newsrooms across the country.

Digital media outlets have changed. Publications are looking for website traffic, an increase in daily active users, and content that converts to clicks for ad revenue.

AI tools can assist with automation and business intelligence on the B2B marketing analytics side, for example by identifying which articles perform best based on click-through rate.

Unfortunately, the journalists who are rewarded will be the ones who write the best copy for the web.

This means writing with an SEO-first approach: considering backlinks and images, and prioritizing alt text.

The optimization of writing is about to become more important than the actual writing itself.

This is a major problem.

Many traditional journalists love the art of writing. They don’t want to be bogged down with the SEO side of journalism. This has caused a rift between traditional and digital writers.

The use of assistive generative AI technology will quickly become a critical skill set that publishers expect before hiring an investigative reporter.

Will AI replace writers?

“No, it will replace really low-quality writing,” said Jeff Coyle, Founder of MarketMuse.

“The fundamental principle is that for the best writers, AI isn’t a replacement for people who understand the writing process.

The act of writing is only part of what makes a successful writer and only part of what makes a successful content marketing team or journalist.

Making decisions about what to write and using artificial intelligence in that process is critical.

Create sources of truth for an editor to communicate what is needed to a writer so that the article that is produced meets the needs of the business or publication.

All of these writing stages can be enhanced and amplified with artificial intelligence processes.

- Provide insights for spell check

- Grammar checks

- Quality comprehensiveness throughout the writing process

- Post-publish editing and improvements

The greatest misconception is that you are able to go from a not well thought out idea to a finished piece of content and that AI is some sort of replacement value.

That it is much like a magician’s trick, where in reality, there is work to be done and treating content generation like an outsourced person is much more aligned with the market reality.

The better an outline or content brief is that a writer produces, the better the output of the generation will be.”

Validation is critical.

You need to treat AI-generated content like somebody who you still need to vet.

Treat the text you receive from an AI generative writing tool as something that is not vetted and needs to be enhanced, improved, checked, and balanced before it is meaningful and reasonable to publish.

“If I don’t do that, the punchline is that the joke is on me,” said Jeff Coyle.

“I’m legally and ethically responsible for that content. If I’m publishing something that hasn’t been reviewed, the implications for anything a level above a person’s solo blog are substantial.”

“If you are an entity or a business and you publish something that has no checks and balances against it, it is a Pandora’s box.”

Is there a class of writers who write very low-quality content where that bar just got raised?

“Yes, super low-quality content exists and that market is already saturated, because if you want to produce that type of content with automation, you can today. You can produce that and you can get away with selling it. Good for you. That is not the business I ever want to be in.”

Will journalists be replaced with AI writing tools?

“Over time, the maturity model for writers has evolved. Think about it the first time you ever used a spell checker. It seemed alien, like it wasn’t going to actually be real. Now can you imagine not having a spell check?

Can you imagine not having a grammar checker or a tone checker? Being a writer’s market, we build technology that tells you whether your content is comprehensive, high-quality, or tells the story that an expert would tell. For some writers, that is still a little bit alien.

The fact that there’s an article about artificial intelligence and they don’t talk about machine learning and computer vision is a problem.

AI optimization software will say, an expert would have mentioned machine learning and you didn’t. That’s really helpful and it’s something that writers who adopt this type of technology early on can’t live without. It is currently in that maturity curve.

Now, what are the next things that are going to come back? Fact checking and structural integrity, being able to know whether an article fits well with a collection of content would be amazing. Fact checking if it’s AI and it’s not generated by demagogues.”- Jeff Coyle

Social media misinformation will continue to grow exponentially, and you will no longer be able to tell real information from false information.

Artificial Intelligence stands to fundamentally change the pace of news distribution.

The law has not caught up to the technology, making the rise of deepfake AI imagery a substantial threat to the news industry.

People will soon have trouble distinguishing fact from fiction if they no longer know who or what to trust.

“There are elements of traditional journalism that will likely go away. Oftentimes, it’s happening through ideologies that are being pushed, and ideologies are emotional where we see the manipulation occur. We also see that in the judicial system or in the political sphere.” -Bree Dail

Washington Post Reporter answers: Will AI replace journalists?

Washington Post journalist Noah Smith believes that AI will substantially impact journalism over time.

“As far as new innovations it could be helpful. The technology is useful, but it is mitigated by the potential downsides and continued erosion of trust in journalism.

It is definitely going to replace some journalists. The first thing that comes to mind is that AI can be used in every single story I write to reword, reformulate or change some words around. For those kinds of stories, absolutely. Those journalists who are doing that are ripe to be replaced.

For those who say AI will be the end of writers, maybe in one hundred years. There have always been companies who say they will write movie scripts and win Oscars with AI. That discussion has been taking place for the past 15 years. The companies never go anywhere, but they always get a lot of funding. It’s always really exciting and they get a few news stories published and go on.”

Journalist: How to use AI for Research

Are journalists using AI?

Personally, I find AI to be extremely valuable as a research tool. However, one critical problem is that I can never trust the output to be reliable. Half of the time the output is real; the other half of the time, the facts are completely false.

For journalists, this is a serious problem that can erode their professional reputation. One wrong fact from AI and your credibility can be destroyed overnight.

Even if AI is easier and more efficient to use, you have to weigh the costs and benefits against what you stand to lose if AI gets it wrong.

Sometimes, you can get real gems. Those gems often come in the form of 404 errors and broken links. These help me see which stories and articles have been entirely erased from the Internet. For me, that is a story in itself to explore.

What AI won’t show is often more important to investigate than what it will show.

That gives me a good starting point on where I need to look. By seeing what is broken, I can get a sense of where to look next. If I were to manually do this, I would have to use traditional SEO tools or spend hours searching through the Lumen database. In this respect, AI does save me a substantial amount of time.
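Part of this broken-link triage can be automated. The sketch below uses only Python’s standard library to send a HEAD request to each URL an AI tool cited and sort the results into live and broken lists. The function names are hypothetical, and it assumes the URLs have already been pulled out of the AI output; the status-fetching function is passed in as a parameter so the logic can be tested without network access.

```python
import urllib.error
import urllib.request


def fetch_status(url, timeout=10):
    """Return the HTTP status for url via a HEAD request, or None if there is no response."""
    try:
        # Building the Request inside the try also catches malformed URLs (ValueError).
        request = urllib.request.Request(url, method="HEAD")
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status  # final status after any redirects
    except urllib.error.HTTPError as err:
        return err.code  # the server answered with an error, e.g. 404 or 410
    except (OSError, ValueError):
        return None  # DNS failure, timeout, refused connection, or malformed URL


def triage_links(urls, get_status=fetch_status):
    """Split URLs into (live, broken); broken entries keep their status for review."""
    live, broken = [], []
    for url in urls:
        status = get_status(url)
        if status is not None and 200 <= status < 400:
            live.append(url)
        else:
            broken.append((url, status))
    return live, broken
```

Some servers reject HEAD requests (often with a 405), so a broken result may be worth retrying with a normal GET before you treat the page as removed. A dead link is only a starting point; the reporting still happens in tools like the Lumen database.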

How can newsrooms integrate AI into their workflow?

“When it comes to the big datasets and AI, that can always get better in terms of how the data is analyzed and different patterns that can be pulled in the data. Beyond transcription services, I’m not sure where AI or Machine Learning will fit in. I don’t know how it would help very much,” said Washington Post reporter Noah Smith.

JOURNALIST AI USE CASE:

Not having to transcribe interviews.

“If you think about AI more broadly, AI-generated transcription services have been a godsend. In some cases, transcribing interviews can take hours. But today, for instance, I did an interview instead of having to spend last night transcribing it.” -Bree Dail, Reporter

AI can also help reporters with the parts of their jobs they disdain such as adding SEO meta tags, keyword tagging, content optimization, and H2 tagging.

Key Takeaways for AI-written copy:

If a journalist uses generative AI tools to publish content, they must do the following:

- Have the content edited for typos and grammar

- Put it through thorough editing rounds

- Add a disclaimer that the article was not human-generated and clearly list where and how automation was used

- Make sure the tense is correct (AI sometimes gets it wrong)

- Separate unsubstantiated opinions from facts and reporting

- Ensure that thorough analysis has been added to the article (AI is notoriously bad at this)

- Make sure all facts are fact-checked and sourced/cited

- Corroborate any quotes attributed to others with the source as well as a third party (AI notoriously makes up false statements.)

Failure to do any of the above can lead to reputational harm and credibility issues over time.
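A first pass on quote checking can be scripted when you have your own interview transcript. The sketch below is a hypothetical helper, not a standard tool: it normalizes punctuation and case, looks for the quote verbatim, and otherwise reports how closely the best-matching transcript sentence resembles it using Python’s `difflib.SequenceMatcher`. The 0.9 similarity threshold is an assumption. A match only shows the words are in your transcript; it cannot replace confirming the quote with the source.

```python
import difflib
import re


def normalize(text):
    """Lowercase, unify curly apostrophes, drop other punctuation, collapse whitespace."""
    text = text.lower().replace("\u2019", "'").replace("\u2018", "'")
    text = re.sub(r"[^\w\s']", " ", text)
    return " ".join(text.split())


def verify_quote(quote, transcript, min_ratio=0.9):
    """Check whether a quote appears in a transcript.

    Returns ("verbatim", 1.0) for an exact match after normalization,
    ("close", ratio) if the most similar transcript sentence meets min_ratio,
    and ("not found", ratio) otherwise.
    """
    q = normalize(quote)
    if q and q in normalize(transcript):
        return "verbatim", 1.0
    sentences = re.split(r"(?<=[.!?])\s+", transcript)
    best = max(
        (difflib.SequenceMatcher(None, q, normalize(s)).ratio() for s in sentences),
        default=0.0,
    )
    return ("close" if best >= min_ratio else "not found"), best


# Hypothetical transcript excerpt.
transcript = "We approved the budget on Tuesday. The vote was 5 to 2."
print(verify_quote("\u201cWe approved the budget on Tuesday.\u201d", transcript))
```

A "close" result usually means the quote was paraphrased or trimmed, which is exactly the kind of discrepancy worth taking back to the source.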

“Technology is there to help make things easier. Oftentimes, technology can also be abused. Unfortunately, opinions have now become news, and things have gotten very grayscale. People need to go back to the reality of, why do we have to hold our kids to that standard? But professionals in the realm of information are not being held to that standard.” -Bree Dail, Reporter

RMG’s AI Prompts for Writers:

- Check Grammar. Fix grammar.

- Make me a better writer. How can I improve this article?

- You are an editor. Provide feedback, revisions, and suggested changes.

- Tighten up the copy and create a more compelling headline.

- Insert URL. Summarize this article.

- Insert URL. Write engaging social media copy to promote this article.

- Fix capitalization. Remove all caps.

- What is this article missing? Provide bulleted recommendations. Don’t hold back.

How can you use AI to improve your writing skills?

Beware: AI is a ruthless editor.

You can use AI to improve your writing by asking the AI tool to analyze your writing and provide feedback on areas for improvement. You can also use AI to generate headline ideas, suggest ways to make your content clearer, and summarize key points. If the AI can’t summarize the points in your article, it may be time to revisit the article to make sure it has clear key points.

How far away are current AI tools from fully automated content creation?

As AI writing tools move closer to automation, there are concerns about accuracy and trustworthiness of content. While AI can create the bulk of content, it can still generate inaccurate information, requiring manual fact-checking.

AI-generated audio. The next trend in journalism.

When it comes to the future of AI in Journalism, not everyone wants to read your reporting. Some people prefer to listen to it.

AI-powered text-to-speech and audio summarization is the next wave in content marketing and digital journalism.

“For any content creator, there’s no reason why you shouldn’t have an audio version of your article,” said Ron Jaworski, Founder of Trinity Audio. Trinity Audio dubs itself the AI audio of Spotify.

AI-generated audio apps like Trinity convert the text in your articles into an audio file. This creates an interactive audio experience for readers who want to engage with your content in different ways.

READ: How Companies are using audio AI

AI audio isn’t just a novelty; it is also critical for user accessibility. Jaworski said that AI-generated website audio can free up time for your users to consume your content throughout the day.

“The engagement with audio is much higher. It can increase time spent on your website, as well as conversion rate optimization (CRO) of current users.”

Jaworski says that not every user wants to read. “They don’t want to read it, they want to listen to it.”

TL;DR: Give users the option to consume content in audio form on the go.


News writing bots and the future of AI Journalism

Examples of Automated Journalism

The use of AI content tools to accelerate and amplify intellectually appropriate business writing is here. We have reached the age of AI content maturity.

“I love it as an ensemble with multiple approaches. Think about other ways of using artificial intelligence throughout the writing and content creation process,” said Coyle.

The generation process sits in the middle, but it can be paired with a different type of generation, such as template-based or data-based tools like Narrative Science, Automated Insights, or Arria, similar to Heliograf, the template-based AI content generation platform The Washington Post uses.
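Template-based systems like these fill pre-written sentence patterns with structured data. The internals of Heliograf and the products named above are not described here, so the sketch below is only a generic illustration: the `EarningsReport` fields, the template wording, the analyst-estimate field, and the "Acme Corp" example are all hypothetical. It shows why such tools are fast, and also why a single bad input number flows straight into published copy.

```python
from dataclasses import dataclass


@dataclass
class EarningsReport:
    """Hypothetical structured input, e.g. parsed from an earnings release."""
    company: str
    quarter: str
    revenue: float        # this quarter, in millions of dollars
    prior_revenue: float  # same quarter a year earlier, in millions of dollars
    eps: float            # reported earnings per share, in dollars
    eps_estimate: float   # analyst consensus estimate, in dollars


def earnings_story(r: EarningsReport) -> str:
    """Fill a fixed sentence template from an EarningsReport."""
    if r.prior_revenue <= 0:
        raise ValueError("prior_revenue must be positive to compute a change")
    change = (r.revenue - r.prior_revenue) / r.prior_revenue * 100

    # Pick the revenue clause based on the direction of the change.
    if change > 0:
        revenue_clause = f"revenue rose {change:.1f}% year over year to ${r.revenue:,.1f} million"
    elif change < 0:
        revenue_clause = f"revenue fell {abs(change):.1f}% year over year to ${r.revenue:,.1f} million"
    else:
        revenue_clause = f"revenue was flat year over year at ${r.revenue:,.1f} million"

    # Compare reported earnings per share with the estimate.
    if r.eps > r.eps_estimate:
        eps_clause = f"beating the ${r.eps_estimate:.2f} analysts expected"
    elif r.eps < r.eps_estimate:
        eps_clause = f"missing the ${r.eps_estimate:.2f} analysts expected"
    else:
        eps_clause = "in line with analyst expectations"

    return (
        f"{r.company} reported {r.quarter} earnings of ${r.eps:.2f} per share, "
        f"{eps_clause}, while {revenue_clause}."
    )


report = EarningsReport("Acme Corp", "third-quarter", 112.0, 100.0, 1.25, 1.10)
print(earnings_story(report))
```

The template never questions its inputs: if `prior_revenue` was parsed from the wrong line of a filing, the story states the wrong change with full confidence, which is why human review of automated copy still matters.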

Digiday: Can robots help reporters?

“There are ways that you can level up your content marketing strategy. But to use AI to replace writers, what are you actually replacing?

You’re replacing the execution of content that has the equivalent of something that requires exhaustive review, assessment and improvements.

Who you are replacing is not going to be universal.

You have writers publishing AI-generated content and saying, “I published thousands of posts to my blog this month.”

- How much work was done?

- How long is that success going to last on SERPs?

Many times, you will see people publishing content on disparate topics.

Some of the performance is lucky and very temporal. It’s going to lead to tremendous crashing for those websites over time.

Detection is critical and it’s just not meaningful for a business to take on that type of risk.

It would be like hiring fifty outsourcers on Fiverr or a writing network. Not checking their work and publishing it to your corporate blog is bananas. Don’t do it. Nobody can make that business justification.” -Jeff Coyle

The Future of Journalism in an AI-driven world.

It is rare to have a nuanced discussion on generative AI. People either believe that AI is coming to replace their jobs, or that all generative AI output is terrible.

There is no in-between.

We are entering a digital renaissance where journalists will have significant value in the content marketing process.

If you can produce content that AI cannot, you will win.

This will give rise to investigative journalism – the type of reporting and content research AI cannot produce.

That will be the defining factor in truly compelling content creation.

The key is to produce content that leads to true information gain, which is a core tenet of investigative journalism.

For example, what facts can you find that may have been left out of a corpus?

What can you discover that a model was never trained on? This will give you a competitive content advantage. If it’s not in the model, your competitors are less likely to be pumping out the same AI-generated content.

What is not in the model will be more important than what *is* in the model when it comes to creating valuable content in the future.

If it’s not in a model, it is more likely to be extremely valuable.

- How can we write a story that AI can’t write?

- What do we know that AI doesn’t know?

Ethical AI issues in Automated Journalism

As we have discussed, large language models are disrupting newsrooms. As more journalists use AI to write stories, serious ethical issues arise.

Emerging issues include:

Authorship. Who is the real author of the article? If a journalist uses machine learning models and generative AI tools to produce output, they are arguably not its real author, because the model was trained on other writers’ scraped work. Some of that work is copyright protected, which raises serious ethical considerations pertaining to IP and plagiarism. According to The U.S. Copyright Office, AI does not count as a real author of an article. AI can also inadvertently plagiarize other sources, depending on the data it was trained on.

The U.S. Copyright Office stated that only work created by a human author, with at least a minimal degree of creativity, is protectable by copyright. The U.S. Copyright Office also wrote that AI-generated work may be protected by copyright if a human is sufficiently involved in the creative process. “Sufficient human involvement may include selecting or arranging AI-generated material in a creative way or modifying material after it is created by AI.”

READ: Potential Implications of US Copyright Office Determination on AI-Generated Work

Journalistic Integrity & Accuracy. One common risk of using large language models in journalism is loss of credibility. AI frequently inserts fake quotes and attributes them to real people. AI does not have to adhere to the same ethical standards as human journalists. If the AI gives a journalist a quote from a source that never existed, the AI won’t take the fall for it; you will. Attributing fake quotes to real, living people is a serious ethical concern. False and misleading reporting can inject misinformation into the news cycle. Journalists who rely on AI can have their integrity questioned if they do not fact-check AI-generated text.

READ: OpenAI Sued by Authors Alleging ChatGPT Trained on Their Writing

Do you have any other ethical concerns about the use of AI in journalism?

“We’re going to see AI tools replace people who are doing the bare minimum. We are already seeing areas where people are being replaced by automation. The people who think that it’s going to be the end of writers or the people who think they can just pull things out and source them. They will be the people who are discredited very quickly. There are going to be people who do that. But those are the same people who are taking our work and passing it off as their own and not sourcing. Eventually, that’s going to weed out the people who are doing the bare minimum. To me, that is bare minimum work when you’re sourcing in that way.”-Bree Dail, Reporter

Avoiding legal pitfalls & AI quote attribution

The ethics of AI generated source quotes in public relations and journalism

Is it okay for ChatGPT to write quotes on behalf of subject matter experts as sources? (Hint: no!)

Machine Learning and AI quote attribution

“As a journalist, if and when I request a quote, I go directly to the source. If a Publicist says, ‘I have someone who could be an expert for you,’ I’m going to want to talk to that person.

We earn our credibility through our work. We also discredit ourselves through our work. No one does that for us.

It’s our responsibility to double check sourcing, which means we have to be engaged with the original source. That is my ethical understanding of what to do in journalism and it has been very successful in my work.” -Bree Dail

This worked well in a pre-AI world where all sources were human. But how will it fare in a post-AI world where chatbots can pose as subject matter experts in PR interviews?

What if the original source turns out to be AI instead of a human?

How can you fact check a source if the source is a machine and not a human?

If a Publicist provides an AI-generated quote for a source:

“If you are claiming that a source made a statement exclusively to me on a subject and the quote is AI-generated, I find that to be ethically questionable. I wouldn’t put it past a journalist to use it, because many journalists aren’t very aware of the technology side of the new AI tools and how they can be used or abused.”

Right now, we are seeing a real danger in the unbridled use of AI tools of this nature.

“For example, if you generate a statement with AI that says, ‘The most important thing about AI today is NLG,’ and then attribute that to Ben, that would absolutely be unethical. It would be close to plagiarism. It would be a lie. Italy is quite litigious against the press when it comes to defamation. A misused quote, or a quote that could come back at us, could be a serious issue. This would be an example of AI being used for a nefarious purpose.”

Suppose a statement was attributed to a source by the publicist who provided it, and I didn’t double-check my sourcing. I would be delegating that responsibility to a person I’ve hired. I hire help so that I spend less time on the initial research, but I still have to go back and triple-check my sourcing. But let’s say I used a quote like that and put it into print.

Now, let’s say Ben comes back and says, ‘I never said that. Where did you get that quote?’ Here in Italy, I could be sued. And I think there is more movement in that direction against the media, especially now that we have seen recent cases of media defamation.

We know that certain media outlets, such as The New York Times, will just make up anonymous sourcing.

Why do they use anonymous sourcing?

Just trust us. I have to deal with this in the Vaticanista world, too: ‘informed sources say.’ You’re essentially saying, ‘Trust me, I’m a journalist. Trust my word that these people are saying this,’ without providing any evidence.

It’s like a lawsuit trickle-down effect generated by AI.

“This is even worse, because now you have a quote that may be completely fake, and it could come back and cause a lot of problems not only for the journalist, but also for the person you’re supposedly quoting and the Publicist who provided the source quote. That type of abuse will result in lawsuits. If I use a quote like that, given to me by a publicist, and it turns out to be wrong, and the person I attributed it to comes to me and asks, ‘Where did you get that?’, I would turn to the person I hired.

I will speak to a source directly. In one case right now, I just got an exclusive with a governor in the U.S. for a piece I’m doing for Newsmax, and I went back to their office. I wanted to make it very clear, so I put it in writing to the office and asked, ‘May I attribute these words directly to the Governor, or do I need to attribute them to his office?’ I need to have that clarification in writing so that, legally, I don’t make a mess of attribution.” -Bree Dail

Key Takeaway: Never assume that a source quote provided for an interview came from a human. Always triple-check sourcing.

“When people ask me about the basic, Journalism 101 ethical standards of sourcing, I always try to remind them about citing sources in high school.

Do you remember in high school when you had to write book reports? You would get in trouble if you didn’t source your material in quotations with proper footnotes.

They were teaching you about responsibility. Professionals should be sourcing real experts in the field. That should be the bottom line and the ethical standard.” – Bree Dail

Taking Responsibility for AI-generated text

Jeff Coyle urges publishers to think about the next step in the content marketing process:

- How is that justifiable?

- What are you going to do next?

- What happens if there is an inquiry on it?

- Where did it come from?

The person who publishes the text needs to take responsibility for it; it’s theirs.

What they produce is theirs. They are ethically responsible for it. This is true for a content consultant, an in-house content marketer, or an external content marketing agency.

Think two moves ahead.

If someone sells an article and states that they wrote it, but they didn’t write it, that’s a completely different legal discussion.

“It’s something you’re getting away with.”

- Did you research and source the expert quote?

- What process did you use to get the quote?

When it comes to AI, a lot of people see the technology as a way to cut corners and take risks without consequences. But you are not really getting away with anything. You are gambling your entire corporate reputation, and in the end, someone will have to pay the price.

“It is the equivalent of building a business or a reputation on a pile of sticks instead of bricks. That’s the reality,” said Jeff Coyle, Founder of MarketMuse.

“This has happened many times in the content and search marketing space. Just because text is generated by GPT-4 doesn’t make it a novel process that no one has experienced. It is the modern-day equivalent of the article spinning that search marketers have done for years.”

We’re now at another level of AI maturity.

“In 2003, there was a software product called The Blogfather, which automated massive blogs and just pumped out posts. Article spinners have been around forever, built on the fundamental basics of natural language generation (NLG). There was a large media group called Myers Media Group, which you can still look up. They do what’s called macro SEO.

They use templates and databases to create content. Scroll to the bottom of the results on your favorite travel site. That text was probably generated with a natural language generation (NLG) system that says, ‘If you’re going to Atlanta, you are 1.8 miles away from the aquarium. Make sure you go to the Coca-Cola Museum. It is 2.4 miles from Petro City.’ That content has existed for a long time, and before that, there were other versions of it.

Is anyone worried that the person who had to historically write that text was not data-driven?

In 90% of cases, the article on every single stock page of your favorite stock site is no longer written by a person. The same is true of the real estate listings you see.

Those are all pieces of automated content.” -Jeff Coyle
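Coyle’s travel-site example describes template-based NLG: a fixed sentence whose slots are filled from database rows. The sketch below illustrates that idea in Python. The `ATTRACTIONS` rows, the `BLURB` template, and the `render_blurbs` helper are hypothetical, and the distances are placeholders rather than real measurements or any site’s actual system.

```python
from string import Template

# Hypothetical rows standing in for a travel database; values are placeholders.
ATTRACTIONS = [
    {"city": "Atlanta", "place": "the aquarium", "miles": 1.8},
    {"city": "Atlanta", "place": "the Coca-Cola Museum", "miles": 2.4},
]

# One fixed sentence template; only the $-slots change from row to row.
BLURB = Template("If you're going to $city, you are $miles miles away from $place.")


def render_blurbs(rows):
    """Fill the template once per database row (template-based NLG)."""
    return [
        BLURB.substitute(city=row["city"], miles=row["miles"], place=row["place"])
        for row in rows
    ]


for line in render_blurbs(ATTRACTIONS):
    print(line)
```

Every sentence has the same shape; scaling to thousands of pages only means adding rows, which is why this kind of content has been automated for so long.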

“The fringe use case, where someone responds to a media request by passing off content they didn’t source or generate as their own, would be the equivalent of extractive summarization.”

In artificial intelligence, there are two core types of summarization: abstractive summarization and extractive summarization.

How does extractive summarization differ from abstractive summarization?

Abstractive AI reads content and produces its own summary of that content, in its own words. That’s very powerful, by the way, and it’s very real. Large language models like GPT-3 provide this layer of technology. Abstractive summarization can also be applied as a layer to obfuscate generated content, and some very popular AI tools are already doing this.

Extractive AI takes snippets: it pulls sentences or passages verbatim out of the content it reviews. Extractive summarization marketed as one’s own work is plagiarism.
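Here is a minimal sketch of extractive summarization, assuming a simple word-frequency heuristic (one classic approach, not how any particular commercial tool works): each sentence is scored by the average frequency of its non-stopword words, and the top `k` sentences are returned unchanged. The function names, stopword list, and sample text are hypothetical. Every output sentence is copied verbatim from the input, which is why passing an extractive summary off as original writing amounts to plagiarism.

```python
import re
from collections import Counter

# Hypothetical stopword list; real systems use much larger ones.
STOPWORDS = {"the", "a", "an", "and", "of", "to", "in", "is", "it",
             "that", "for", "on", "with", "as", "are", "was", "said"}


def split_sentences(text):
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def content_words(sentence):
    """Lowercase words in the sentence, minus stopwords."""
    return [w for w in re.findall(r"[a-z']+", sentence.lower()) if w not in STOPWORDS]


def extractive_summary(text, k=2):
    """Return the k highest-scoring sentences, copied verbatim, in original order."""
    sentences = split_sentences(text)
    freq = Counter(w for s in sentences for w in content_words(s))

    def score(sentence):
        tokens = content_words(sentence)
        # Average word frequency, so long sentences are not automatically favored.
        return sum(freq[w] for w in tokens) / len(tokens) if tokens else 0.0

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    return [sentences[i] for i in sorted(ranked[:k])]


article = ("The city council approved the new budget on Tuesday. "
           "The budget increases funding for schools and parks. "
           "Critics said the budget ignores road repairs. "
           "The vote was 7 to 2.")

for sentence in extractive_summary(article, k=2):
    print(sentence)
```

An abstractive system would instead generate new sentences, which is what makes it harder to trace back to its source.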

If someone says, ‘I wrote this,’ when they didn’t write it, at some point that is going to catch up with them and create a PR nightmare. It doesn’t make any sense from a business perspective. If you want a writing career, it makes absolutely no sense to do that.

Similarly, if you desire to have a steady stream of inbound PR opportunities for executive thought leadership, it also makes no sense to do that. By putting a writer’s reputation in jeopardy because of AI, you are putting the executive’s reputation in jeopardy.

Doing that has serious long-term consequences that extend far beyond any short-term wins or current agency relationship.

The most concerning part of this is that oftentimes, clients won’t even know if a Public Relations firm is using AI to ghostwrite content on behalf of the executive when they pitch them to a media outlet. In the end, the liability will fall back on the client, not the agency, which is why it is critical to understand the risks and rewards of using automated tools in public relations.

However, if you say, ‘I source my content through my own research, conducted with the assistance of artificial intelligence,’ that is a different story. You have to be very upfront about that, and your AI content policy also has to reflect it. The FTC is very clear that any use of automated tools must be clearly disclosed by the business.

If you are rewriting the AI content and making fundamental edits, that is a different story. At that point, the content is yours. But AI generated content is never really yours.

It is usually the byproduct of models trained on stolen content without the creators’ consent. This raises serious ethical questions and also exposes your organization to liability if the original writer takes action against you for plagiarizing their work.

Article spinning is not immune to this either. It is very obvious when a few words have been moved around. AI article spinning is the modern-day form of plagiarism. Don’t do it.
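Spun text is easy to flag because it keeps most of the original’s word sequences. Below is a minimal sketch, assuming word-trigram “shingles” compared with Jaccard similarity (a common near-duplicate technique, not any specific plagiarism checker’s method). The `shingles` and `jaccard` helpers and the sample sentences are hypothetical.

```python
import re


def shingles(text, n=3):
    """Set of overlapping n-word sequences (shingles) in lowercased text."""
    tokens = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def jaccard(a, b):
    """Shared shingles divided by total distinct shingles (0.0 to 1.0)."""
    sa, sb = shingles(a), shingles(b)
    if not sa and not sb:
        return 0.0
    return len(sa & sb) / len(sa | sb)


original = "AI excels at synthesizing large volumes of data to the common denominator."
spun = "AI excels at synthesizing huge volumes of data to the common denominator."
unrelated = "Real investigative journalism takes time and primary sources."

print(round(jaccard(original, spun), 2))       # 0.54
print(round(jaccard(original, unrelated), 2))  # 0.0
```

Swapping a single word still leaves 7 of the 13 distinct trigrams shared, while the unrelated sentence shares none. A real checker would compare against a large corpus and choose its own threshold.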

Pro Tip: If the core structure of the story and research is someone else’s, don’t use it.

AI-Generated Journalism

What does generative AI mean for the future of journalism?

Closing Thoughts:

How will AI impact the future of journalism?

AI-powered tools can help journalists work more efficiently and effectively, allowing them to focus on more complex and creative tasks rather than spending time on routine tasks like transcription. Useful applications include:

- Pattern recognition

- Plagiarism detection

- Transcription

AI has the potential to make journalism workflows more efficient through automation, but it will not replace the core tenets of journalism.

AI cannot investigate, nor can it conduct interviews with primary sources.

The core tenets of journalism such as accuracy, fairness, and transparency must be upheld in the new era of AI-based journalism.

As a content marketing agency, our goal is to create the best and most extensive piece of content on any given topic on the Internet for our clients.

The further acceleration of AI will reinforce the need for strong journalism and research skills to truly stand out in a world of regurgitated content created with AI article spinning tools.

That type of content does not contribute to true information gain. If someone else can write it, or if you are using an AI tool to rewrite what someone else wrote, that is not journalism. It is plagiarism.

Helping consumers understand how to deploy ethical AI in the context of content marketing & PR is a core focus of our company.

While technology frequently changes, the importance of exercising critical thinking, research, and analysis will not.

Too often, what is missing in journalism today is historical context and analysis.

To understand today and tomorrow, you need to understand the past that brought us to this point.

In a social media driven economy, the trending topic is over-indexed, and the historical background is under-indexed.

Unfortunately, this has led to a black hole of analysis and strategic insight, which is necessary for readers to truly understand the issues and context on any given topic.

The idea of actual memory vs. artificial memory will impact the future of journalism.

The speed of innovation has made it possible for everyone to have an opinion and a hot take, which often comes at the expense of readers, because speed trumps quality of thought.

How long should it take to write an article?

Recently, there was a poll in a Facebook group asking how long it should take to write a new piece of content. Answers ranged from a few hours to a few days, tops. I replied, “a few months.”

Generative AI writers believe that nothing should take more than a few days to write. One commenter stated that by the time the article is published, it would lose topical relevance.

Real investigative journalism takes time, regardless of how quickly AI can generate an article. There is a difference between clickbait headlines and underground investigative work.

My fear is that people believe that the speed of AI will somehow accelerate the time it takes to do real reporting. It won’t. Encouraging human writers to keep up with machines will lead to people cutting corners and missing facts that can potentially make or break a story.

Reporters are always looking for the scoop or the story.

AI excels at synthesizing large volumes of data down to the common denominator, but the common denominator is typically neutral. The scoop cannot be found in the common denominator; it is often found at either end of it.

The road less traveled

AI is not the road less traveled; it is the road that everyone travels. There is no scoop to be found when you use an AI tool to write a story, unless of course you are looking to find the content that AI has already censored or dropped from indexed search results.

Journalists can leverage AI to process large amounts of data at warp speed to look for patterns in the data, which can lead to a scoop if you find an anomaly in the data. For example, if you are downloading court documents, there can often be thousands of files to sort through. An AI tool with advanced search parameters to detect fraud can speed up the process for a reporter on deadline.

TL;DR: Artificial intelligence writing software can help journalists save time on research, but it won’t reduce the time it takes to conduct in-depth investigative reporting.

Will AI replace journalists? Listen to the full recording:

Social Media Expert Kris Ruby, Founder of Ruby Media Group, shares her concerns on X’s Privacy Updates:

- AI: Twitter has the right to use any content that users post on the platform to train AI models, and users grant Twitter a worldwide, non-exclusive, royalty-free license to do so.

New X Privacy Policy: More questions than answers…

As X transforms into an AI-first social media platform, Social Media Expert Kris Ruby advises users to tread carefully and understand the long-term implications of publishing content on social media platforms that train on your content.

Social media, Journalists, and AI:

Using tweets to train AI models creates a conflict of interest for journalists. With Musk pushing journalists to publish on X, the possibility of having your content harvested for AI training data poses a serious intellectual property threat.

Do Not Train

Journalists need the ability to opt out of having their work scraped for AI training data.

Musk’s push to get journalists to publish on X is directly at odds with the new AI training clause in the terms of service. Musk states that it is “just public data,” but the data is the body of work a journalist publishes, which may include copyrighted material.

What are the consequences of using a social media platform that trains on your data?

Your reporting can be used to train AI models. You will not receive compensation when your writing is used to train Twitter’s machine learning models. Receiving ad revenue or subscription payment is not the same as being paid for your content to train a model.

The risk is not unique to X; it spans all platforms. But it is a critical topic for creators to consider before deciding which platform to publish on.

This is less of a risk for creators who do not plan on publishing long-form content on the platform – but a greater risk for journalists who plan to make Twitter their primary publishing platform of choice.

What choices do users have on social media when it comes to AI and content protection?

Ideally, Twitter would offer journalists and content creators a do not train button. Currently, that option does not exist.

Twitter also has a serious content theft issue, and plagiarism is rampant on the platform, with users stealing other people’s content without proper sourcing in an attempt to get monetized or go viral.

To protect your content, you can lock it as gated content and make it available to paid subscribers only. That still doesn’t solve the underlying issue of training data and IP concerns, but it can temporarily reduce the risk of plagiarism if your content is available to a smaller audience.

This is a short-term fix for a long-term problem. Ultimately, it is not a sustainable solution for the more significant IP issues at play regarding training data and artificial intelligence model training.

SUBSCRIBE to Kristen Ruby on Twitter for exclusive members-only content.

RESOURCES:

*During the original Twitter Space in 2021, Bree Dail was a reporter with Epoch Times Daily; she is now a reporter with The Daily Wire. During the same Twitter Space, Noah Smith was a reporter with The Washington Post.

ARTIFICIAL INTELLIGENCE COMPANIES | ABOUT US

Digital transformation. AI Consulting.

Ruby Media Group builds your brand by leveraging the latest innovative AI technology. With RMG, a human will always be in the loop.

RMG helps businesses harness the power of AI to drive growth and scale publicity outreach.

If you are interested in learning more about how RMG can help you leverage AI for your business, contact RMG today.

Kris Ruby | Social media expert with experience in Artificial Intelligence

Kris Ruby is the founder of Ruby Media Group, an award-winning PR, Content Marketing & Social Media agency. Kris has been on the cutting edge of marketing technology for 15+ years, raising awareness of the latest technology among founders across the nation so that they can benefit from emerging tech. From the advent of social media marketing to artificial intelligence in marketing, Kris is always on top of the latest social trends.

Ruby Media Group stays up to date on the latest trends and technologies in digital marketing and social media, including Twitter AI and machine learning.

Published by Ruby Media Group, Westchester, New York.

© 2023 Ruby Media Group. RubyMediaGroup® is a registered trademark.

Ruby Media Group is an award-winning NY Public Relations Firm and NYC Social Media Marketing Agency. The New York PR Firm specializes in healthcare marketing, healthcare PR and medical practice marketing. Ruby Media Group helps companies increase their exposure by leveraging social media and digital PR. RMG conducts a thorough deep dive into an organization’s brand identity, and then creates a digital footprint and comprehensive strategy to execute against.